Strength in numbers: the DfT analytical assurance framework

Updated 12 January 2022

Foreword

This document establishes, for the first time, the framework within which analysis is specified, produced and used within DfT. The approaches and conventions described here should, however, come as no surprise, building as they do on much of the good practice that exists across the Department.

Where these conventions are being made more formal, either through the use of analytical strategies or the requirement for analytical assurance signoff in submissions, the intention is not to hinder the timely provision of analytical advice, but instead to ensure that some of the uncertainty around process, risk and mutual understanding of analysis between analyst and policy partner is removed.

By driving early conversations and mutual responsibility between those responsible for delivering analysis and those responsible for using it, this more structured approach to assuring the quality of DfT analysis should help deliver the ambition for analytical advice to be at the heart of the policy agenda, driving innovative and evidence-based approaches to the challenges that DfT faces.

This framework sets out the fundamental importance of the dual role of analysts and their policy partners in ensuring that analysis is fit for the requirements of the Department.

Tracey Waltho

Director of Strategy and Analysis,

Chief Economist

Introduction to the Analytical Assurance Framework

The DfT Analytical Assurance Framework is the way the Heads of Analytical Professions and the Policy Head of Profession give assurance to the Permanent Secretary and Secretary of State that analysis used to inform decisions is ‘right’ - defined as striking the correct balance between robustness, timeliness, and cost, for the decision at hand. It also makes explicit the responsibilities of analysts and policy leads providing and using analysis to inform decisions.

This document outlines the DfT Analytical Assurance Framework (AAF), taking on board proposals agreed by the DfT’s Executive Committee (ExCo) in January 2013 to strengthen the standard of analytical assurance in DfT. The strengthening of the AAF was designed around the core principles of:

- ensuring every programme of analysis is sufficient to inform the decisions it supports

- ensuring mutual understanding, so that (a) those providing analysis fully understand the nature of the issues that their analysis is intended to illuminate; and (b) those using results of analysis to make or advise on decisions understand the broad nature of the analysis provided to them and in particular its strength and limitations

- ensuring communication of risks in the analysis and conveying any trade-offs between time, quality and cost that may have been made to the ultimate decision-maker

The overarching aim is to convey to decision-makers the strengths, risks and limitations in the way that the analysis has been conducted and the uncertainty in the analytical advice. This gives decision-makers visibility of the strength of the analysis and how much confidence and weight they can place on it in the final decision-making process.

This is embodied in the Analytical Assurance Statement included in submissions which covers three dimensions:

- the scope for challenge to the analysis

- the risk of an error in the analysis

- the uncertainty inherent in the analytical advice, and the degree to which this has been reduced

This document also gives guidance to DfT officials on good practice in implementing these principles. It is expected that a proportionate approach, which fits with existing good practice concerning governance processes, will be taken to achieving this.

Alongside the proposals agreed by ExCo, another driver for change that has been incorporated within the AAF is the cross-government Macpherson Review of Quality Assurance of Analytical Models.

Whilst the recommendations from the review are focussed on strengthening the quality assurance arrangements for business critical analytical modelling, they also address the issue of creating the right environment. These themes of leadership and building culture, capacity and control can be seen throughout this document, particularly in the roles and responsibilities for senior officials in section 2.

The following sections explain each of these and set down the key responsibilities of analysts and policy leads in specifying, producing, and using analysis to inform decisions. This document is supported by the DfT Guidance on the Quality Assurance of Analytical Models.

Analytical Roles and Responsibilities

This section sets out the roles and responsibilities in the delivery of both an analytical project and in a broader analytical programme. Here an analytical project is defined as a specific piece of analysis conducted, for instance, to support a single decision. A programme of analysis is broader, covering the range of individual analytical projects that are required to support decisions in a business area.

Roles and Responsibilities

Roles and Responsibilities within an Analytical Project

For any decision relying on a piece of analysis we can define some generic roles: the Analyst Lead, the Policy Lead, the Senior Model Owner (SMO - where a business critical analytical model is being used), and an Independent Reviewer. In practice these roles are likely to be identified as:

- Policy Lead: An official leading on the piece of policy work and relying on analytical advice [footnote 1]

- Analyst Lead: Leading on the provision of analytical input into the piece of policy advice

- SMO: the named Senior Model Owner for a business critical analytical model that is being used to form some of the analytical input into the policy decision

- Independent Reviewer: a competent official who is independent of the analytical and policy leads and of the piece of policy advice

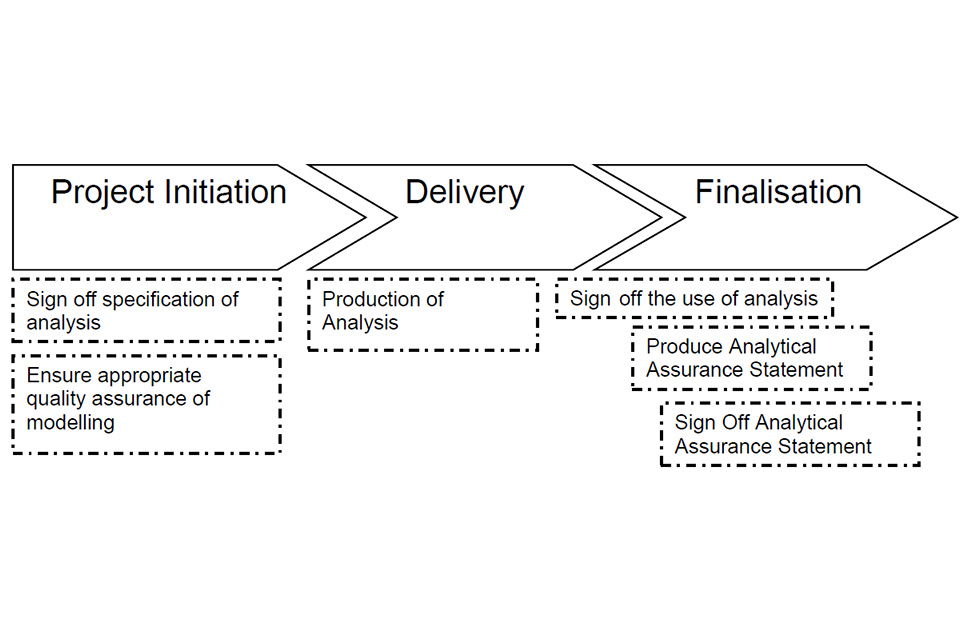

These roles come with a different mixture of responsibilities as the Analytical Project moves from initiation through delivery and then finalisation, highlighted in Figure 1.1 below.

Figure 1.1: Responsibilities over a Standard Project Lifecycle

Figure 1.1 Transcript

Stage 1. Project Initiation

Sign off specification of analysis

Ensure appropriate quality assurance of modelling

Stage 2. Delivery

Production of Analysis

Stage 3. Finalisation

Sign off the use of analysis

Produce Analytical Assurance Statement

Sign Off Analytical Assurance Statement


Taking each responsibility in turn:

- signing off the specification of analysis is the agreement of: what questions the analysis needs to answer; the balance between time, cost and quality; the approach to analysis that will be taken; and the limitations of the analysis. Analyst and policy leads are jointly responsible for this

- ensuring appropriate quality assurance of analytical modelling is an added responsibility when the piece of analytical advice relies on the use or development of a business critical analytical model. This responsibility is placed on the Senior Model Owner (SMO)

- the SMO is responsible for ensuring that model risks, limitations and major assumptions are understood by users of the model, and that the use of the model outputs is appropriate

- the production of analysis is the delivery of the analysis to meet the specification, drawing on and in line with the various analytical frameworks and guidance set out in Annex B and the processes established in section 2. Analyst leads are responsible for this

- signing off the use of analysis ensures that there is mutual understanding of the analysis that has been produced, including its risks and limitations, and that it is fit for the purpose intended. This should take place before the analysis is conveyed to decision makers and is the dual responsibility of analytical and policy leads and, where a business critical model is used, should be approved by the SMO

- producing the analytical assurance statement is the joint responsibility of the analytical and policy leads. The statement should set out a summary of the key risks and limitations of the analysis and any trade-offs that have been made between time, quality and cost during production. These factors are considered as part of three distinct dimensions: Scope for Challenge; Risk of Error; and Uncertainty. Detailed guidance on the Analytical Assurance Statement is available in section 2 and Annex D. Where a business critical analytical model has been used, the statement should also include a statement of the relevant quality assurance

Where analysis forms part of decision making by a Tier 1 or 2 Investment Board or a Minister or the Permanent Secretary and exposes the Department to significant legal, reputational or financial risk, the analytical assurance statement should be independently reviewed and cleared.

Independent review and clearance is aimed at ensuring that the facts around the level of assurance are presented accurately and that the overall level of assurance is correctly communicated. It is not aimed at checking and clearing the analysis itself.

Independent review is the responsibility of the Independent Reviewer: a qualified official acting on behalf of the Chief Analyst who is independent of the specification, production and use of the analysis. Where there is disagreement, or a need for further information that is not forthcoming, this should be escalated to the Chief Analyst. Where the assurance statement contains information on the Quality Assurance (QA) approach taken to modelling, this should also be signed off by the SMO.

A summary of these roles and responsibilities is provided in Table 1.1 below.

Table 1.1: Roles and Responsibilities in the Delivery of Analysis to Support a Decision

| Role | Sign off the specification of analysis | Ensure model quality assurance is appropriate | Production of analysis | Sign off the use of analysis | Production of Analytical Assurance Statement | Sign off of Analytical Assurance Statement |
|---|---|---|---|---|---|---|
| Analyst Lead | Yes | | Yes | Yes | Yes | |
| Policy Lead | Yes | | | Yes | Yes | |
| SMO | | Yes | | Yes | | Yes |
| Independent Reviewer | | | | | | Yes |
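Where sign-offs are tracked in a script or checklist tool, the allocation described in this section can be held as a simple lookup. The following is a minimal sketch only: the names `RESPONSIBILITIES` and `roles_for` are hypothetical, the entries are read from the text above rather than from any DfT system, and the SMO entries are assumed to apply only where a business critical analytical model is used.

```python
# Illustrative sketch: responsibilities per role, as described in the text of
# this section. Names are hypothetical, not part of any DfT tool.
RESPONSIBILITIES = {
    "Analyst Lead": {
        "sign off specification",
        "production of analysis",
        "sign off use of analysis",
        "produce assurance statement",
    },
    "Policy Lead": {
        "sign off specification",
        "sign off use of analysis",
        "produce assurance statement",
    },
    # Assumption: the SMO role only exists when a business critical model is used.
    "SMO": {
        "ensure model quality assurance",
        "sign off use of analysis",
        "sign off assurance statement",
    },
    # Independent review applies only to the higher-risk decisions described above.
    "Independent Reviewer": {"sign off assurance statement"},
}


def roles_for(responsibility, business_critical_model=False):
    """Return the roles (alphabetically) that hold a given responsibility."""
    roles = [
        role
        for role, duties in RESPONSIBILITIES.items()
        if responsibility in duties
    ]
    if not business_critical_model:
        # Without a business critical model there is no SMO involvement.
        roles = [role for role in roles if role != "SMO"]
    return sorted(roles)
```

For example, `roles_for("sign off use of analysis", business_critical_model=True)` lists the Analyst Lead, Policy Lead and SMO, reflecting the dual sign off plus SMO approval described above.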

Senior Roles and Responsibilities within the Delivery of an Analytical Programme

The delivery of an Analytical Project takes place within the broader context of the Analytical Programme for the Department or business area. For instance, the appraisal of investment in a motorway may be thought of as an Analytical Project, but the overarching roads investment strategy and the analysis that supports the decisions within it can be thought of as the Analytical Programme. Within this broader perspective on the delivery of analysis, there are some wider roles:

- Chief Analyst: Director of analysis within DfT and independent of the policy directorates

- Senior Analyst: usually defined as being the Analyst Head of Division or the senior analyst embedded within a policy directorate

- Analytical Head of Profession: The heads of the various professions that produce analysis within DfT

- Chair of Analytical Governance Board: The chair of the Analytical Programme Board, into which Analytical Projects report

- Chair of Policy Governance Board: The chair of the overall policy programme board into which the analytical board reports

As with the delivery of an Analytical Project, the roles within the delivery of an Analytical Programme come with a mixture of responsibilities. In particular:

- ensuring that the right culture exists, including dual ownership by Policy and Analytical leads and adherence to the principles and processes established here, within the AAF. It is also about providing leadership and reward for adherence to strong quality assurance principles. This is the responsibility of the Chief Analyst, the Heads of the Analytical and Policy professions, and the Senior Analysts/Analyst Heads of Division

- ensuring the right capacity exists, including the right mix of skills and resources. This involves aligning the analytical resources of the department to priorities, and ensuring that there is the time and space for analysts to deliver analysis appropriate to the needs of their policy partners. It is also about ensuring that the department has the right mix of skills, capabilities and experience to deliver its programme, both internally and through access to external resources. This is the joint responsibility of the Chief Analyst, the Heads of the Analytical and Policy professions, and the senior analysts/analysts Heads of Division

- ensuring that the Analytical Programme meets the requirements of the business area. This is similar to the ambition of mutual sign off for the specification of analysis at the start of an Analytical Project, but in a broader sense, so that it establishes the main drivers behind analytical requirements. This may, for example, be the delivery of a business case, as is the case for HS2, or to support the delivery of a programme of rail refranchising. This is the responsibility of the Chief Analyst, the Senior Analysts/Analyst Heads of Division, the Chair of the Analytical Governance Board and the Chair of the Policy Governance Board

- providing project level control through challenge to culture, including signoffs. Challenging the signoffs that take place within Analytical Projects helps to ensure that the requirements and ambition established around culture are being fulfilled. This is the responsibility of the Senior Analyst/Analyst Heads of Division and the Chair of the Analytical Governance Board

- escalating risk, which supports the requirement to reward a culture that champions good quality assurance principles. Escalation should enable risks to be managed at the appropriate level and any trade-offs between time, quality and cost to be made transparently, within the overall risk appetite of the organisation. This is the responsibility of the Senior Analyst/Analyst Heads of Division, the Chair of the Analytical Governance Board and the Chair of the Policy Governance Board

A summary of these roles and responsibilities is provided in Table 1.2 below.

Table 1.2: Roles and Responsibilities in the Delivery of a Programme of Analysis

| Role | Ensure the right culture exists including dual ownership and adherence to AAF | Ensure the right capacity exists including the right mix of skills and capability | Ensure analytical programme meets requirements of business area | Provide project level control through challenge to culture including signoffs | Escalate risks |
|---|---|---|---|---|---|
| Chief Analyst (Director of Analysis) | Yes | Yes | Yes | | |
| Senior Analyst | Yes | Yes | Yes | Yes | Yes |
| Analytical and Policy Head of Profession | Yes | Yes | | | |
| Chair of Analytical Governance Board | | | Yes | Yes | Yes |
| Chair of Policy Governance Board | | | Yes | | Yes |

Analytical Strategies: Governance and Approvals

The major fields of DfT business should be covered by an Analytical Strategy. Simply put, an Analytical Strategy ensures that the programme of analysis is aligned to the requirements of the business area and has a governance structure integrated with that of the policy programme. It can be seen as a vehicle for planning out production and resource requirements, identifying big decisions and potential problems, and for ensuring QA is built into the process on both the policy and analytical side.

In particular an Analytical Strategy should ensure that the analysis delivered is fit for illuminating the decisions that decision-makers need to take, when they take them.

This chapter sets out in detail what an Analytical Strategy looks like, how the governance of the strategy should work and how the approvals process should be defined. The precise formulation of an Analytical Strategy will differ according to the circumstances of the business area to which it refers. Given that, the description of what an Analytical Strategy looks like is expressed through principles and aims rather than being overly prescriptive.

The principles and processes used within Analytical Strategies can be characterised as a formal statement of good practice in the management of analytical projects. As such, they should also be applied to areas of DfT business that are not covered by a formal strategy, albeit in a proportionate way. The processes for doing so are established in this document, but it will be for individual areas to develop the detailed approach to application that fits with the structures and requirements of their area.

The delivery of analytical advice is often intertwined with the use of an analytical model. Where that is the case, the appropriate approach to quality assurance of that modelling should be considered. Guidance on the principles and processes that should be applied is set out in the document DfT Quality Assurance of Analytical Models.

However, where a model meets the criteria for being defined as business critical, some extra roles and responsibilities are triggered. These include, amongst others, the role of SMO and the responsibility to ensure the models are included on the DfT Register of Business Critical Analytical Models. The processes for fulfilling these responsibilities are established in Section 3 of this document.

The Purpose of an Analytical Strategy

An Analytical Strategy ensures that, for major fields of DfT business, the programme of analysis delivers the right quality of analysis to fulfil the requirements of the business area and has a governance structure integrated with that of the policy programme.

An Analytical Strategy should be a short, focussed document that performs three key functions:

- establishes the principles by which the Analytical Programme is defined and prioritised

- establishes what the upcoming policy needs and resulting analytical needs are, and the Analytical Projects that will deliver that analysis

- specifies the governance of the analysis, including the roles established for an Analytical Programme and how it will integrate with the governance of the policy programme to which it relates

The way in which the Analytical Strategy helps to define the analytical requirements is described in Figure 2.1 below.

Figure 2.1: How the Policy Requirements are delivered through an Analytical Strategy

Step 1. Policy Requirement

The policy requirements of the area relate to the big decisions that need to be taken and the key dates for actions and delivery. This feeds into the Analytical Strategy.

Step 2: Analytical Strategy

The Analytical Strategy takes the policy requirements and describes the Analytical Programme that flows from them and the way in which that programme will be governed.

Step 3: Analytical Programme

The Analytical Programme sets out in detail the series of projects that need to be delivered and identifies the resources and timescales required.

Step 4: Analytical Projects

The Analytical Projects specify, produce and deliver the analysis that constitutes the programme. Taken together, these projects will ultimately fulfil the policy requirement.

Principles for Defining the Analytical Strategy

Overarching principles can encourage analysts and their policy colleagues to think strategically about the requirements of analysis for the final decision and step back from the detail of the range of activities that are likely to be required. They can then act as a tool for defining precisely what is required to support the policy decisions and act as a filter for prioritisation. This can be especially useful if the policy requirements or work specification (e.g. timetable, resource limits) change during production.

A detailed Analytical Strategy can then help respond to changing conditions and re-evaluate priorities for production. By getting joint sign up to the principles from both the policy and analytical side, the work programme that then falls out of them is placed within a context that is understood.

The principles presented below are expected to form the basis of all Analytical Strategies, although there can of course be variation in the specifics from area to area. These principles will help to define and prioritise the Analytical Programme and how it is delivered.

i) Prioritising work to where it will have the most impact and most reduce risk and uncertainty in decision-making

Analysis can be thought of as a tool for reducing uncertainty in decision-making, so an Analytical Strategy should follow from that and be focussed on prioritising analytical advice and input to the areas where it will have most impact and where it will most reduce risk and uncertainty in decision-making.

ii) Plan for the big decision points

As the first principle prioritises the decisions that need to be supported by analysis, the Analytical Strategy should ensure that the analysis is planned to meet the key decision points that flow from those decisions.

iii) Develop the evidence base

Consider whether the evidence base is sufficient to support the analysis required and whether it can be further developed within the timescales. There may be risks that flow from the decision not to further develop the evidence, or indeed to do so.

iv) Choose and monitor quality standards from the outset

This ensures that the appropriate level of quality assurance is considered at the outset and explicitly set against the time and resources available for delivery. See the DfT ‘Quality Assurance of Analytical Modelling’ for guidance on quality standards when analytical models are being used.

v) Define risk appetite

Keeping a programme of analysis on track will often require decisions to be taken over scope, approach or changes to requirements. An analytical strategy should establish the risk appetite of the Chair of the Policy Governance Board (usually the Policy SRO) and the process for owning, escalating and mitigating risks that flow from that.

- When considering threats the concept of risk appetite embraces the level of exposure which is considered tolerable and justifiable should it be realised. In this sense it is about comparing the cost (financial or otherwise) of constraining the risk with the cost of the exposure should the exposure become a reality and finding an acceptable balance. See the Risk Policy and Guidance Document (section 3) for information on defining risk appetite.

vi) Follow and deploy the Analytical Frameworks

As the Analytical Assurance Framework makes clear, there are a number of external frameworks that can shape and drive the analysis that is required. In the example of investment decisions, Value for Money advice will need to be given in a way consistent with DfT’s approach to Transport Appraisal, TAG and the DfT Transport Business Case. These approaches are both defined, at least in part, by the HMT Green Book. See Annex B for additional complementary frameworks.

Governance and reporting routes

It is essential that the specification, production and use of analysis has governance integrated with that of the policy work. The interaction between policy requirements, the Analytical Programme and the various Analytical Projects that deliver that programme, requires the identification of reporting routes.

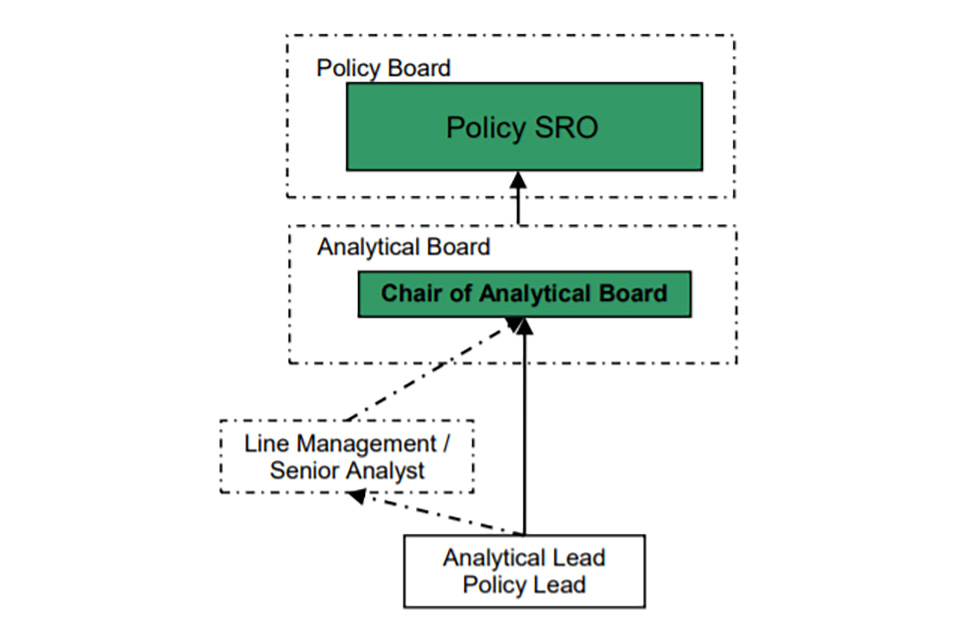

These routes ensure that the specification, production and use of analysis that is signed off by the analytical and policy leads feeds through to the ultimate decision-maker in a controlled way. It also provides a clear route for escalation of risks and areas of dispute. A simplified example of this is provided in Figure 2.2 below:

Figure 2.2 Reporting under the Analytical Strategy approach

The Analytical Lead and Policy Lead work together to specify and deliver analysis.

The Policy and Analytical Leads may report to the Chair of the Analytical Board through their line management chain.

The Analytical Board supports the Chair of the Board (the named individual, reporting into Policy SRO) and monitors delivery of the analytical work programme.

The Policy SRO is the named individual responsible for the overall policy.

The Chair of the Board has a key role in this governance structure as the named individual responsible for the overall Analytical Programme. The chair will act as the gateway between the requirements of the Policy SRO and the deployment of analytical resource. The Chair will also act as a conduit for swiftly escalating risks and issues to the appropriate level.

The Analytical Board identified in the figure above will play a key role in supporting the fulfilment of the Chair’s responsibilities. The remit of the board may include:

- preparing, for recommendation to the relevant Policy Board, the analytical strategy that will support the wider programme of work

- reviewing the content of the analytical strategy on a periodic basis and operating as the gateway for substantial additional requests for related analysis

- monitoring delivery of the analytical work programme and agreeing regular progress reports to the relevant policy boards

- proactively resolving uncertainties that impact on the delivery of the agreed programme plan

- identifying and resolving trade-offs between the timing and quality of analysis

- ensuring that value for money (VfM) procedures, and other complementary frameworks, are implemented in an appropriate, proportionate and timely fashion

Analytical Approvals within the Analytical Strategy

The Analytical Assurance Framework and the governance structures established above highlight where the interfaces occur in the overall analytical approvals process. This section sets out in greater detail what those approvals should be, based on the roles, responsibilities and accountabilities identified with them.

Approving the Analytical Strategy

The analytical strategy should be produced by the Senior Analyst, Senior Policy Official and the Chief Analyst.

Once produced the Analytical Strategy should be challenged and agreed by the Chair of the Analytical Board and approved by the Chair of the Policy Board at the Policy Board.

Approving the Analytical Programme

The detailed Analytical Programme that is defined by the Analytical Strategy should be produced and agreed by the Analytical and Policy Leads, challenged by the Analytical Board and agreed by the Chair of the Analytical Board. This should then be approved by the SRO at the Policy Board.

Approving Analytical Projects

The set of required Analytical Projects is established by the Analytical Programme. Within each analytical project there are three distinct stages: specification, production and delivery. Figure 2.3 shows an example of good practice concerning approvals at each stage of an analytical project:

Figure 2.3 Approvals for an Analytical Project

Step 1. Analytical requirements

Defined by the Analytical Strategy and Analytical Programme

Step 2. Specification of the Analysis

Signed off by the Analytical Lead and Policy Lead

Challenged by Line Management and Analytical Board

Step 3. Production of the Analysis

Monitored by Line Management and Analytical Board

Step 4: Delivery of the Analysis

Signed off by Analytical Lead and Policy Lead

Challenged by Line Management and Analytical Board

Step 5: Use of the Analysis accompanied by an Analytical Assurance Statement

Signed off by an Independent reviewer

Further details

Specification of Analysis

Having taken the requirements for the analysis from the Analytical Programme, at the first stage (specification) the policy lead and analyst lead are responsible for signing off the planned analysis. It must be fit for the purpose intended and its limitations, risks and uncertainty understood. Any risks, disagreements or trade-offs that have been made should be escalated to the Analytical Board.

The Chair of the Analytical Group is responsible for challenging the analyst and policy lead over their success in achieving mutual sign off. There may also be a role for line management or Senior Analyst if they are providing an intermediate challenge before reporting to the Chair.

Where the specification of analysis means that a business-critical analytical model will be used, there is an additional role for the SMO to approve the quality assurance regime proposed by the analytical and policy lead.

Production of Analysis

The production of the analysis is the responsibility of the lead analyst and the analytical group is responsible for monitoring production against milestones.

Where there are requests for the release of intermediate outputs for use in submissions or investment board papers, the approvals process should follow that described in the delivery of analysis and analytical assurance statements sub-section below. The Analytical Assurance Statement will need to reflect the intermediate nature of the analytical advice and the consequential risks, limitations and uncertainties.

Delivery of Analysis

At the delivery stage, the analysis should be signed off by the policy and analytical lead. This confirms that the analysis meets the requirements as set out in the specification and that risks, limitations, and uncertainty are coherently understood. The Analytical Board should challenge the sign off before agreeing that the analysis has been delivered. Where there are disagreements about the analysis that have prevented mutual sign off, these should be escalated to the Analytical Group.

Where the analysis relies on the use of business critical modelling, the sign off should also include an indication from the SMO that they are content with the quality assurance that has been undertaken, with the use to which the model is being put and the explanation of any risks and limitations.

Approvals for business critical models are set out in more detail in section 3.

Approving the Use of Analysis - the Analytical Assurance Statement

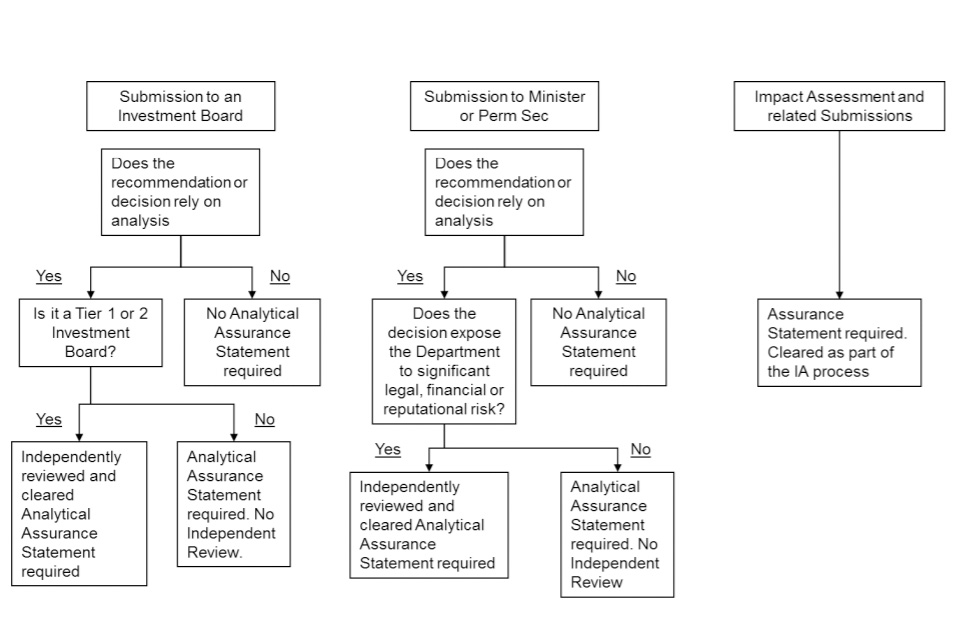

The use of the analysis in submissions to Ministers or Investment Boards to aid decision making should include an Analytical Assurance Statement. Annex E gives detailed guidance on what should be in the statement and how it should be presented. Figure 2.4 below sets out when a statement is required and when independent review and clearance via Transport Appraisal and Strategic Modelling (TASM) is required.

Figure 2.4 Requirements for an Analytical Assurance Statement and when it needs to be Independently Reviewed and Cleared

Transcript of Figure 2.4.

Process 1: Submission to an Investment Board

Does the recommendation or decision rely on analysis?

If Yes - proceed to Tiers.

If No - No Analytical Assurance Statement required - end of process.

Tiers

Is it a Tier 1 or 2 investment board?

If Yes - Independently reviewed and cleared Analytical Assurance Statement required.

If No - Analytical Assurance Statement is required - no Independent Review.

Process 2: Submission to a Minister or the Permanent Secretary

Does the recommendation or decision rely on analysis?

If Yes - proceed to Risks section.

If No - no Analytical Assurance Statement required - end of process.

Risks

Does the decision expose the Department to significant legal, financial or reputational risk?

If Yes - Independently reviewed and cleared Analytical Assurance Statement required.

If No - Analytical Assurance Statement is required - no Independent Review.

Process 3: Impact Assessment and Related Submissions

For any Impact assessment and related submissions, an Assurance Statement is required.

Cleared as part of the IA process.
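The three processes in Figure 2.4 amount to a small decision procedure, which can be sketched in code as a check on one's reading of the figure. The following is an illustration only, not a DfT tool: the function name, the route labels and the `Requirement` enumeration are hypothetical, and whether a risk counts as "significant" remains a judgement that no code can make.

```python
from enum import Enum


class Requirement(Enum):
    """Possible outcomes of the Figure 2.4 decision process (labels are illustrative)."""
    NONE = "No Analytical Assurance Statement required"
    STATEMENT = "Analytical Assurance Statement required, no independent review"
    REVIEWED = "Independently reviewed and cleared Analytical Assurance Statement required"
    IA = "Assurance Statement required, cleared as part of the IA process"


def statement_requirement(route, relies_on_analysis=True, tier=None,
                          significant_risk=False):
    """Return the assurance requirement for a submission.

    route: "investment_board", "minister_or_perm_sec" or "impact_assessment"
           (hypothetical labels for Processes 1, 2 and 3).
    tier: investment board tier, used only for Process 1.
    significant_risk: significant legal, financial or reputational risk,
                      used only for Process 2.
    """
    if route == "impact_assessment":
        # Process 3: a statement is always required, cleared through the IA process.
        return Requirement.IA
    if route not in ("investment_board", "minister_or_perm_sec"):
        raise ValueError(f"unknown route: {route!r}")
    if not relies_on_analysis:
        # Processes 1 and 2: no reliance on analysis ends the process.
        return Requirement.NONE
    if route == "investment_board":
        # Process 1: Tier 1 or 2 boards require independent review and clearance.
        return Requirement.REVIEWED if tier in (1, 2) else Requirement.STATEMENT
    # Process 2: independent review depends on the significance of the risk.
    return Requirement.REVIEWED if significant_risk else Requirement.STATEMENT
```

For instance, `statement_requirement("investment_board", tier=3)` returns `Requirement.STATEMENT`: a statement is still required, but without independent review.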


As set out in Section 1, it is the joint responsibility of the policy and analyst lead to produce the statement. When clearance is required, it is provided by Transport Appraisal and Strategic Modelling (TASM) via [email protected] or by the economist peer reviewer via [email protected] where the Analytical Assurance Statement concerns analysis contained in an Impact Assessment.

Annex D contains more detailed guidance on when and how a statement is produced and presented.

The production of the statement is the joint responsibility of the analytical and policy/operational/delivery lead, working on the preparation of advice. Early conversations and sign off of the specification of the analysis should enable straightforward production of the assurance statement for final sign-off.

The Analytical Assurance Statement is designed to convey to decision-makers the strengths, risks and limitations in the way that the analysis has been conducted and the uncertainty in the analytical advice. In order to guide the content of the statement, analysts and their policy partners should think about the response in three dimensions: the scope for challenge to the analysis, the risks of an error in the analysis and the uncertainty inherent in the analytical advice and the degree to which this has been reduced.

The first two dimensions are directly related to the assurance of the analytical process and should be proportional to the impact of the analysis. The assurance described by the combination of these two dimensions should be given an explicit ranking of low, medium or high.

The final dimension, uncertainty, may be reduced by higher levels of assurance but the answer may still remain uncertain. In such an example, the overall assurance of the analysis may be high, but the fact that the advice remains bounded by uncertainty should be clearly explained.

1). Reasonableness of the Analysis / Scope for Challenge

- have we been constrained by time or cost, meaning further proportionate analysis has not been undertaken?

- is there further analysis that could lead to different conclusions?

- does the analysis rely on appropriate sources of evidence?

- how reliable are the underpinning assumptions?

2). Risk of Error / Robustness of the Analysis

- has there been sufficient time and space for proportionate levels of quality assurance to be undertaken?

- how complicated is the analysis?

- how innovative is the approach?

- have sufficiently skilled staff been responsible for producing the analysis?

3). Uncertainty

- what is the level of inherent uncertainty (i.e. the level of uncertainty at the beginning of the analysis) in the analysis?

- has the analysis reduced the level of uncertainty? What is the level of residual uncertainty (the level of uncertainty remaining at the end of the analysis)?

Proportionality is applied across all three elements - a high cost project with significant reputational and legal risks would require proportionally more assurance than a low cost project with limited legal and reputational considerations. This should be accounted for when deciding whether sufficient assurance has taken place for each of the three elements.

The guidance document ‘Quality Assurance of Analytical Modelling’ provides information on how to assess the level of proportionality to be applied in assurance. This is based on the aggregated impact of the analysis.

Within submissions, the statement should be a short paragraph or a couple of sentences, setting out the level of assurance and the main reason or reasons why that level is correct.

Analytical Approvals outside Analytical Strategies

Analytical Strategies are designed to govern the specification, production and use of analysis within the Department’s major fields of business. The principles that underpin analytical strategies are however relevant for the use of all analysis in the Department. Indeed, the approach to signoffs should be similar, albeit conducted in a proportionate fashion.

Principles

Analytical approvals outside of analytical strategies should still be focussed on the mutual ownership of the specification and use of analysis by analysts and their policy partners. Early discussions about requirements will enable appropriate analysis to be delivered and any risks, limitations and uncertainty to be understood in advance.

Governance

Governance should be delivered through the appropriate line management chain. Where useful, applying good project management techniques may imply the formation of a project board to govern the analysis, where the board contains the user of analysis (a policy partner), a supplier (the provider of the analytical resource), a project manager (the lead analyst) and a project executive (the SMO in analytical strategy terms, but likely to be the line manager of the lead analyst).

Such a board can then be used in much the same way as an analytical board, with the project executive providing final sign off.

Approvals - Specification

Approval should be delivered by a mutual sign off between the analyst and their policy partner that the analysis proposed will meet the requirements of the business area and that risks, limitations and uncertainties are mutually understood. This sign off may take the form of a simple email between the two parties, or as the decision of the project executive, when project management processes are in place.

Approvals - Delivery

When the analysis has been delivered, mutual sign off between analyst and policy partner should take place to ensure that the analysis meets requirements and that risks, limitations and uncertainty are understood and appropriately communicated.

Approvals: The Use of Analysis and the Analytical Assurance Statement

3. Business Critical Analytical Models

The Accounting Officer is responsible for ensuring that business critical analytical models are identified in a central register and are subject to an appropriate quality assurance regime. This chapter establishes the definition of business critical, the process for ensuring that the register of models is comprehensive and accurate, and for ensuring that the QA regime is appropriate.

Definition of Business Critical

The Macpherson Review of quality assurance of analytical modelling in government provided the following factors for determining whether a model is business critical:

- the modelling drives essential financial and funding decisions

- the model is essential to the achievement of business plan actions and priorities

- additionally, or alternatively - errors could engender serious financial, legal, reputational damages or penalties

The DfT guidance on the Quality Assurance of Analytical Models provides a model impact matrix (see Annex C), which attempts to provide a more objective measure of the model impact. This can be used to assess the impact of an analytical model and determine whether it is likely to be on the business critical list.

Governance

Every business critical model in DfT has a specialist Senior Model Owner (SMO), notified of their appointment via a letter from the Chief Analyst. The SMO is an appropriately senior individual responsible throughout the model’s life cycle and who signs off that the model is fit for purpose, prior to the model’s use. The sign off ensures that:

- the QA process used is compliant and appropriate

- model risks, limitations and major assumptions are understood by the users of the model

- additionally - the use of the model is appropriate

The SMO can be either an analytical or policy professional. As the sign off covers both model development and output use it potentially straddles both professions. As such, the SMO may need to seek assurance from the profession that they do not cover, to ensure there is a single coherent confirmation.

The governance of a business critical model can be aligned to the governance of the analytical strategy and the policy to which that strategy relates. However, it may also be that the model applies to multiple strategies and the governance needs to stand slightly apart.

Approvals

The SMO should refer to the DfT guidance on Quality Assurance of Analytical Models to aid the development of an appropriate quality assurance regime. The guidance includes a governance checklist and highlights the type of sign offs required at each stage of the model development lifecycle.

It will be the responsibility of the analytical lead to develop and apply the appropriate quality assurance regime to the development or use of the analytical model, in light of the expectations of the SMO. Sign off from the SMO should take place at two points (see table 1.1):

- at specification: when the QA regime is specified, to signal approval that it is appropriate

- at delivery: after the model has been developed or used and before the release of the results, to indicate approval that the QA regime has been applied

Where intermediate results are released, the SMO should approve any statements about the risks, limitations and uncertainties that may be derived from the incomplete analysis or failure to complete the full quality assurance processes.

Submissions

Key submissions using results from the model should summarise the QA that has been undertaken, including the extent of expert scrutiny and challenge. They should also confirm that the SMO is content that the QA process is compliant and appropriate, model risks, limitations and major assumptions are understood by users of the model, and the use of the model outputs is appropriate.

It is likely that this sign off will often interact with the requirement to produce an Analytical Assurance paragraph in submissions to Investment Boards or Ministers. Where that is the case, the Independent Reviewer will need to be satisfied that the SMO has approved the text relating to model QA.

Maintaining the Business Critical Register

The Chief Analyst’s Office (CAO) will maintain the register on behalf of the Chief Analyst. To ensure that the register is comprehensive and accurate, the process for removal from, addition to and verification of the register is set out below.

Removal of a Model from the Register

To remove a model from the business critical register, the named SMO for the model should email the business critical model register inbox at [email protected], copied to the Chief Analyst, explaining why the model is no longer business critical. Reasons for this decision may include:

- the model will no longer be used

- the scope of the model use has been downgraded such that it is no longer business critical

Addition of a model to the register

A model may be added to the business critical register where:

- a new model is planned, in development or finalised and meets the criteria

- an existing model is enhanced such that the new scope meets the criteria

As there remains a degree of subjectivity around the definition of business critical, the three likely scenarios for an addition to the register are outlined below:

Where the modelling is non-business critical

The decision that a new piece of modelling is not business critical should be mutually signed off between the analyst and policy partner, perhaps via email, and copied up the line management chain. The sign off should provide a short summary, not necessarily longer than two or three sentences, of why the modelling is not business critical. This sign off should be transparently stored for audit purposes.

Where the modelling is business critical

The model developer, user or policy customer should contact the business critical model register inbox with a description of the model, why it is felt to be business critical, what the model impact score is and who the most suitable SMO is. The proposed SMO should be copied into the email.

CAO representatives will formally respond, with an SMO appointment letter, copied to the relevant Director and to the Chief Analyst and the model will be placed on the business critical register.

Where the modelling is possibly business critical

Where there is some doubt over whether a model should be on the business critical register, the model developer, user or policy customer should contact the business critical model register inbox with a description of the model, why it might be business critical, what the model impact score is and who the most suitable model SMO is likely to be.

CAO will consider the information and discuss with the proposer, before formally responding with an explanation of whether or not the model should be placed on the register, copied to the proposed SMO, the relevant Director and the Chief Analyst.

Where the categorisation is disputed or there remains genuine uncertainty, the final decision will be taken by the Chief Analyst.
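
The three scenarios above can be summarised as a simple lookup from the initial assessment to the steps that follow. This is a minimal sketch for illustration: the `Assessment` enum, the `next_steps` function and the wording of each step are assumptions made for this example, paraphrasing the text above.

```python
from enum import Enum


class Assessment(Enum):
    """Initial view of a model's status (hypothetical labels)."""
    NOT_CRITICAL = "non-business critical"
    CRITICAL = "business critical"
    POSSIBLY_CRITICAL = "possibly business critical"


def next_steps(assessment: Assessment) -> list[str]:
    """Return the steps described above for the given scenario."""
    if assessment is Assessment.NOT_CRITICAL:
        return [
            "Analyst and policy partner mutually sign off a short summary of why the modelling is not business critical",
            "Copy the sign off up the line management chain",
            "Store the sign off transparently for audit purposes",
        ]
    if assessment is Assessment.CRITICAL:
        return [
            "Email the register inbox with the model description, rationale, model impact score and proposed SMO (copied in)",
            "CAO responds with an SMO appointment letter, copied to the relevant Director and the Chief Analyst",
            "The model is placed on the business critical register",
        ]
    # Remaining case: some doubt over whether the model is business critical.
    return [
        "Email the register inbox with the model description, why it might be business critical, model impact score and likely SMO",
        "CAO discusses with the proposer and formally responds with a decision and explanation",
        "If the categorisation is disputed or remains uncertain, the Chief Analyst takes the final decision",
    ]
```

Calling `next_steps(Assessment.POSSIBLY_CRITICAL)` lists the escalation route ending with the Chief Analyst.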

Publication of the Register

It is the ambition that the Department should publish the register annually, alongside the Department’s accounts.

Testing Compliance

Ongoing compliance with the requirement to place business critical modelling on the DfT register will form part of the Internal Audit programme to strengthen controls in the area of analytical modelling risk.

Annex A: Glossary of Terms

A.1 Analytical Strategy: for major fields of DfT business, an analytical strategy establishes the principles by which the analysis provided meets the requirements of the business area, to the timescales required.

A.2 Analytical Approvals Process: the process by which analysis is approved as being fit for the purpose intended and risks and limitations are conveyed to decision-makers.

A.3 Specification of Analysis: the dual process between analysts and their policy partners that ensures analysis will meet requirements.

A.4 Production of Analysis: the process by which analysts produce the analysis, in line with the specification.

A.5 Use of Analysis: the dual process by which analysts and their policy partners ensure that the use to which analysis is put is appropriate.

A.6 Analytical Group/Board: the governance through which the delivery of analysis is monitored and risks are communicated and escalated.

A.7 Analytical Project: a specific piece of analysis.

A.8 Analytical Programme: the broad programme of analytical work described by the Analytical Strategy or overarching analytical requirements of a business area. May comprise a set of analytical projects.

Annex B: Analytical Frameworks, Processes and Guidance

B.1 There are several inter-related but distinct analytical frameworks, processes, and guidance documents which shape analytical advice. These build assurance by providing consistency of methods and assumptions across projects and programmes, within DfT and across government.

B.2 Analyst leads are responsible for ensuring the agreed specification and production of analysis fits with these frameworks, processes, and guidance documents.

The Civil Service Code

B.3 The broadest framework that determines the provision of analytical advice is the Civil Service Code. Amongst other things, the code establishes a duty to provide information and advice on the basis of the evidence and accurately present options and facts.

The Green Book

B.4 The HMT Green Book provides the detailed framework and guidance by which Departments subject all new policies, programmes and projects, whether revenue, capital, or regulatory, to comprehensive but proportionate assessment. Initially, a wide range of options should be created and reviewed with the purpose of developing a value for money solution that meets the objectives of government action.

It sets out how proposals should be appraised, before significant funds are committed and forms the basis for the TAG appraisal guidance, the Transport Economic Case and Impact Assessments.

B.5 The Green Book also establishes that the analysis of investment and spending does not stop at the point of delivery. Specifically, it raises the issues of ex-post evaluation that should enable the feedback loop from implementation back into appraisal, applying the evidence of past projects to future decisions. HMT supplementary guidance to the Green Book incorporates good practice guidance from other departments.

The Magenta Book: Evaluation

B.6 If the Green Book describes the necessity of evaluation, the Magenta Book sets out in detail how evaluation should be conducted. As an evaluation will be based on the principle of comparing the world as it would have been against the world as it is after an intervention, it will often be the case that a proper evaluation relies on the appropriate methods being identified and data being collected, at the start of the project. The result is that the possibility of an evaluation should be built into the analytical specifications of a project at the outset.

The Transport Business Case

B.7 To support decision-making by investment boards, the Department has recently adopted the Transport Business Case approach. This five-case approach mimics the recommended HMT approach to the production of business cases. Advice is given on the strategic, economic, financial, commercial and management strengths of each proposal. The five case summaries are completed by the scheme promoter, with support from DfT Central staff when appropriate.

Each case is then cleared by the relevant DfT Centre of Excellence, with reviewer comments included in summary form and submitted to the Investment Board in support of the decision-making process. The Transport Business Case applies to all capital and resource investment decisions in DfT.

The Value for Money Framework

B.8 The Value for Money framework supports the Transport Business Case by providing guidance on the completion of the Economic Case. However, the VfM framework is broader than the Transport Business Case approach because in theory it covers all submissions to investment boards, the Permanent Secretary or Ministers that have VfM implications.

Officials are required to prominently include a short statement to highlight any major concerns with the VfM position of the proposition and to clear that with TASM. A more detailed analysis of the VfM position is routinely included as either a more substantive piece of text in the body of the submission or as an Annex. These statements are generally produced by local economists and again, will be signed off by TASM.

B.9 See Value for Money guidance

The Better Regulation Process

B.10 The Better Regulation Framework[footnote 2] is overseen by the Better Regulation Executive. The DfT Better Regulation and Transposition Policy Unit is responsible for ensuring the Framework is followed by DfT and its agencies.

B.11 All policies which require clearance from the Reducing Regulation Committee (RRC) must be accompanied by analysis that is proportionate to the scale and likely impacts of the proposal. The standard format for this is an Impact Assessment which summarises the rationale for Government intervention, the options considered and the expected costs and benefits.

Deregulatory and smaller regulatory proposals can follow a ‘fast track’ process, with lower mandatory requirements. DfT requires Impact Assessments to be produced for fast track measures, as well as in cases where RRC clearance is not required but the policy affects the public or private sector and civil society organisations.

B.12 Before seeking clearance from RRC the Impact Assessment must be submitted to the Regulatory Policy Committee (RPC) for scrutiny and opinion, or a case must be made to the RPC that the measure is suitable to follow the fast track. Clearance can generally not be sought without a ‘fit for purpose’ opinion or a confirmation of its suitability for the fast track from the RPC.

Before going to the RPC or being published each Impact Assessment is quality assured and cleared by the Better Regulation Unit and an economist peer reviewer on behalf of the Chief Economist.

TAG

B.13 The Department has an extensive set of guidance for the completion of transport appraisal, TAG. This ensures consistent standards are applied to all transport investments and allows for comparisons across modes. The guidance cuts across most of the investment appraisal work of the department, forming the foundations for the preparation of most economic cases that feed into investment appraisal, business case and impact assessments.

Quality Assurance of Analytical Modelling

B.14 The Department is implementing a set of Quality Assurance guidelines that apply to analytical modelling. These guidelines are based around 7 key principles:

- a wide range of Quality Assurance processes can be deployed and they should go wider than merely checking that a model calculates without errors

- the level of assurance required should increase with the assessed impact of the model and consequently of a model error

- the more complex or innovative the approach, the higher the risk of model error, and therefore the greater the level of QA that should be undertaken

- the approach to quality assurance will differ between a model in development and a model in use and should be explicitly considered

- where model development and use is outsourced, the DfT model owner and policy customer should ensure that the appropriate QA regime is in place and record that fact

- quality Assurance should be embedded within the governance structure of analytical models and/or the governance structure of a policy project

- if an analytical model is defined as business critical, a specific set of roles, responsibilities and accountabilities are triggered

B.15 The guidance itself is designed to be flexible, to allow local DfT analysts and policy partners to determine the most effective approach in their areas.

That said, the guidance does make clear that the consideration of an appropriate QA regime is fundamental to the use of analytical modelling and should be facilitated by an appropriate governance structure. The approach to governance set out in section 2 meets this requirement, particularly through the use of analytical strategies and in the description of analytical assurance in Ministerial submissions and submissions to boards.

DfT Risk Management Policy

B.16 The Updated DfT Risk Management Policy (May 2013) explains in detail best practice for identifying, understanding and handling risk. It details best practice actions to identify risks early, communicate them, escalate them and mitigate them where possible. The Risk Impact matrix, used to assess the severity of a risk, is comparable to the Model Impact and Model Complexity matrices (included in Quality Assurance of Analytical Modelling Annexes C and D) used to decide the level of QA required for a given analysis.

Managing Public Money

B.17 There are a number of external frameworks which directly impact on the work of DfT analysts. The guidance on carrying out fiduciary obligations responsibly is bolstered by the HMT guidance note on Managing Public Money. Amongst other things, this guidance sets out how the Permanent Secretary and Department should ensure that the organisation’s procurement, projects, and processes provide confidence about good value.

Annex C: Recommendations from the Macpherson Review

C.1 All business-critical models in Government should have appropriate quality assurance of their inputs, methodology and outputs in the context of the risks their use represents. If unavoidable time constraints prevent this happening then this should be explicitly acknowledged and reported.

C.2 All business-critical models in Government should be managed within a framework that ensures appropriately specialist staff are responsible for developing and using the models, as well as quality assurance.

C.3 There should be a single Senior Model Owner for each model (“SMO”) through its lifecycle, and clarity from the outset on how QA is to be managed. Key submissions using results from the model should summarise the QA that has been undertaken, including the extent of expert scrutiny and challenge. They should also confirm that the SMO is content that the QA process is compliant and appropriate, that model risks, limitations and major assumptions are understood by users of the model, and the use of the model outputs is appropriate.

C.4 The Accounting Officer’s governance statement within the annual report should include confirmation that an appropriate QA framework is in place and is used for all business critical models. As part of this process, and to provide effective risk management, the Accounting Officer may wish to confirm that there is an up-to-date list of business critical models and that this is publicly available. This recommendation applies to Accounting Officers for Arm’s Length Bodies, as well as to departments.

C.5 All departments and their Arm’s Length Bodies should have in place, by the end of June 2013, a plan for how they will create the right environment for QA, including how they will address the issues of culture, capacity and control. These plans will be expected to include consideration of the aspects identified in Box 4.A in chapter 4 of the Macpherson report.

C.6 All departments and their Arm’s Length Bodies should have in place, by the end of June 2013, a plan for how they will ensure they have effective processes – including guidance and model documentation – to underpin appropriate QA across their organisation. These plans will be expected to include consideration of the aspects identified in Box 4.B of chapter 4 of the Macpherson report. To support this recommendation, succinct guidance setting out the key, generic issues that drive effective quality assurance will be added to “Managing Public Money” – which offers guidance on how to handle public funds properly.

C.7 To support the implementation of these recommendations, the review recommends establishing an expert departmental working group to continue to share best practice experience and to help embed this across government.

C.8 Organisations’ progress against these recommendations should be assessed 12 months after this review is published. HMT will organise the assessment, possibly with support from another department.

Annex D: Guidance on Analytical Assurance Statements

Analytical Assurance Statements

D.1 Analytical Assurance Statements are produced by Department for Transport officials, to highlight the degree of assurance attached to the analysis upon which Ministers and Boards are making decisions.

What is an Analytical Assurance Statement

D.2 An Analytical Assurance Statement is a short summary of the level of assurance that can be attributed to a piece of analysis that forms part of the decision-making process.

Why is an Analytical Assurance Statement needed?

D.3 Analysis is integral to reducing uncertainty in decision-making and plays an important role in shaping, ranking and informing investment and policy decisions. To be fully informed, decision-makers must be aware of the robustness of the analytical advice and consequently how much weight to attach to it in the final reckoning.

When is an Analytical Assurance Statement needed?

D.4 An Analytical Assurance Statement is produced as part of any submission to Ministers, Investment Boards or the Permanent Secretary that use analysis to support decision-making.

Independent Review of the Statement

D.5 The content of the analytical assurance statement should be independently reviewed when:

- the analytical advice plays a major role in decision-making by Tier 1 and Tier 2 investment boards

- the decision could expose the department to legal, financial or reputational risks

How is an Analytical Assurance Statement Produced?

D.6 The production of the statement is the joint responsibility of the analytical and policy/operational/delivery lead, working on the preparation of advice. Early conversations and sign off of the specification of the analysis should enable straightforward production of the assurance statement for final sign-off.

D.7 The Analytical Assurance Statement is designed to convey to decision-makers the strengths, risks and limitations in the way that the analysis has been conducted and the uncertainty in the analytical advice. In order to guide the content of the statement, analysts and their policy partners should think about the response in three dimensions: the scope for challenge to the analysis, the risks of an error in the analysis and the uncertainty inherent in the analytical advice and the degree to which this has been reduced.

D.8 The first two dimensions are directly related to the assurance of the analytical process and should be proportional to the impact of the analysis. The assurance described by the combination of these two dimensions should be given an explicit ranking of low, medium or high. The final dimension, uncertainty, may be reduced by higher levels of assurance but the answer may still remain uncertain. In such an example, the overall assurance of the analysis may be high, but the fact that the advice remains bounded by uncertainty should be clearly explained.

1). Reasonableness of the Analysis / Scope for Challenge

- have we been constrained by time or cost, meaning further proportionate analysis has not been undertaken?

- is there further analysis that could lead to different conclusions?

- does the analysis rely on appropriate sources of evidence?

- how reliable are the underpinning assumptions?

2). Risk of Error / Robustness of the Analysis

- has there been sufficient time and space for proportionate levels of quality assurance to be undertaken?

- how complicated is the analysis?

- how innovative is the approach?

- have sufficiently skilled staff been responsible for producing the analysis?

3). Uncertainty

- what is the level of inherent uncertainty (i.e. the level of uncertainty at the beginning of the analysis) in the analysis?

- has the analysis reduced the level of uncertainty? What is the level of residual uncertainty (the level of uncertainty remaining at the end of the analysis)?

D.9 Proportionality is applied across all three elements - a high cost project with significant reputational and legal risks would require proportionally more assurance than a low cost project with limited legal and reputational considerations. This should be accounted for when deciding whether sufficient assurance has taken place for each of the three elements.

The guidance document ‘Quality Assurance of Analytical Modelling’ provides information on how to assess the level of proportionality to be applied in assurance. This is based on the aggregated impact of the analysis.

D.10 Once the statement has been agreed between the policy and analyst leads, it should be sent for independent review and clearance, along with the submission, to [email protected].

How is an Analytical Assurance Statement Presented?

D.11 Within submissions, the statement should be a short paragraph or a couple of sentences, setting out the level of assurance around the first two dimensions - low, medium or high - and the main reason or reasons why that level is correct. This should be used to frame the final section of the statement, covering the uncertainty that remains once analysis has been applied.

How is an Analytical Assurance Statement cleared?

D.12 When the statement requires independent review within the Better Regulation process, it will be cleared as part of the peer review process.

D.13 For clearance of statements contained within submissions or papers to investment boards, the responsibility for providing independent clearance resides within Transport Appraisal and Strategic Modelling (TASM). Submissions should be sent to [email protected]. Every effort will be made to ensure swift clearance is provided. This will be aided by a brief overview of the position in the covering email and a follow-up conversation to clarify any issues.

What is being cleared?

D.14 Independent review and clearance is aimed at ensuring that the facts around the level of assurance are presented accurately and that the overall level of assurance is correctly communicated. It is not aimed at checking and clearing the analysis itself.

Contacts

General enquiries and clearance: [email protected]

Further Guidance:

DfT Guidance on Analytical Model Quality Assurance

Impact Assessment Proportionality Guidance