Generative AI Framework for HMG (HTML)

Published 18 January 2024

Acknowledgements

The publication of this report has been made possible by the support from a wide number of stakeholders.

Central government department contributions have come from Home Office (HO), Department for Environment, Food & Rural Affairs (DEFRA), Department for Business and Trade (DBT), Foreign, Commonwealth & Development Office (FCDO), Department for Science, Innovation and Technology (DSIT), Cabinet Office (CO), Department for Work and Pensions (DWP), HM Treasury (HMT), HM Revenue and Customs (HMRC), Ministry of Defence (MoD), Ministry of Justice (MoJ), Department for Levelling Up, Housing and Communities (DLUHC), Department of Health and Social Care (DHSC), Department for Transport (DfT), Crown Commercial Service (CCS), Government Legal Department (GLD) and Number 10 Data Science team.

Arms length bodies, devolved administrations and public sector bodies contributions have come from National Health Service (NHS), HM Courts and Tribunals Service (HMCTS), Government Internal Audit Agency (GIAA), Information Commissioner’s Office (ICO), Office for National Statistics (ONS), Driver and Vehicle Licensing Agency (DVLA), Met Office, Government Communications Headquarters (GCHQ) and Scottish Government.

Industry leaders and expert contributions have come from Amazon, Microsoft, IBM, Google, BCG, the Alan Turing Institute, the Oxford Internet Institute, the Treasury Board of Canada Secretariat.

User research participants have come from a range of departments and have been very generous with their time.

Foreword

At the time of writing, it has been a year since generative artificial intelligence (AI) burst into public awareness with the release of ChatGPT. In that time, the ability of this technology to produce text, images and video has captured the imagination of citizens, businesses and civil servants. The last year has been a period of experimentation, discovery and education, where we have explored the potential - and the limitations - of generative AI.

In 2021, the National AI Strategy set out a 10 year vision that recognised the power of AI to increase resilience, productivity, growth and innovation across the private and public sectors. The 2023 white paper A pro-innovation approach to AI regulation sets out the government’s proposals for implementing a proportionate, future-proof and pro-innovation framework for regulating AI. We published initial guidance on generative AI in June 2023, encouraging civil servants to gain familiarity with the technology, while remaining aware of risks. We are now publishing this expanded framework, providing practical considerations for anyone planning or developing a generative AI solution.

Generative AI has the potential to unlock significant productivity benefits. This framework aims to help readers understand generative AI, to guide anyone building generative AI solutions, and, most importantly, to lay out what must be taken into account to use generative AI safely and responsibly. It is based on a set of ten principles which should be borne in mind in all generative AI projects.

This framework differs from other technology guidance we have produced: it is necessarily incomplete and dynamic. It is incomplete because the field of generative AI is developing rapidly and best practice in many areas has not yet emerged. It is dynamic because we will update it frequently as we learn more from the experience of using generative AI across government, industry and society.

It does not aim to be a detailed technical manual: there are many other resources for that: indeed, it is intended to be accessible and useful to non-technical readers as well as to technical experts. However, as our body of knowledge and experience grows, we will add deeper dive sections to share patterns, techniques and emerging best practice (for example prompt engineering. Furthermore, although there are several forms of generative AI, this framework focuses primarily on Large Language Models (LLMs), as these have received the most attention, and have the greatest level of immediate application in government.

Finally, I would like to thank all of the people who have contributed to this framework. It has been a collective effort of experts from government departments, arms-length bodies, other public sector organisations, academic institutions and industry partners. I look forward to continued contributions from a growing community as we gain experience in using generative AI safely, responsibly and effectively.

David Knott,

Chief Technology Officer for Government

Principles

We have defined ten common principles to guide the safe, responsible and effective use of generative AI in government organisations. The white paper A pro-innovation approach to AI regulation, sets out five principles to guide and inform AI development in all sectors. This framework builds on those principles to create ten core principles for generative AI use in government and public sector organisations.

You can find posters on each of the ten principles for you to display in your government organisation.

- Principle 1: You know what generative AI is and what its limitations are

- Principle 2: You use generative AI lawfully, ethically and responsibly

- Principle 3: You know how to keep generative AI tools secure

- Principle 4: You have meaningful human control at the right stage

- Principle 5: You understand how to manage the full generative AI lifecycle

- Principle 6: You use the right tool for the job

- Principle 7: You are open and collaborative

- Principle 8: You work with commercial colleagues from the start

- Principle 9: You have the skills and expertise needed to build and use generative AI

- Principle 10: You use these principles alongside your organisation’s policies and have the right assurance in place

Principle 1: You know what generative AI is and what its limitations are

Generative AI is a specialised form of AI that can interpret and generate high-quality outputs including text and images; opening up the potential for opportunities for organisations, including delivering efficiency savings or developing new language capability.

You actively learn about generative AI technology to gain an understanding of what it can and cannot do, how it can help and the potential risks it poses.

Large language models lack personal experiences and emotions and don’t inherently possess contextual awareness, but some now have access to the internet.

Generative AI tools are not guaranteed to be accurate as they are generally designed only to produce highly plausible and coherent results. This means that they can, and do, make errors. You will need to employ techniques to increase the relevance and correctness of their outputs, and have a process in place to test them. You can find more about what generative AI is in our Understanding generative AI section and what it can and cannot do for you in the Building generative AI solutions section.

Principle 2: You use generative AI lawfully, ethically and responsibly

Generative AI brings specific ethical and legal considerations, and your use of generative AI tools must be responsible and lawful.

You should engage with compliance professionals, such as data protection, privacy and legal experts in your organisation early in your journey. You should seek legal advice on intellectual property equalities implications and fairness and data protection implications for your use of generative AI.

You need to establish and communicate how you will address ethical concerns from the start, so that diverse and inclusive participation is built into the project lifecycle.

Generative AI models can process personal data so you need to consider how you protect personal data, are compliant with data protection legislation and minimise the risk of privacy intrusion from the outset.

Generative AI models are trained on large data sets, which may include biased or harmful material, as well as personal data. Biases can be introduced throughout the entire lifecycle and you need to consider testing and minimising bias in the data at all stages.

Generative AI should not be used to replace strategic decision making.

Generative AI has hidden environmental issues that you and your organisation should understand and consider before deciding to use generative AI solutions. You should use generative AI technology only when relevant, appropriate, and proportionate, choosing the most suitable and sustainable option for your organisation’s needs.

You should also use the AI regulation white paper’s fairness principle, which states that AI systems should not undermine the legal rights of individuals and organisations. And that they should not discriminate against individuals or create unfair market outcomes.

You can find out more in our Using generative AI safely and responsibly section.

Principle 3: You know how to keep generative AI tools secure

Generative AI tools can consume and store sensitive government information and personal identifiable information if the proper assurances are not in place. When using generative AI tools, you need to be confident that your organisation’s data is held securely; that the generative AI tool can only access the parts of your organisation’s data that it needs for its task.

You need to ensure that private or sensitive data sources are not being used to train generative AI models without the knowledge or consent of the data owner.

Generative AI tools are often hosted in places outside your organisation’s secure network. You must make sure that you understand where the data you give to a generative AI tool is processed, and that it is not stored or accessible by other organisations.

Government data can contain sensitive and personal information that must be processed lawfully, securely and fairly at all times. Your approach must comply with the data protection legislation.

You need to build in safeguards and put technical controls in place, this includes: content filtering to detect malicious activity and validation checks to ensure responses are accurate and do not leak data.

You can find out more in our Security, Data protection and privacy, and Building the solution sections.

Principle 4: You have meaningful human control at the right stage

When you use generative AI you need to make sure that there are processes for quality assurance controls which include an appropriately trained and qualified person to review your generative AI tool’s outputs and validation of all decision making that generative AI outputs have fed into.

When you use generative AI to embed chatbot functionality into a website, or other uses where the speed of a response to a user means that a human review process is not possible, you need to be confident in the human control at other stages in the product life-cycle. You must have fully tested the product before deployment, and have robust assurance and regular checks of the live tool in place. Since it is not possible to build models that never produce unwanted or fictitious outputs (i.e. hallucinations), incorporating end-user feedback is vital. Put mechanisms into place that allow end-users to report content and trigger a human review process.

You can find out more in our Ethics, Data protection and privacy, Building The solution and Security sections.

Principle 5: You understand how to manage the full generative AI lifecycle

Generative AI tools, like other technology deployments, have a full project lifecycle that you need to understand.

You and your team must know how to choose a generative AI tool and how to set it up. You need to have the right resource in place to support day-to-day maintenance of the tool; you need to know how to update the system, and how to close the system securely down at the end of your project.

You need to understand how to monitor and can mitigate generative AI drift, bias and hallucinations. You have a robust testing and monitoring process in place to catch these problems.

You should use the Technology code of practice to build a clear understanding of technology deployment lifecycles, and understand and use the NCSC cloud security principles.

You should understand the benefits, other use cases and applications that your solution could support across government. The Rose Book provides guidance on government-wide knowledge assets and The Government Office for Technology Transfer can provide support and funding to help develop government-wide solutions.

If you develop a service you must use the Service Standard for government.

You can find out more about development best practices for generative AI in our Building the solution section.

Principle 6: You use the right tool for the job

You should ensure you select the most appropriate technology to meet your needs. Generative AI is good at many tasks but has a number of limitations and can be expensive to use. You should be open to solutions using generative AI as they can allow organisations to develop new or faster approaches to the delivery of public services, and can provide a springboard for more creative and innovative thinking about policy and public sector problems. You can create more space for you and your people to problem solve by using generative AI to support time-consuming administrative tasks.

When building generative AI solutions you should make sure that you select the most appropriate deployment patterns and and choose the most suitable generative AI model for your use case.

You can find out about how to choose the right generative AI technology for your task or project in our Identifying the right use cases, Patterns, Picking your tools and Things to consider when evaluating LLMs sections.

Principle 7: You are open and collaborative

There are lots of teams across government who are interested in using generative AI tools in their work. Your approach to any generative AI project should make use of existing cross-government communities, where there is a space to solve problems collaboratively.

You should identify which groups, communities, civil societies, Non-governmental Organisations (NGOs), academic organisations and public representative organisations have an interest in your project. You should have a clear plan for engaging and communicating with these stakeholders at the start of your work.

You should seek to join cross-government communities and engage with other government organisations. Find other departments who are trying to address similar issues and learn from them, and also share your insights with others. You should reuse ideas, code and infrastructure where possible.

Any automated response visible to the public such as via a chatbot interface or email should be clearly identified as such (e.g. “This response has been written by an automated AI-chatbot”)

You should be open with the public about where and how algorithms and AI systems are being used in official duties (e.g. GOV.UK digital blogs). The UK Algorithmic Transparency Recording Standard (ATRS) provides a standardised way to document information about the algorithmic tools being used in the public sector with the aim to make this information clearly accessible to the public.

You can find out more in our Ethics section.

Principle 8: You work with commercial colleagues from the start

Generative AI tools are new and you will need specific advice from commercial colleagues on the implications for your project. You should reach out to commercial colleagues early in your journey to understand how to use generative AI in line with commercial requirements.

You should work with commercial colleagues to ensure that the expectations around the responsible and ethical use of generative AI are the same between in-house developed AI systems and those procured from a third party. For example, procurement contracts can require transparency from the supplier on the different information categories as set out in the Algorithmic Transparency Recording Standard (ATRS).

You can find out more in our Buying generative AI section.

Principle 9: You have the skills and expertise needed to build and use generative AI

You should understand the technical requirements for using generative AI tools, and have them in place within your team.

You should know that generative AI requires an understanding of new skills such as prompt engineering and you, or your team, should have the necessary skill set.

You should take part in available civil service learning courses on generative AI, and proactively keep track of developments in the field.

You can find out more in our Acquiring skills section.

Principle 10: You use these principles alongside your organisation’s policies and have the right assurance in place

These principles and this framework set out a consistent approach for the use of generative AI tools for UK government. While you should make sure that you use these principles when working with generative AI, many government organisations have their own governance structures and policies in place, and you also should follow any organisation-specific policies.

You need to understand, monitor and mitigate the risks that using a generative AI tool can bring. You need to connect with the right assurance teams in your organisation early in the project lifecycle for your generative AI tool.

You need to have clearly documented review and escalation processes in place, this might be a generative AI review board, or a programme-level board.

You can find out more in our Governance section.

Understanding generative AI

This section explains what generative AI is, the applications of generative AI in government and the limitations of generative AI and LLMs.

It supports: Principle 1: You know what generative AI is and what its limitations are.

This section is centred on explaining generative AI and its limitations. You can find explanations of the core concepts around managing, choosing and developing generative AI solutions in the Building generative AI Solutions section.

What is generative AI?

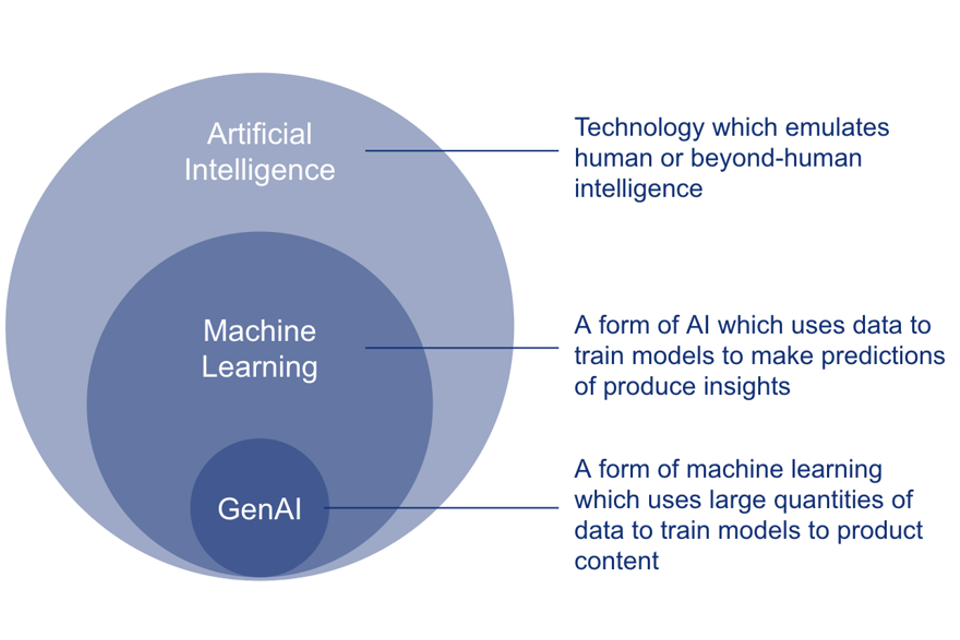

Generative AI is a form of Artificial Intelligence (AI) - a broad field which aims to use computers to emulate the products of human intelligence - or to build capabilities which go beyond human intelligence.

Unlike previous forms of AI, generative AI produces new content, such as images, text or music. It is this capability, particularly the ability to generate language, which has captured the public imagination, and creates potential applications within government.

Generative AI fits within the broader field of AI as shown below:

Models which generate content are not new, and have been a subject of research for the last decade. However, the launch of ChatGPT in November 2022 increased public awareness and interest in the technology, as well as triggering an acceleration in the market for usable generative AI products. Other well known generative AI applications include Claude, Bard, Bedrock, and Dall-E. These applications are a type of generative AI known as a Large Language Model (LLM).

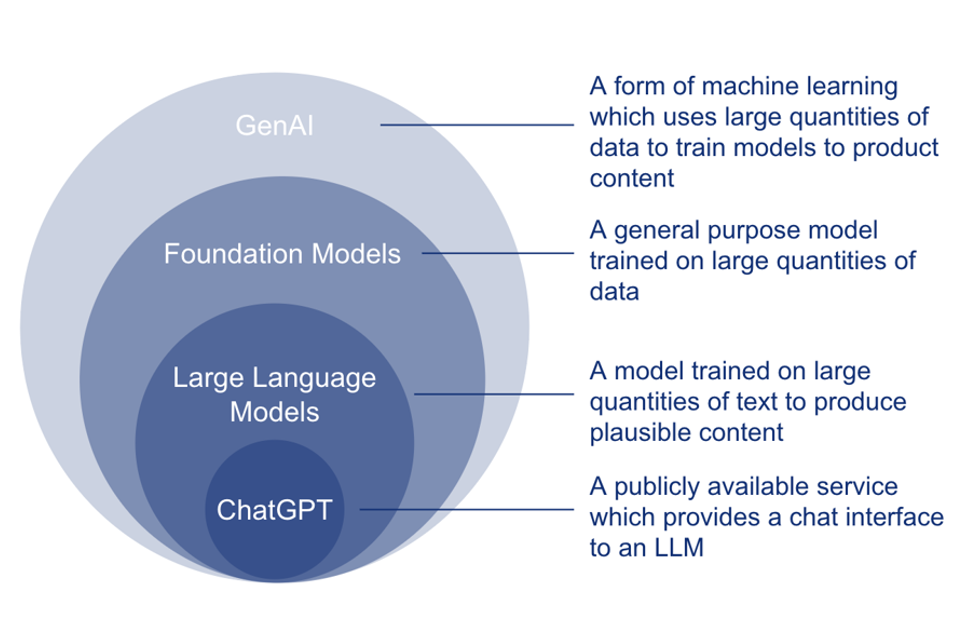

Public LLM interfaces fit within the field of generative AI as shown below:

Foundation models are large neural networks trained on extremely large datasets to produce responses which resemble those datasets. Foundation models may not necessarily be language-based, and they could have been trained on non-text data, e.g. biochemical information.

Large Language Models (LLMs) are foundation models specifically trained on text and natural language data to generate high-quality text based outputs.

User interfaces for foundation models & LLMs, are user-friendly ways that people without technical experience can use foundation models or LLMs. ChatGPT and Bard are examples of these, at present they are mostly accessed by tool-specific URLs, but they are likely to be embedded into other consumer software and tools in the near future.

Generative AI works by using large quantities of data, often harvested from the internet, to train a model in the underlying patterns and structure of that data. After many rounds of training, sometimes involving machines only, sometimes involving humans, the model is capable of generating new content, similar to the training examples.

When a user provides a prompt or input, the AI evaluates the likelihood of various possible responses based on what it has learned from its training data. It then selects and presents the response that has the highest probability of being the right fit for the given prompt. In essence, it uses its training to choose the most appropriate response for the user’s input.

Applications of generative AI in government

Despite their limitations, the ability of LLMs to process and produce language is highly relevant to the work of government, and could be used to:

- Speed up delivery of services: retrieving relevant organisational information faster to answer citizen digital queries or routing email correspondence to the right parts of the business.

- Reduce staff workload: suggesting first drafts of routine email responses or computer code to allow people more time to focus on other priorities.

- Perform complicated tasks: helping to review and summarise huge amounts of information.

- Improve accessibility of government information: improving the readability and accessibility of information on webpages or reports.

- Perform specialist tasks more cost-effectively: summarising documentation that contains specialist language like financial or legal terms, or translating a document into several different languages.

However, LLMs and other forms of generative AI still have limitations: you should make sure that you understand these, and that you build appropriate testing and controls into any generative AI solutions.

Limitations of generative AI and LLMs

LLMs predict the next word in a sequence: they don’t understand the content or meaning of the words beyond how likely they are to be used in response to a particular question. This means that even though LLMs can produce plausible responses to requests, there are limitations on what they can reliably do.

You need to be aware of these limitations, and have checks and assurance in place when using generative AI in your organisation.

- Hallucination (also called confabulation): LLMs are primarily designed to prioritise the appearance of being plausible rather than focusing on ensuring absolute accuracy, frequently resulting in the creation of content that appears plausible but may actually be factually incorrect.

- Critical thinking and judgement: Although LLMs can give the appearance of reasoning, they are simply predicting the next most plausible word in their output, and may produce inaccurate or poorly-reasoned conclusions.

- Sensitive or ethical context: LLMs can generate offensive, biased, or inappropriate content if not properly guided, as they will replicate any bias present in the data they were trained on.

- Domain expertise: Unless specifically trained on specialist data, LLMs are not true domain experts. On their own, they are not a substitute for professional advice, especially in legal, medical, or other critical areas where precise and contextually relevant information is essential.

- Personal experience and context: LLMs lack personal experiences and emotions. Although their outputs may appear as if they come from a person, they do not have true understanding or a consciousness.

- Dynamic real-time information retrieval: LLMs do not always have real-time access to the Internet or data outside their training set. However, this feature of LLM products is changing: as of October 2023, ChatGPT, Bard and Bing have been modified to include access to real-time Internet data in their results.

- Short-term memory: LLMs have a limited context window. They might lose track of the context of a conversation if it’s too long, leading to incoherent responses.

- Explainability: Generative AI is based on neural networks, which are so-called ‘black boxes’. This makes it difficult or impossible to explain the inner workings of the model which has potential implications if in the future you are challenged to justify decisioning or guidance based on the model.

These limitations mean that there are types of use cases where you should currently avoid using generative AI, such as safety-of-life systems or those involving fully automated decision-making which affects individuals.

However, the capabilities and limitations of generative AI solutions are rapidly changing, and solution providers are continuously striving to overcome these limitations. This means that you should make sure that you understand the features of the products and services you are using and how they are expected to change.

Building generative AI solutions

This section outlines the practical steps you’ll need to take in building generative AI solutions, including defining the goal, building the team, creating the generative AI support structure, buying generative AI and building the solution.

It supports:

- Principle 1: You know what generative AI is and what its limitations are

- Principle 3: You know how to keep generative AI tools secure

- Principle 4: You have meaningful human control at the right stage

- Principle 5: You understand how to manage the full generative AI lifecycle

- Principle 6: You use the right tool for the job

- Principle 8: You work with commercial colleagues from the start

- Principle 9: You have the skills and expertise needed to build and use generative AI

However, following the guidance in this section is only part of what is needed to build generative AI solutions: you also need to make sure that you are using generative AI safely and responsibly.

Defining the goal

Like all technology, using generative AI is a means to an end, not an objective in itself. Whether planning your first use of generative AI or a broader transformation programme, you should be clear on the goals you want to achieve and particularly, where you could use generative AI.

Goals for the use of generative AI may include improved public services, improved productivity, increased staff satisfaction, increased quality, cost savings and risk reduction. You should make sure you know which goal you are seeking, and how you will measure outcomes.

Identifying use cases

When thinking about how you could leverage generative AI in your organisation you need to consider the possible situations or use cases. The identification of potential use cases should be led by business needs and user needs, rather than directed by what the technology can do. Encourage business units and users to articulate their current challenges and opportunities. Take the time to thoroughly understand users and their needs as per the Service Manual to make sure you are solving the right problems. Try to focus on use cases that can only be solved by generative AI or where generative AI offers significant advantages above existing techniques.

The use of generative AI is still evolving, but the most promising use cases are likely to be those which aim to:

- Support digital enquiries: enable citizens to express their needs in natural language online, and help them find the content and services which are most helpful to them.

- Interpret requests: analyse correspondence or voice calls to understand citizens’ needs, and route their requests to the place where they can best get help.

- Enhanced search: quickly retrieving relevant organisational information or case notes to help answer citizen’s queries.

- Synthesise complex data: help users to understand large amounts of data and text, by producing simple summaries.

- Generate output: produce first drafts of documents and correspondence.

- Assist software development: support software engineers in producing code, and understanding complex legacy code.

- Summarise text and audio: converting emails and records of meetings into structured content, saving time in producing minutes and keeping records.

- Improve accessibility: support conversion of content from text to audio, and translation between different languages.

Use cases to avoid

Given the current limitations of generative AI, there are many use cases where its use is not yet appropriate, and which should be avoided:

- Fully automated decision-making: any use cases involving significant decisions, such as those involving someone’s health or safety, should not be made by generative AI alone.

- High-risk /high-impact applications: generative AI should not be used on its own in high-risk areas which could cause harm to someone’s health, safety, fundamental rights, or to the environment.

- Low-latency applications: generative AI operates relatively slowly compared to other computer systems, and should not be used in use cases where an extremely rapid, low-latency response is required.

- High-accuracy results: generative AI is optimised for plausibility rather than accuracy, and should not be relied on as a sole source of truth, without additional measures to ensure accuracy.

- High-explainability contexts: like other solutions based on neural networks, the inner workings of a generative AI solution may be difficult or impossible to explain, meaning that it should not be used where it is essential to explain every step in a decision.

- Limited data contexts: The performance of generative AI depends on large quantities of training data. Systems that have been trained on limited quantities of data, for example in specialist areas using legal or medical terminology, may produce skewed or inaccurate results.

This list is not exhaustive: you should make sure that you understand the limitations of generative AI, as well as the features and roadmap of the products and services you are using.

Practical recommendations

- Define clear goals for your use of generative AI, and ensure they are consistent with your organisation’s AI roadmap.

- Select use cases which meet a clear need and fit the capabilities of generative AI.

- Understand the limitations of generative AI, and avoid high-risk use cases.

- Find out what use cases other government organisations are considering and see if you can share information or reuse their work.

Building the team

While public-facing generative AI services such as ChatGPT are easy to use and access, building production-grade solutions which underpin services to citizens requires a range of skills and expertise.

You should aim to build a multi-disciplinary team which includes:

- Business leaders and experts who understand the context and impact on citizens and services.

- Data scientists who understand the relevant data, how to use it effectively, and how to build/train and test models.

- Software engineers who can build and integrate solutions.

- User researchers and designers who can help understand user needs and design compelling experiences.

- Support from legal, commercial and security colleagues, as well as ethics and data privacy experts who can help you make your generative AI solution safe and responsible.

You should ensure that you not only have the team in place to build your generative AI solution, but that you have the capability to operate your solution in production.

As well as building a team which contains the right skills, you should strive to ensure that your team includes a diversity of groups and viewpoints, to help you stay alert to risks of bias and discrimination.

Generative AI is a new technology, and even if you have highly experienced experts in your team, they will likely need to acquire new skills.

Acquiring skills

The broad foundational skills required for working in the digital space are outlined in the digital, data and technology capability framework including data roles, software development and user-centred design. To help you acquire the more specific skills needed to build and run generative AI solutions, we have defined a set of open learning resources available to all civil servants from within Civil Service Learning:

- Generative AI - Introduction: in this course you will learn what generative AI is, what the main generative AI applications are, and their capabilities and potential applications across various domains. It will also cover the limits and risks of generative AI technologies, including ethical considerations.

- Generative AI - Risks and ethics: in this course you will learn about the generic risks and technical limitations of AI technologies. You will consider the ethical implications of using AI, including the issues of bias, fairness, transparency and potential misuse. The course also includes the dos and don’ts of using generative AI in government.

- Generative AI - Tools and applications: in this course you will learn about the most important generative AI tools and their functionalities.

- Generative AI - Prompt engineering: in this course you will learn what prompt engineering is and how it can be used to improve the accuracy of generative AI tools.

- Generative AI - Strategy and governance: in this course you will learn how to evaluate the business value of AI and assess its potential impact on organisational culture and governance to develop a holistic AI strategy.

- Generative AI - Technical curriculum: in this course you will learn about the functionalities of various AI technologies and cloud systems, including copilots. You will also consider how to address technical and innovation challenges concerning the implementation and training of generative AI to generate customised outcomes.

A series of off-the-shelf courses on more specific aspects of generative AI has been made available on Prospectus online through the Learning Framework.

You should tailor your learning plan to meet the needs of five groups of learners:

- Beginners: All civil servants who are new to generative AI and need to gain an understanding of its concepts, benefits, limitations and risks. The suggested courses provide an introduction to generative AI, and do not require any previous knowledge.

- Operational delivery and policy professionals: Civil servants who primarily use generative AI for information retrieval and text generation purposes. The recommended resources provide the necessary knowledge and skills to make effective and responsible use of appropriate generative AI tools.

- Digital and technology professionals: Civil servants with advanced digital skills who work on the development of generative AI solutions in government. The suggested learning opportunities address the technical aspects and implementation challenges associated with fostering generative AI innovation.

- Data and analytics professionals: Civil servants who work on the collection, organisation, analysis and visualisation of data. The recommended resources focus on the use of generative AI to facilitate automated data analysis, the synthesis of complex information, and the generation of predictive models.

- Senior Civil Servants: Decision-makers who are responsible for creating a generative AI-ready culture in government. These resources and workshops help understand the latest trends in generative AI, and its potential impact on organisational culture, governance, ethics and strategy.

Practical recommendations

- Make full use of the training resources available, including those available on Civil Service Learning.

- Build a multi-disciplinary team with all the expertise and support you need.

Creating the generative AI support structure

As generative AI is a new technology, you should make sure that you have the structures in place to support its adoption. These structures do not need to be fully mature before your first project: indeed, your experience in your first project will shape the way you organise these structures. However, you should ensure that you have sufficient control to make your use of generative AI safe and responsible.

The supporting structures required for effective generative AI adoption are the same as those required to support the broader adoption of other forms of AI. If your organisation is already using other forms of AI, these structures may already be in place.

If you do not already have them in place, you should consider establishing:

- AI strategy and adoption plan: a clear statement of the way that you plan to use AI within your organisation, including impact on existing organisation structures and change management plans.

- AI principles: a simple set of top level principles which embody your values and goals, and which can be followed by all people building solutions.

- AI governance board: a group of senior leaders and experts to set principles, and to review and authorise uses of AI which fit these principles.

- Communication strategy: your approach for engaging with internal and external stakeholders, to gain support, share best practice and show transparency.

- AI sourcing and partnership strategy: definition of which capabilities you will build within your own organisation and which you will seek from partners.

Practical recommendations

- Identify the support structures you need for your level of maturity and adoption.

- Reuse support structures which are already in place for AI and other technologies.

- Adapt your support structures based on practical experience.

Buying generative AI

The generative AI market is still new and developing engagement with commercial colleagues is particularly important to discuss partners, pricing, products and services.

Crown Commercial Service can guide you through existing guidance, routes to market, specifying your requirements, and[running the procurement process. They can also help you navigate procurement in an emerging market and regulatory and policy landscape, as well as ensure that your procurement is aligned with ethical principles.

Existing guidance

There is detailed guidance to support the procurement of AI in the public sector. You should familiarise yourself with this guidance and make sure you’re taking steps to align with best practice.

-

Guidelines for AI procurement - provides a summary of best practice when buying AI technologies in government:

- Preparation and planning: Getting the right expertise, data assessments and governance, AI impact assessment and market engagement

- Publication: Problem statements, specification, avoiding vendor lock-in

- Selection, evaluation and award: Setting robust criteria, ensuring you have the correct expertise

- Contract implementation and ongoing management: Managing your service, testing for security and how to handle end of life considerations

- Digital, Data & Technology (DDaT) playbook - provides general guidance on sourcing and contracting for digital and data projects and programmes, which all central government departments and their arms length bodies are expected to follow on a ‘comply or explain’ basis. It includes specific guidance on AI and machine learning, as well as Intellectual Property Rights (IPR).

- Sourcing playbook - defines the commercial process as a whole and includes key policies and guidance for making sourcing decisions for the delivery of public services.

- Rose Book - provides guidance on managing and exploiting the wider value of knowledge assets (including software, data and business processes). Annex D contains specific guidance on managing these in procurement.

Routes to market

Consider the available routes to market and commercial agreements, and determine which one is best to run your procurement through based on your requirements.

There are a range of routes to market to purchase AI systems. Depending upon the kind of challenges you’re addressing, you may prefer to use a Framework or a Dynamic Purchasing System (DPS). A ‘Find a Tender Service’ (FTS) procurement route also exists which may be an option for bespoke requirements or contractual terms, or where there is no suitable standard offering.

Crown Commercial Service (CCS) offers a number of compliant Frameworks and DPSs for the public sector to procure AI.

A summary of the differences between a Framework agreement and DPS is provided below, with further information available at www.crowncommercial.gov.uk, and more information on use of frameworks in the Digital, Data & Technology (DDaT) Playbook.

| Category | Framework | DPS |

|---|---|---|

| Supplier access | Successful suppliers are awarded to the framework at launch. Closed to new supplier registrations. Prime suppliers can request to add new subcontractors. | Open for new supplier registrations at any time. |

| Structure | Often divided into lots by product or service type. | Suppliers filterable by categories. |

| Compliance | Thorough ongoing supplier compliance checks carried out by CCS, meaning buyers have less to do at call-off. (excluding GCloud) | Basic compliance checks are carried out by CCS, allowing the buyer to complete these at the call-off. |

| Buying options | Various options, including direct award, depending on the agreements. | Further competition only. |

A number of CCS agreements include AI within their scope, for example:

Dynamic Purchasing Systems:

Frameworks:

- Big Data & Analytics

- GCloud 13

- Technology Products & Associated Services 2

- Technology Services 3

- Back Office Software

- Cloud Compute 2

In addition to commercial agreements, CCS has signed a number of Memorandum of Understanding (MoU) with suppliers. These MoUs set out preferential pricing and discounts on products and services across the technology landscape, including cloud, software, technology products and services and networks. MoU savings can be accessed through any route to market.

To find out more or for support, please contact [email protected].

Specifying Your requirements

When buying AI products and services, you will need to document your requirements to tell your suppliers what you need. Read the CCS guide on ‘How to write a specification’ for more details.

When drafting requirements for generative AI, you should:

- Start with your problem statement

- Highlight your data strategy and requirements

- Focus on data quality, bias (mitigation) and limitations

- Underline the need for you to understand the supplier’s AI approach

- Consider strategies to avoid vendor lock-in

- Apply the data ethics framework principles and checklist

- Mention any integration with associated technologies or services

- Consider your ongoing support and maintenance requirements

- Consider data format of your organisation and provide suppliers with dummy data where possible

- Provide guidance on budget to consider hidden costs

- Consider who will have intellectual property rights if new software is developed.

- Consider any acceptable liabilities and appetite for risk, to match against draft terms & conditions, once provided

For further information and detail, read the Selection, Evaluation and Award section of the Guidelines for AI Procurement.

Running your procurement

Having prepared your procurement strategy, defined your requirements, and selected your commercial agreement, you can now proceed to conduct a ‘call-off’ in accordance with the process set out in the relevant commercial agreement. The commercial agreement will specify whether you can ‘call-off’ by further competition, a direct award or either.

CCS offers buyer guidance tailored to each of its agreements, which describe each step in detail, including completing your order contract and compiling your contract.

Detailed guidance on planning and running procurements is available in the Digital, Data & Technology (DDaT) Playbook.

Procurement in an emerging market

Commercial agreements

AI is an emerging market. As well as rapidly evolving technology, there are ongoing changes in the supply base and the products and services it offers. Dynamic Purchasing Systems (DPSs) offer flexibility for new suppliers to join, which often complement these dynamics well for buyers.

Any public sector buyers interested in shaping Crown Commercial Service’s (CCS) longer term commercial agreement portfolio should express their interest via [email protected].

Regulation and policy

Regulation and policy will also evolve to keep pace. However, there are already a number of legal and regulatory provisions which are relevant to the use of AI technologies, including:

- UK data protection law: regulation around automated decision making, processing personal data, processing for the purpose of developing and training AI technologies. In November 2022, a new Procurement Policy Note was published to provide an update to this: PPN 03/22 Updated guidance on data protection legislation.

- Online Safety Act: provisions concerning design and use of algorithms are to be included in a new set of laws to protect children and adults online. It will make social media companies more responsible for their users’ safety on their platforms.

- A pro-innovation approach to AI regulation: this white paper published in March 2023, sets out early steps towards establishing a regulatory regime for AI. The white paper outlines a proportionate pro-innovation framework, including five principles to guide responsible AI innovation in all sectors.

- Centre for Data Ethics and Innovation (CDEI) AI assurance techniques: The portfolio of AI assurance techniques has been developed by the Centre for Data Ethics and Innovation (CDEI), initially in collaboration with techUK. The portfolio is useful for anybody involved in designing, developing, deploying or procuring AI-enabled systems. It shows examples of AI assurance techniques being used in the real-world to support the development of trustworthy AI.

Further guidance is also from the Information Commissioner’s Office (ICO), Equality and Human Rights Commission (EHRC), Medicines and Healthcare products Regulation Authority (MHRA) and the Health and Safety Executive (HSE).

Aligning procurement and ethics

It’s important to consider and factor in data ethics into your commercial approach from the outset. A range of guidance relating specifically to AI and data ethics is available to provide guidance for public servants working with data and/or AI. This collates existing ethical principles, developed by government and public sector sector bodies.

- The Data ethics framework outlines appropriate and responsible data use in government and the wider public sector. The framework helps public servants understand ethical considerations, address these within their projects, and encourages responsible innovation.

- Data ethics checklist: CCS has created a checklist for suppliers to follow that will mitigate bias and ensure diversity in development teams, as well as transparency/ interpretability and explainability of the results.

- The Public Sector Contract (PSC) includes a number of provisions relating to AI and data ethics.

For further information, please see Data protection and privacy, Ethics and Regulation and policy sections.

Practical recommendations

- Engage your commercial colleagues from the outset.

- Understand and make use of existing guidance.

- Understand and make use of existing routes to market, including Frameworks, Dynamic Purchasing Systems and Memoranda of Understanding.

- Specify clear requirements and plan your procurement carefully.

- Seek support from your commercial colleagues to help navigate the evolving market, regulatory and policy landscape.

- Ensure that your procurement is aligned to ethical principles.

Building the solution

Core concepts

Generative AI provides a wide breadth of capability, and a key part of designing and building a generative AI solution will be to get it to behave accurately and reliably. This section sets out key concepts that you need to understand to design and build generative AI solutions that meet your needs.

- Prompts are the primary input provided to an LLM. In the most simple case, a prompt may only be the user-prompt. In production systems, a prompt will have additional parts, such as meta-prompts, the chat history, and reference data to support explainability.

- Prompt engineering describes the process of adjusting LLM input to improve performance and accuracy. In its simplest form it may be testing different user-prompt formulations. In production systems, it will include adjustments, such as adding meta-prompts, provision of examples and data sources, and sometimes parameter tuning.

- User-prompts are whatever you type into e.g. a chat box. They are generally in the everyday natural language you use, e.g. ‘Write a summary of the generative AI framework’.

- Meta-prompts (also known as system prompts) are higher-level instructions that help direct an LLM to respond in a specific way. They can be used to instruct the model on how to generate responses to user-prompts, provide feedback, or handle certain types of content.

- Embedding is the process of transforming information such as words, or images into numerical values and relationships that the computer algorithms can understand and manipulate. Embeddings are typically stored in vector databases (see below).

- Retrieval Augmentation Generation (RAG) is a technique which uses reference data stored in vector databases (i.e. the embeddings) to ground a model’s answers to a user’s prompt. You could specify that the model cites its sources when returning information.

- Vector databases index and store data such as text in an indexed format easily searchable by models. The ability to store and efficiently retrieve information has been a key enabler in the progress of generative AI technology.

- Grounding is the process of linking the representations learned by the AI models to real-world entities or concepts. It is essential for making AI models understand and relate its learned information to real-world concepts. In the context of large language models, grounding is often achieved by a combination of prompt engineering, parameter tuning, and RAG.

- Chat history is a collection of prompts and responses. It is limited to a session. Different models may allow different session sizes. For example, Bing search sessions allow up to 30 user-prompts. The chat history is the memory of LLMs. Outside of the chat history LLMs are “stateless”. That means the model itself does not store chat history. If you wanted to permanently add information to a model you would need to fine-tune an existing model (or train one from scratch).

- Parameter tuning is the process of optimising the performance of the AI model for a specific task or data set by adjusting configuration settings.

- Model fine-tuning is the process of limited re-training of a model on new data. It can be done to enforce a desired behaviour. It also allows us to add data sets to a model permanently. Typically, fine-tuning will adjust only some layers of the model’s neural network. Depending on the information or behaviour to be trained, fine-tuning may be more expensive and complicated than prompt engineering. Experience with model tuning in HMG is currently limited and we are looking to expand on this topic in a future iteration of this framework.

- Open source models are publicly accessible, and their source code, architecture, and parameters are available for examination and modification by the broader community.

- Closed source models on the other hand, are proprietary and not openly accessible to the public. The inner workings and details of these models are kept confidential and are not shared openly.

Practical recommendations

Learn about generative AI technology; read articles, watch videos and undertake short courses. See section on acquiring skills.

Patterns

Generative AI can be accessed and deployed in many different ways or patterns. Each pattern provides different benefits and presents a different set of security challenges, affecting the level of risk that you must manage.

This section explains patterns and approaches as the main ways that you are likely to use and encounter generative AI, including:

- Public generative AI applications and web services

- Embedded generative AI applications

- Public generative AI APIs

- Local development

- Cloud solutions

Public generative AI applications and web services

Applications like OpenAI’s ChatGPT, Google’s Bard, Microsoft’s Bing search, are the consumer side of generative AI. They have a simple interface, where the user types in a text prompt and is presented with a response. This is the simplest approach, with the benefit that users are already familiar with these tools.

Many LLM providers offer web services free of charge, allowing users to experiment and interact with their models. Generally, you’ll just need an email address to sign up.

There are a few things you’ll need to consider before signing up to a generative AI web service:

- You must make sure you’re acting in line with the policies of your organisation.

- While the use of these web services is free of charge, you should be aware that any information provided to these services may be made publicly available and/or used by the provider. Make sure you have read and understood the terms of service.

- Generative AI web services and applications are often trained using unfiltered material on the internet. This means that they can reproduce any harmful or biassed material that they have found online. You can learn more about bias and how to use generative AI safely in the Using generative AI safely and responsibly section.

- Generative AI web services and applications may produce unreliable results, you should not trust any factual information provided without a validated reference.

Embedded generative AI applications

LLMs are now being embedded, or integrated, into existing and popular products; embedded generative AI allows people to use language-based prompts to ask questions about their organisation’s data, or for specific support on a task.

Embedded generative AI tools provide straightforward user interfaces in products that people are already familiar with. They can be a very simple way to bring Generate AI into your organisation. Examples of embedded generative AI tools include:

- Adobe Photoshop Generative Fill tool: helps with image editing by adding or removing components.

- Github Copilot and AWS CodeWhisperer: helps to develop code by providing auto-complete style suggestions.

- AWS ChatOps: an AI assistant that can help to manage an AWS cloud environment.

- Microsoft 365 Copilot: an AI assistant that can support use of Microsoft products.

- Google Duet AI: an AI assistant that can support the use of Google products including writing code.

You must be certain you understand the scope of access and data processing of these services. Most enterprise licenced services will assure your control over your data. However, supporting services like abuse monitoring may still retain information for processing by the vendors.

If data sovereignty is a concern, you must also clarify the data processing geolocation with a vendor.

LLMs that are integrated into organisations existing enterprise licences may have access to the data that’s held by your organisation by default. Before enabling a service, you must understand what data an embedded generative AI tool has access to in your organisation.

The use of code assistance tools requires the addition of integrated development environment (IDE) or editor plugins. You must be certain to only use official plugins. If you use a coding assistant to generate a complex algorithm, it may be necessary to verify the licensing status manually by searching for the code on the internet to double-check you’re not inadvertently violating any copyrights or licences.

Public generative AI APIs

Most big generative AI applications will offer an Application Programming Interface (API). This allows developers to integrate generative AI capabilities directly into solutions they build. It takes only a few lines of code to build a plugin to extend the features of another application.

As with web services, signing up is typically required to obtain an access token. You need to be aware of the terms and conditions of using the API.

By using an API your organisation’s data is still sent over to the provider, and you must be sure that you are comfortable with what happens to it before using an API.

The benefit of using APIs is that you will have greater control over the data. You can intercept the data being sent to the model and also process the responses before returning them to the user. This allows you to e.g.:

- Include privacy enhancing technology (PET) to prevent data leakage

- Add content filters to sanitise the prompts and responses

- Log and audit all interactions with the model

However, you will also need to perform additional tasks commonly performed by the user interface of web and embedded services, such as:

- Maintaining a session history

- Maintaining a chat history

- Developing and maintaining meta-prompts and general prompt engineering

Local development

For rapid prototyping and minimum viable product (MVP) studies, the development on personal or local hardware (i.e. sufficiently powerful laptops) may be a feasible option.

Development best practices like distributed version control systems, automated deployment, and regular backups of development environments are particularly important when working with personal machines.

When working on local development you should consider containerisation and cloud-native technology paradigms like twelve-factor applications. These will help when moving solutions from local hardware into the cloud.

Please note that the recommendation for production systems remains firmly with fully supported Cloud environments.

Cloud solutions

Cloud services provide similar functionality to public and paid-for APIs, often with a familiar web interface with useful tools for experimentation. In addition to compliance with HMG’s Cloud First Policy, their key advantages is that they allow increased control over your data. You can access cloud service providers’ LLMs by signing up through your organisation’s AWS, Microsoft or Google enterprise account.

When establishing your generative AI cloud service, make sure the development environment is compliant with your organisations’ data security policies, governmental guidelines and the Service Standard.

If your organisation and/or use case requires all data to remain on UK soil, you might need to plan in additional time for applying for access to resources within the UK as these may be subject to additional regulation by some providers. Technical account managers and solution architects supporting your enterprise account will be able to help with this step.

Practical recommendations

- Learn about the different patterns and approaches, evaluate them against the needs of your project and users.

- Refer to your organisation’s policies before exploring any use of public generative AI applications or APIs.

- Be aware that any information provided to generative AI web services may be made publicly available and/or used by the provider. Make sure you have read and understood their terms of service.

- Check licensing and speak to suppliers to understand the capabilities and data collection and storage policies for their services; including geographic region if data sovereignty is a concern.

- Before enabling embedded generative AI tools understand what organisational data they would have access to.

- Use only official code assistance plugins.

- Learn from other government organisations who have implemented generative AI solutions.

Picking your tools

In order to develop and deploy generative AI systems you will need to pick the right tools and technology for your organisation. Deciding on the best tools will depend on your current IT infrastructure, level of expertise, risk-appetite and the specific use cases you are supporting.

Decisions on your development stack.

There are a number of technology choices you will need to consider when building your generative AI solutions, including the most appropriate IT infrastructure, which programming languages to use and the best large language model.

- Infrastructure: You should select a suitable infrastructure environment. Microsoft, Google or AWS may be appropriate, depending on your current IT infrastructure or existing partnerships and expertise in your teams. Alternatively, it may be that a specific large language model is considered most appropriate for your particular use case, leading to a particular set of infrastructure requirements.

- As models change and improve, the most appropriate one for your use case may also change, so try to build-in the technical agility to support different models or providers.

Items for consideration include:

- Use of cloud services vs local development. You should be aware of the HMG’s Cloud First Policy, but understand that local development may be feasible for experimentation. Using container technology from the start can help you to move your solution between platforms with minimal overhead.

- Web Services, access modes such as APIs and associated. frameworks, see section on patterns.

- Front-end / user interface and back-end solutions.

- Programming languages.

- Data storage (e.g. Binary Large Object (BLOB) stores and vector stores)

-

Access logging, prompt auditing, and protective monitoring.

-

Programming language: In the context of AI research, Python is the most widely used programming language. While some tools and frameworks are available in other languages, for example LangChain is also available in JavaScript, it is likely that most documentation and community discussion is based on Python examples. If you’re working on a use case that has focused interaction with a generative AI model API endpoint only, the choice of programming language is less important.

- Frameworks: generative AI frameworks are software libraries or platforms that provide tools, APIs, and pre-built models to develop, train, and deploy generative AI models. These frameworks implement various algorithms and architectures, making it more convenient for you to experiment with and create generative models. Example frameworks include LangChain, Haystack, Azure Semantic Kernel and Google Vertex AI pipelines. AWS Bedrock similarly provides an abstraction layer to interact with varied models using a common interface. These frameworks have their own strengths and unique features. However, you should also be aware that their use may increase the complexity of your solution.

The choice of a generative AI framework might depend on:

- Your specific project requirements.

- The familiarity of the developer with the framework and programming language.

- The size and engagement of the community support around it.

Things to consider when evaluating Large Language Models (LLMs)

There are many models currently available so you need to select the most appropriate for your particular use case. The Stanford Center for Research on Foundation Models (CRFM) provides the Holistic Evaluation of Language Models (HELM) to benchmark different models against criteria such as accuracy, robustness, fairness, bias, and toxicity; and can help you to compare the capabilities of a large number of language models. Here are some of the things you should consider:

- Capability: Depending on your use case, conversational foundation models may not be the best fit for you. If you have a domain-specific requirement in sectors like medical or security applications, pre-tuned, specialised models like Google PaLM-2-med and Google PaLM-2-sec may reduce the amount of work required to reach a certain performance level and time to production. Equally, if you’re mainly focused on indexing tasks, BERT-type models may provide better performance compared to GPT-style LLMs.

- Availability: At the time of writing, many LLMs are not available for general public use, or are locked to certain regions. One of the first things to consider when deciding on which model to use is whether implementation in a production environment is possible inline with your organisation’s policy requirements.

- Mode of deployment: Many LLMs are available via a number of different routes. For production applications the use of fully-featured cloud services or operation of open-source models in a fully controlled cloud environment will be a hard requirement for most if not all use cases.

- Cost: Most access to LLMs is charged by the number of tokens (roughly equal to the word count). If your generative AI tool is hosted in a cloud environment, you’ll have to pay additional infrastructure costs. While the operation of open-source models will not necessarily incur a cost per transaction, the operation of GPU-enabled instances is costly as well. Cloud infrastructure best practices like dynamic scaling and shutting down instances outside of working hours will help to reduce these costs.

- Context limits: LLMs often limit the maximum amount of tokens the model can process as a single input prompt. Factors determining the size of prompts are the context window of conversation (if included), the amount of contextual data included via meta-prompting and retrieval augmented generation as well as the expected size of user inputs.

- API Rate limits: Model providers impose limits on how frequently users can make requests through an API. This may be important if your use case leads to a high volume of requests. Software development best practices for asynchronous execution (such as use of contexts and queues) may help to resolve bottlenecks but will increase the complexity of your solution.

- Language capability: If your use case includes multilingual interaction with the model, or if you expect to operate with very domain-specific language using specific legal or medical terminology, you should consider the amount of relevant language-specific training the LLM has received.

- Open vs closed-source (training data, code and weights): If openness is important to your solution, you should consider whether all aspects of the model, including training data, neural network coding and model weights, are available as Open Source. Sites such as Hugging Face host a large collection of models and documentation. Examples of sites that provide open source low-code solutions include Databricks and MosaicML.

- Non-technical considerations: There may be data-protection, legal or ethical considerations which constrain or direct your choice of technology, for example an LLM may have been trained on copyrighted data, or to produce a procedurally fair decision-making system, one solution should be chosen over another.

Practical recommendations

- Select the simplest solutions that meet your requirements, aligned to your IT infrastructure.

- Understand the key characteristics of generative AI products and how they fit your needs, realising that these characteristics may change rapidly in a fast moving market.

- Speak to other government organisations to find out what they have done, to help inform your decisions.

- Conduct ‘well architected’ reviews at appropriate stages of the solutions’ lifecycle.

Getting reliable results

Generative AI technology needs to be carefully controlled and managed in order to ensure the models behave and perform in the way you want them to, reliably and consistently. There are a number of things you can do to help deliver high quality and reliable performance.

- Select your model carefully: In order to achieve a reliable, consistent and cost-effective implementation, the most appropriate model for a particular use case should be chosen.

- Design a clear interface and train users: Ensure your generative AI system is used as intended. Design and develop a useful and intuitive interface your users will interact with. Define and include any required user settings (for example the size of required response). Be clear about the design envelope for generative AI systems i.e. what it has been designed and built to do, and, more importantly, what its limitations are. Ensure your user community is trained in its proper use and fully understand its limitations.

- Evaluate input prompts: User inputs to the generative AI tool can be evaluated with a content filtering system to detect and filter inappropriate inputs. The evaluation of input using deterministic tools may be feasible and could reduce the amount of comparatively expensive calls to a LLM. Alternatively, calls to a smaller and/or classification-specialised LLM may be required. Make sure that the system returns a meaningful error to allow a user to adjust their prompt if rejected. There are some commercially available tools that can provide some of this functionality. Example checks include:

- Identify whether the prompt is abusive or malicious.

- Confirm the prompt is not attempting to jailbreak the LLM, for example by asking the LLM to ignore its safety instructions.

- Confirm no unnecessary Personally Identifiable Information (PII) has been entered.

- Ground your solution: If your use case is looking for the model to provide factual information - as opposed to just taking advantage of a models’ creative language capabilities - you should follow steps to ensure that its responses are accurate, for example by employing Retrieval Augmented Generation (RAG). With RAG you identify useful documentation then extract the important text, break it into ‘chunks’, convert them to ‘embeddings’ and send them to a ‘vector-database’. This relevant information can now be easily retrieved and integrated as part of the model responses.

- A key application of generative AI is working with your organisation’s private data, by enabling the model to access, understand and use the private data, insights and knowledge can be provided to users that is specific to their subject domain. There are different ways to hook a generative AI model into a private data source.

- You could train the model from scratch on your own private data, but this is costly and impractical. Alternatively, you can take a pre-trained model and further train it on your own private data. This is a process called fine-tuning, and is less expensive and time consuming than training a model from scratch.

- The easiest and most cost-efficient approach to augmenting your generative AI model with private data, is to use in-context learning, which means adding domain-specific context to the prompt sent to the model. The limitation here is usually the size of the prompt, and a way around this is to chunk your private data to reduce its size. Then a similarity search can be used to retrieve relevant chunks of text that can be sent as context to the model.

- Use prompt engineering: An important mechanism to shape the model’s performance and produce accurate and reliable results is prompt engineering. Developing good prompts and meta-prompts is an effective way to set the standards and rules for how the user requests should be processed and interpreted, the logical steps the model should follow and what type of response is required. For example, you could include the following:

- Set the tone for the interactions, for example request a chatbot to provide polite, professional and neutral language responses. This will also help to reduce bias.

- Set clear boundaries on what the generative AI tool can, and cannot, respond to. You could specify the requirement for a model to not engage with abusive or malicious inputs, but reject them and instead return an alternative, appropriate response.

- Define the format and structure of the desired output. For example asking for a boolean yes/no response to be provided in JSON format.

- Define guardrails to prevent the assistant from generating inappropriate or harmful content.

For further information see the deep dive section.

- Evaluate outputs: Once a model returns an output, it is important to ensure that its messaging is appropriate. Off-the-shelf content filters may be useful here, as well as classical or generative AI text-classification tools. Depending on the use case, a human might be required to check the output some or all of the time, although the expenditure of time and money to do this needs careful consideration. Accuracy and bias checks on the LLM responses prior to presentation to the user can be used to check and confirm:

- The response is grounded in truth with no hallucinations

- The response does not contain toxic or harmful information

- The response does not contain biased information

- The response is fair and does not unduly discriminate

- The user has permission to access the returned information

- Include humans: There are many good ways that humans can be involved in the development and use of generative AI solutions to help implement reliable and desired outcomes. Humans can be part of the development process to review input data to make sure it is high-quality, to assess and improve model performance and also to review model outputs. If there is a person within the processing chain preventing the system from producing uncontrolled, automated outputs this is called having a ‘human-in-the-loop’.

- Evaluate performance: In order to maintain the performance of the generative AI system, its performance should be continually monitored and evaluated by logging and auditing all interactions with the model.

- Conduct thorough testing to assess the functionality and effectiveness of the system. See section on testing generative AI solutions for further information.

- Record the input prompts and the returned responses.

- Collect and analyse metrics across all aspects of performance: including hallucinations, toxicity, fairness, robustness, and higher-level business key performance indicators.

- Evaluate the collected metrics and validate the model’s outputs against ground truth or expert judgement. Obtain user feedback to understand the usefulness of the returned response. This could be a simple thumbs-up indicator or something more sophisticated.

Practical recommendations

- Assume the model may provide you with incorrect information unless you build in safeguards to prevent it.

- Understand techniques for improving the reliability of models, and that these techniques are developing rapidly.

- Ground the generative AI system in real organisational data, if possible, to improve accuracy.

- Implement extensive testing to ensure the outputs are within expected bounds. It is very easy to develop a prototype, but can be very hard to produce a working and reliable production solution.

Testing generative AI solutions

Generative AI tools are not guaranteed to be accurate as they are designed to produce plausible and coherent results. They generate responses that have a high likelihood of being plausible based on the data that they have processed. This means that they can, and do, make errors. In addition to employing techniques to get reliable results, you should have a process in place to test them.

During the initial experimental discovery phases you should look to assess and improve the existing system until it meets the required performance, reliability and robustness criteria.

- Conduct thorough testing to assess the functionality and effectiveness of the system.

- Record the input prompts and the returned responses, and collect and analyse metrics across all aspects of performance including hallucinations, toxicity, fairness, robustness, and higher-level business key performance indicators.

- Evaluate the collected metrics and validate the model’s outputs against ground truth or expert judgement, obtaining user feedback if possible.

- Closely review the outcomes of the technical decisions made, the infrastructure and running costs and environmental impact. Use this information to continually iterate your solution.

Technical methods and metrics for assessing bias in generative AI are still being developed and evaluated. However, there are existing tools that can support AI fairness testing, such as IBM fairness 360, Microsoft FairLearn, Google What-If-Tool, University of Chicago Aequitas tool, and PyMetrics audit-ai. You should carefully select methods based on the use case, and consider using a combination of techniques to mitigate bias across the AI lifecycle.

Practical recommendations

Establish a comprehensive testing process and continue to test the generative AI solution throughout its use.

Data management

Good data management is crucial in supporting the successful implementation of generative AI solutions. The types of data you will need to manage include:

- Organisational grounding data: LLMs are not databases of knowledge, but advanced text engines.Their contents may also be out of date. To improve their performance and make them more reliable, relevant information can be used to ‘ground’ the responses, for example by employing Retrieval Augmented Generation.

- Reporting data: It is important to maintain documentation, including methodology, description of the design choices and assumptions. Keep records from any architecture design reviews. This can help to support the ability to audit the project and support the transparency of your use of AI. If possible collect metrics to help to estimate any efficiency savings, value to your business and to taxpayers and the return on investment.