The roadmap to an effective AI assurance ecosystem - extended version

Published 8 December 2021

Introduction

This extended Roadmap is designed to complement the CDEI’s Roadmap to an effective AI assurance ecosystem, which sets out the key steps, and the roles and responsibilities required to develop an effective, mature AI assurance ecosystem.

Whereas the short version of the roadmap is designed to be accessible as a quick read for decision makers, this extended version incorporates further research and examples to provide a more detailed picture of the ecosystem and the necessary steps forward.

Additionally, Chapter 1 of this extended roadmap offers further context on the AI assurance process, on delivering AI assurance, on the role of AI assurance in broader AI governance, and on the roles and responsibilities for AI assurance. Chapter 3 discusses some of the ongoing tensions and challenges in a mature AI assurance ecosystem.

This extended roadmap will be valuable for readers interested in finding out more information about how to build an effective, mature assurance ecosystem for AI.

Why we need AI assurance

Data-driven technologies, such as artificial intelligence (AI), have the potential to bring about significant benefits for our economy and society. AI systems offer the opportunity to make existing processes faster and more effective, and in some sectors offer new tools for decision-making, analysis and operations.

AI is being harnessed across the economy, helping businesses to improve their day-to-day operations, such as achieving more efficient and adaptable supply chain management. AI has also enabled researchers to make a huge leap forward in solving one of biology’s greatest challenges, the protein folding problem. This breakthrough could vastly accelerate efforts to understand the building blocks of cells, and could improve and speed up drug discovery. AI presents game-changing opportunities in other sectors too, through the potential for operating an efficient and resilient green energy grid, as well as helping to tackle misinformation on social media platforms.

However, AI systems also introduce risks that need to be managed. The autonomous, complex and scalable nature of AI systems (in particular, machine learning) poses risks beyond those of regular software. These features pose fundamental challenges to our existing methods for assessing and mitigating the risks of using digital technologies.

The autonomous nature of AI systems makes it difficult to assign accountability to individuals if harms occur. The complexity of AI systems often prevents users or affected individuals from explaining or understanding the link between a system’s output or decision and its causes, which further complicates the assignment of accountability. Finally, the scalability of AI makes it particularly difficult to define legitimate values and governance frameworks for a system’s operation, for example across social contexts or national jurisdictions.

As these technologies are more widely adopted, there is an increasing need for a range of actors to check that these tools are functioning as expected and to demonstrate this to others. Without being able to assess the trustworthiness of an AI system against agreed criteria, buyers or users of AI systems will struggle to trust them to operate effectively, as intended. Furthermore, they will have limited means of preventing or mitigating potential harms if a system is not in fact trustworthy.

Assurance as a service draws originally from the accounting profession, but has since been adapted to cover many areas such as cyber security and quality management. In these areas, mature ecosystems of assurance products and services enable people to understand whether systems are trustworthy. These products and services include: process and technical standards; repeatable audits; certification schemes; and advisory and training services. For example, in financial accounting, auditing services provided by independent accountancy firms enable an assurance user to have confidence in the trustworthiness of the financial information presented by a company.

AI assurance services have the potential to play a distinctive and important role within AI governance. It’s not enough to set out standards and rules about how we expect AI systems to be used. It is also important that we have trustworthy information about whether they are following those rules.

Assurance is important for assessing efficacy, for example through performance testing; for addressing compliance with rules and regulations, for example by performing an impact assessment to comply with data protection regulation; and for assessing more open-ended risks. In the latter category, rules and regulations cannot be relied upon to ensure that a system is trustworthy, so more individual judgement is required: for example, assessing whether an individual decision made by an AI system is ‘fair’ in a specific context.

By ensuring both trust in and the trustworthiness of AI systems, AI assurance will play an important enabling role in the development and deployment of AI, unlocking both the economic and social benefits of AI systems. Consumer trust in AI systems is crucial to widespread adoption, and trustworthiness is essential if systems are going to perform as expected and therefore bring the benefits we want without causing unexpected harm.

An effective AI assurance ecosystem is needed to coordinate appropriate responsibilities, assurance services, standards and regulations to ensure that those who need to trust AI have the sort of evidence they need to justify that trust. In other industries, we have seen healthy assurance ecosystems develop alongside professional services that support businesses, from traditional accounting to cybersecurity services. Encouraging a similar ecosystem to develop around AI in the UK would be a crucial boon to the economy.

For example, the UK’s cyber security industry employed 43,000 full-time workers, and contributed nearly £4bn to the UK economy in 2019. More recently, research commissioned by the Open Data Institute (ODI) on the nascent but buoyant data assurance market found that 890 data assurance firms are now working in the UK with 30,000 staff. The research, carried out by Frontier Economics and glass.ai, noted that 58% of these firms were incorporated in the last 10 years. Following this trend, AI assurance is likely to become a significant economic activity in its own right. AI assurance is an area in which the UK, with particular strengths in legal and professional services, has the potential to excel.

Purpose of this roadmap

The roadmap provides a vision of what a mature ecosystem for AI assurance might look like in the UK and how the UK can achieve this vision. It builds on the CDEI’s analysis of the current state of the AI assurance ecosystem and examples of other mature assurance ecosystems.

The first section of the roadmap looks at the role of AI assurance in ensuring trusted and trustworthy AI. We set out how assurance engagements can build justified trust in AI systems, drawing on insights from more mature assurance ecosystems, from product safety through to quality management and cyber security. We illustrate the structure of assurance engagements and highlight assurance tools relevant to AI and their applications for ensuring trusted and trustworthy AI systems. In the latter half of this section we zoom out to consider the role of assurance within the broader AI governance landscape and highlight the responsibilities of different actors for demonstrating trustworthiness and their needs for building trust in AI.

The second section sets out how an AI assurance ecosystem needs to develop to support responsible innovation and identifies six priority areas:

- Generating demand for assurance

- Supporting the market for assurance

- Developing standards

- The role of professionalisation and specialised skills

- The role of regulation

- The role of independent researchers

We set out the current state of the ecosystem and highlight the actions needed in each of these areas to achieve a vision for an effective, mature AI assurance ecosystem. Following this, we discuss the ongoing tensions that will need to be managed, as well as the promise and limits of assurance. We conclude by outlining the role that the CDEI will play in helping deliver this mature AI assurance ecosystem.

What have we done to build this view?

We have combined multiple research methods to build the evidence and analysis presented in this roadmap. We carried out literature and landscape reviews of the AI assurance ecosystem to ground our initial thinking and performed further desk research on a comparative analysis of mature assurance ecosystems. Based on this evidence, we drew on multidisciplinary research methods to build our analysis of AI assurance tools and the broader ecosystem.

Our desk-based research is supported by expert engagement through workshops, interviews and discussions with a diverse range of expert researchers and practitioners. We have also drawn on practical experience from assurance pilot projects with private sector organisations adopting or considering deploying AI systems (in partnership with researchers from University College London), as well as on the CDEI’s work with public sector organisations across recruitment, policing and defence.

The role of AI assurance

What is AI assurance and why do we need it?

Building and maintaining trust is crucial to realising the benefits of AI systems. If organisations don’t trust AI systems, they will be less willing to adopt these technologies because they don’t have the confidence that an AI system will actually work or benefit them. They might also hold back from adoption for fear of reputational damage and public backlash. Without trust, consumers will also be cautious about using data-driven technologies, as well as sharing the data that is needed to build them.

The difficulty is, however, that these stakeholders often have limited information, or lack the appropriate specialist knowledge to check and verify others’ claims to understand whether AI systems are actually deserving of their trust.

This is where assurance is important. Being assured is about having confidence or trust in something, for example a system or process, documentation, a product or an organisation. Assurance engagements require providing evidence - often via a trusted independent third party - to show that the AI system being assured is reliable and trustworthy.

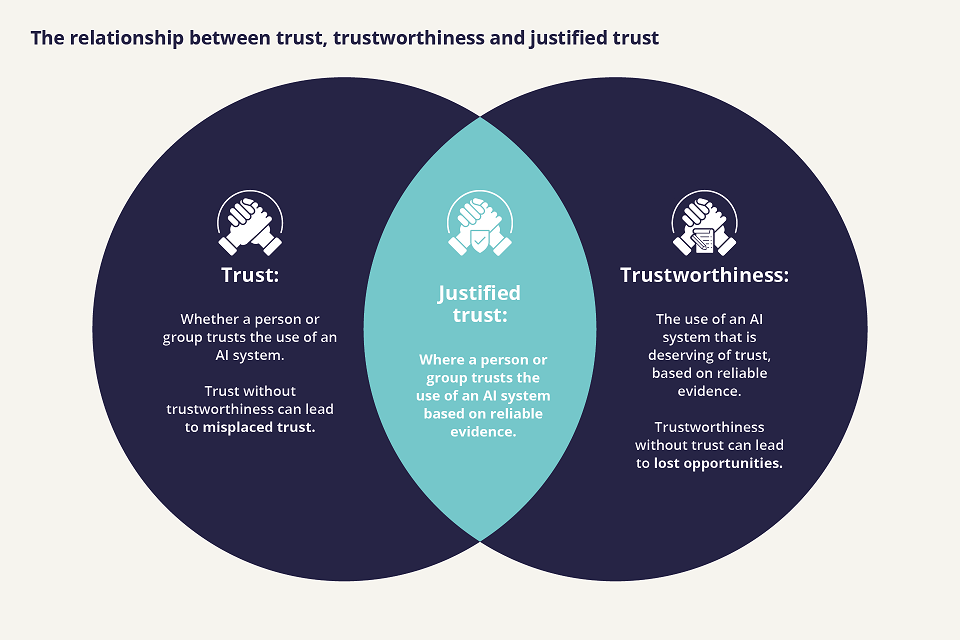

Trust and trustworthiness

The distinction between trust and trustworthiness is important here: when we talk about trustworthiness, we mean whether something is deserving of people’s trust. On the other hand, when we talk about trust, we mean whether something is actually trusted by someone. Someone might trust something, even if it is not in fact trustworthy.

A successful relationship built on justified trust requires both trust and trustworthiness:

Trust without trustworthiness = misplaced trust. If we trust technology or the organisations deploying a technology when they are not in fact trustworthy, we incur potential risks by misplacing our trust.

Trustworthy but not trusted = (unjustified) mistrust. If we fail to trust a technology or organisation which is in fact trustworthy, we incur the opportunity costs of not using good technology.

Fulfilling both of these requirements produces justified trust.
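The combinations of trust and trustworthiness described above can be summarised as a simple lookup. The Python sketch below is illustrative only: the enum and function names are hypothetical, and the fourth case (an untrustworthy system that is also not trusted) is not named in this roadmap, so the label ‘justified distrust’ is our assumption.

```python
from enum import Enum


class TrustOutcome(Enum):
    """Outcomes of combining trust and trustworthiness."""
    JUSTIFIED_TRUST = "justified trust"
    MISPLACED_TRUST = "misplaced trust"
    UNJUSTIFIED_MISTRUST = "unjustified mistrust"
    # Not named in the roadmap: this label is an assumption.
    JUSTIFIED_DISTRUST = "justified distrust"


def classify(trusted: bool, trustworthy: bool) -> TrustOutcome:
    """Map whether a system is trusted, and whether it is trustworthy, to an outcome."""
    if trusted and trustworthy:
        return TrustOutcome.JUSTIFIED_TRUST
    if trusted and not trustworthy:
        return TrustOutcome.MISPLACED_TRUST
    if not trusted and trustworthy:
        return TrustOutcome.UNJUSTIFIED_MISTRUST
    return TrustOutcome.JUSTIFIED_DISTRUST


# Print all four combinations.
for trusted in (True, False):
    for trustworthy in (True, False):
        outcome = classify(trusted, trustworthy)
        print(f"trusted={trusted}, trustworthy={trustworthy}: {outcome.value}")
```

Only the first case is the goal of assurance; the two mismatched cases correspond to the risks and opportunity costs described above.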

How AI assurance builds justified trust

There are two key problems which organisations must overcome to build justified trust:

- An information problem: Organisations need to reliably and consistently evaluate whether an AI system is trustworthy, to provide the evidence base for whether or not people should trust it.

- A communication problem: Organisations need to communicate their evidence to assurance users, translating it to the appropriate level of complexity so that those users can direct their trust or distrust accordingly.

The value of assurance lies in overcoming both of these problems to enable justified trust.

Assurance requires measuring and evaluating a variety of information to show that the AI system being assured is reliable and trustworthy. This includes how these systems perform, how they are governed and managed, whether they are compliant with standards and regulations, and whether they will reliably operate as intended. Assurance provides the evidence required to demonstrate that a system is trustworthy.

Assurance engagements rely on clear metrics and standards against which organisations can communicate that their systems are effective, reliable and ethical. Assurance engagements therefore provide a process for (1) making and assessing verifiable claims to which organisations can be held accountable, and (2) communicating these claims to the relevant actors so that they can build justified trust where a system is deserving of it.

The five elements of an assurance engagement

This challenge of assessing the trustworthiness of systems, processes and organisations to build justified trust is not unique to AI. Across different mature assurance ecosystems, we can see how different assurance models have been developed and deployed to respond to different types of risks that arise in different environments. These range from risks around professional integrity, qualifications and expertise in legal practice, to operational safety and performance risks in safety-critical industries such as aviation or medicine.

The requirements for a robust assurance process are most clearly laid out in the accounting profession, although we see very similar characteristics in a range of mature assurance ecosystems.

In the accounting profession, the five elements of assurance are specified as follows:

| Element | Description |
|---|---|
| A 3-party relationship | The three parties typically involved in an assurance relationship are the responsible party, practitioner and assurance user. |
| Agreed and appropriate subject matter | The subject matter of an assurance engagement can take many forms. An appropriate subject matter is identifiable, and capable of consistent evaluation or measurement against the identified criteria. Appropriate subject matter is such that the information about it can be subjected to procedures for gathering sufficient and appropriate evidence. |
| Suitable criteria | Criteria are the benchmarks used to evaluate or measure the subject matter. They are required for the reasonably consistent measurement and evaluation of the subject matter within the context of professional judgement. |
| Sufficient and appropriate evidence | Sufficiency is a measure of the quantity of evidence. Appropriateness is the measure of the quality of the evidence, i.e. its relevance and reliability. |
| Conclusion | The conclusion conveys the assurance obtained about the subject matter information. |
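One way to make the five elements concrete is to treat them as a checklist that an engagement must satisfy before its conclusion can be relied upon. The Python sketch below is a hypothetical illustration, not an accounting or CDEI standard: the class names, role strings and the `min_evidence` threshold standing in for ‘sufficient’ evidence are all assumptions, and in practice sufficiency and appropriateness are matters of professional judgement rather than a simple count.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical role names for the three parties in the accounting model.
REQUIRED_ROLES = {"responsible party", "practitioner", "assurance user"}


@dataclass
class Party:
    name: str
    role: str  # expected to be one of REQUIRED_ROLES


@dataclass
class Evidence:
    description: str
    relevant: bool  # appropriateness, part 1: relevant to the subject matter?
    reliable: bool  # appropriateness, part 2: can it be relied upon?


@dataclass
class AssuranceEngagement:
    parties: list[Party]
    subject_matter: str
    criteria: list[str]
    evidence: list[Evidence] = field(default_factory=list)
    conclusion: str | None = None

    def missing_elements(self, min_evidence: int = 1) -> list[str]:
        """List which of the five elements are absent or inadequate."""
        missing = []
        if not REQUIRED_ROLES <= {p.role for p in self.parties}:
            missing.append("3-party relationship")
        if not self.subject_matter:
            missing.append("agreed and appropriate subject matter")
        if not self.criteria:
            missing.append("suitable criteria")
        # Sufficiency (quantity) is approximated here by counting appropriate items.
        appropriate = [e for e in self.evidence if e.relevant and e.reliable]
        if len(appropriate) < min_evidence:
            missing.append("sufficient and appropriate evidence")
        if self.conclusion is None:
            missing.append("conclusion")
        return missing


# Hypothetical engagement at the planning stage: no evidence or conclusion yet.
engagement = AssuranceEngagement(
    parties=[
        Party("Example developer", "responsible party"),
        Party("Example audit firm", "practitioner"),
        Party("Example procuring organisation", "assurance user"),
    ],
    subject_matter="shortlisting decisions made by a CV-screening model",
    criteria=["documented fairness and performance criteria agreed with the assurance user"],
)
print(engagement.missing_elements())
# → ['sufficient and appropriate evidence', 'conclusion']
```

Modelling the elements separately makes it explicit that a conclusion is only meaningful once the parties, subject matter, criteria and evidence are all in place.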

The accounting model is helpful for thinking about the structure that AI assurance engagements need to take. The five elements help to ensure that information about the trustworthiness of different aspects of AI systems is reliably evaluated and communicated.

While this roadmap draws on the formal definitions developed by the accounting profession, similar roles, responsibilities and institutions for standard setting, assessment and verification are present across the range of assurance ecosystems - from cybersecurity to product safety - providing transferable assurance approaches.

Within these common elements, there is also variation in the use of different assurance models across mature ecosystems. Some rely on direct performance testing, while others rely on reviewing processes or ensuring that accountable people have thought about the right issues at the right time. In each case, the need to assure different subject matters has led to variation in the development and use of specific assurance models, to achieve the same ends.

Delivering AI assurance

In this section, we will build on our analysis of AI assurance and how it can help to build justified trust in AI systems, by briefly explaining some of the mechanisms that can be used to deliver AI assurance. We will explore where they are useful for assuring different types of subject matter that are relevant to the trustworthiness of AI systems.

A more detailed exploration of AI assurance mechanisms and how they apply to different subject matter in AI assurance is included in our AI assurance guide.

Assurance techniques

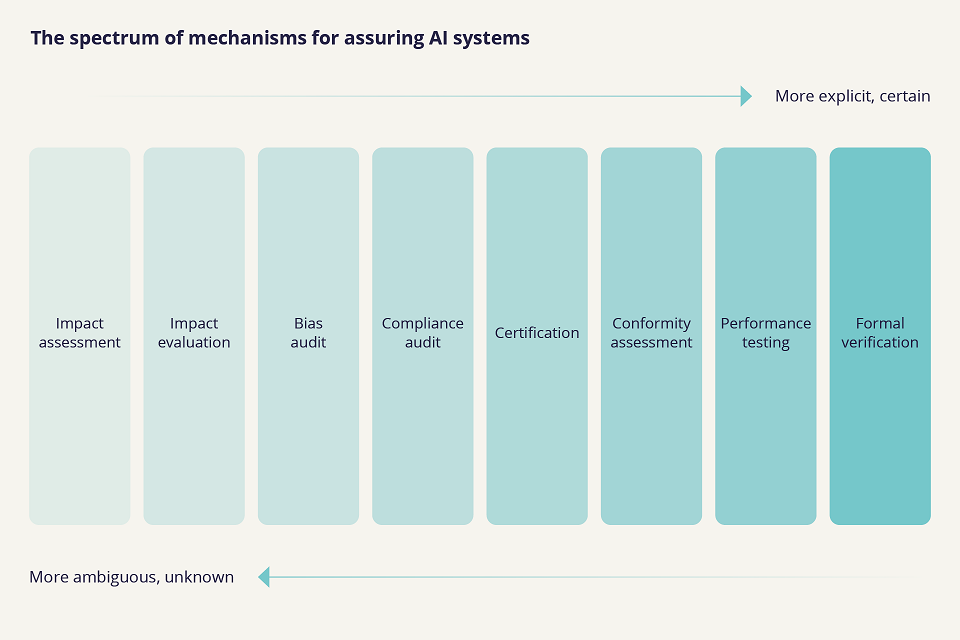

There are multiple approaches to delivering assurance. The spectrum of assurance techniques offers different processes for providing assurance, enabling assurance users to have justified trust in a range of subject matters relevant to the trustworthiness of AI systems.

On one end of this spectrum, impact assessments are designed to account for uncertainty, ambiguity and the unobservability of potential future harms. Impact assessments require expertise and subjective judgement to account for these factors, but they enable standardised processes for qualitatively assessing potential impacts. Assurance can be provided against these processes and the mitigation strategies put in place to deal with potential adverse impacts.

At the other end of this spectrum, formal verification is appropriate for assessing trustworthiness for subject matters which can be measured objectively and with a high degree of certainty. It is ineffective if the subject matter is ambiguous, subjective or uncertain. For example, formal guarantees of fairness cannot be provided for an AI system’s outputs.

| Technique | Description and example |
|---|---|
| Impact assessment | Used to anticipate the effect of a policy or programme on environmental, equality, human rights, data protection or other outcomes. Example: The Canadian government’s Algorithmic Impact Assessment (AIA) is a mandatory questionnaire designed for government departments and agencies that determines the impact level of an automated decision system. The tool is composed of 48 risk and 33 mitigation questions. Assessment scores are based on factors including the system design, algorithm, decision type, impact and data. |
| Impact evaluation | Similar in origin and practice to impact assessment, but conducted retrospectively, after a programme or policy has been implemented. Example: CAHAI (the Council of Europe’s Ad hoc Committee on Artificial Intelligence) is producing an impact assessment for organisations developing, procuring or deploying AI, to identify and mitigate the potential impacts of AI systems on human rights, democracy and the rule of law. The impact assessment, which combines AI impact assessment with human rights due diligence, is designed to be iterative and will therefore incorporate impact evaluation in subsequent assessments. |
| Risk assessment | Seeks to identify risks that might be disruptive to the business carrying out the assessment, e.g. reputational damage. Example: The UK company Holistic AI provides a platform service for automated risk assessment and assurance for companies deploying AI systems. Holistic AI’s risk assessment platform offers a range of tools from this list, including bias audit, performance testing and continuous monitoring, to provide assurance across the verticals of bias, privacy, explainability and robustness. |
| Bias audit | Focused on assessing the inputs and outputs of algorithmic systems to determine whether there is unfair bias in the outcome of a decision or classification made by the system, or in the input data used by the system. Example: Etiq AI is a customisable software platform for data scientists, risk and business managers in the insurance, lending and technology industries. The platform offers testing, monitoring, optimisation and explainability solutions to allow users to identify and mitigate unintended bias in machine learning algorithms. |
| Compliance audit | Involves a review of a company’s adherence to internal policies and procedures, external regulations or legal requirements. Specialised types of compliance audit include system and process audits, which can be used to verify that organisational processes and management systems are operating effectively according to predetermined standards, and regulatory inspections, which regulatory agencies require in order to review an organisation’s operations and ensure compliance with applicable laws, rules and regulations. Example: ForHumanity have produced a framework for the independent audit of AI Safety in the categories of Bias, Privacy, Ethics, Trust and Cybersecurity. The aim of the audit is to build an ‘infrastructure of trust’. To create this infrastructure of trust, ForHumanity have set out five criteria to ensure that rules are auditable: (1) Binary, i.e. compliant/non-compliant; (2) Measurable/unambiguous; (3) Iterated and open source; (4) Consensus driven; (5) Implementable. |
| Certification | A process where an independent body attests that a product, service, organisation or individual has been tested against, and met, objective standards of quality or performance. Example: Responsible Artificial Intelligence Institute (RAI) certification is used by organisations to demonstrate that an AI system has been designed, built and deployed in line with the Organisation for Economic Co-operation and Development (OECD)’s five Principles on AI: (1) Explainability; (2) Fairness; (3) Accountability; (4) Robustness; (5) Data Quality. |
| Conformity assessment | Provides assurance that a product, service or system being supplied meets the expectations specified or claimed, prior to it entering the market. Conformity assessment includes activities such as testing, inspection and certification. Example: The Medicines and Healthcare products Regulatory Agency (MHRA) has developed an extensive work programme to update regulations applying to software and AI as a medical device. The reforms will offer clear assurance guidance on how to interpret regulatory requirements and how to demonstrate conformity. |
| Performance testing | Used to assess the performance of a system with respect to predetermined quantitative requirements or benchmarks. Example: Fiddler’s platform offers continuous machine learning monitoring and automated AI explainability for an organisation to monitor, observe and analyse the performance of their AI models. The platform enables users to pinpoint data drift and alerts users when they need to retrain their models. |
| Formal verification | Establishes whether a system satisfies some requirements using the formal methods of mathematics. Example: Imandra provides what they call ‘reasoning as a service’. Imandra is a general-purpose automated reasoning engine that leverages formal verification methods to offer a suite of tools that can be used to provide insights into algorithm performance and guarantees for a wide range of algorithms. Imandra’s automated reasoning and analysis engine aims to mathematically verify algorithm properties and systematically explore and elucidate possible algorithm behaviours. |

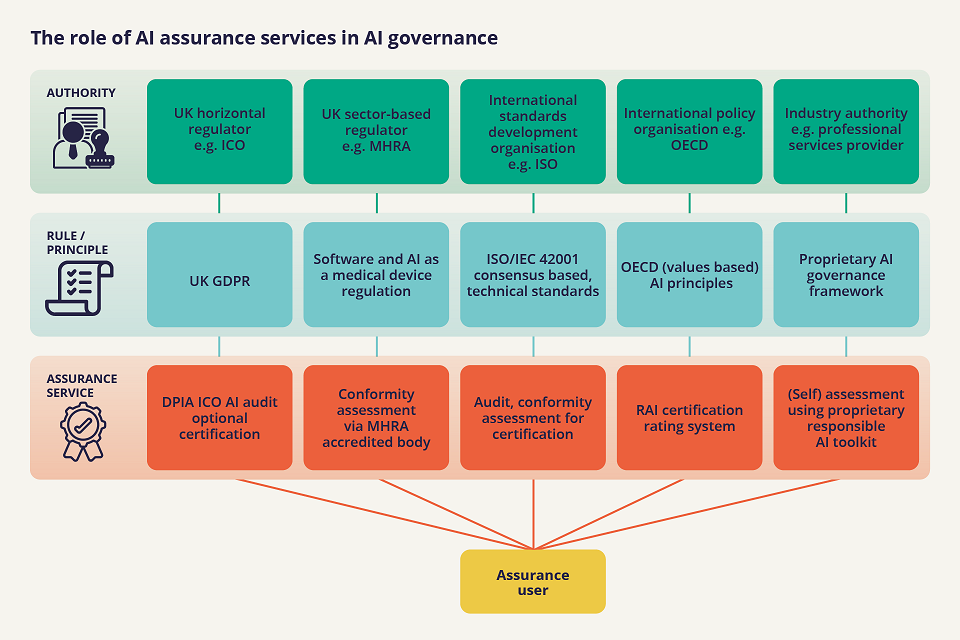

The role of assurance in AI governance

AI assurance services are a distinctive and important aspect of broader AI governance. AI governance covers all the means by which the development, use, outputs and impacts of AI can be shaped, influenced and controlled, whether by government or by those who design, develop, deploy, buy or use these technologies. AI governance includes regulation, but also tools like assurance, standards and statements of principles and practice.

Regulation, standards and other statements of principles and practice set out criteria for how AI systems should be developed and used. Alongside this, AI assurance provides the ‘infrastructure’ for checking, assessment and verification, to provide reliable information about whether organisations are following these criteria.

An AI assurance ecosystem can offer an agile ‘regulatory market’ of assurance services, consisting of both for-profit and not-for-profit services. This regulatory market can support regulators as well as standards development bodies and other responsible AI authorities to ensure trustworthy AI development and deployment while enabling industry to innovate at pace and manage risk.

AI assurance services will play a crucial role in a regulatory environment by providing a toolbox of mechanisms and processes to monitor regulatory compliance, and by supporting the development of common practice beyond statutory requirements, to which organisations can be held accountable.

Compliance with regulation

AI assurance mechanisms facilitate the implementation of regulation and the monitoring of regulatory compliance in the following ways: implementing and elaborating rules for the use of AI systems in specific circumstances; translating rules into practical forms useful for end users and evaluating alternative models of implementation; and providing technical expertise and capacity to assess regulatory compliance across the system lifecycle.

Assurance mechanisms are also important in the international regulatory context. Assurance mechanisms can be used to facilitate assessment against designated technical standards that can provide a presumption of conformity with essential legal requirements. The presumption of conformity can enable interoperability between different regulatory regimes, to facilitate trade. For example, the EU’s proposed AI Act states that ‘compliance with standards…should be a means for providers to demonstrate conformity with the requirements of this Regulation.’

Managing risk and building trust

Assurance services also enable stakeholders to manage risk and build trust by ensuring compliance with standards, norms and principles of responsible innovation, alongside or as an alternative to more formal regulatory compliance. Assurance tools can be effective as ‘post-compliance’ tools where they can draw on alternative, commonly recognised sources of authority. These might include industry codes of conduct, standards, impact assessment frameworks, ethical guidelines, public values, organisational values or preferences stated by the end users.

Post-compliance assurance is particularly useful in the AI context where the complexity of AI systems can make it very challenging to craft meaningful regulation for them. Assurance services can offer means to assess, evaluate and assign responsibility for AI systems’ impacts, risks and performance without the need to encode explicit, scientific understandings in law.

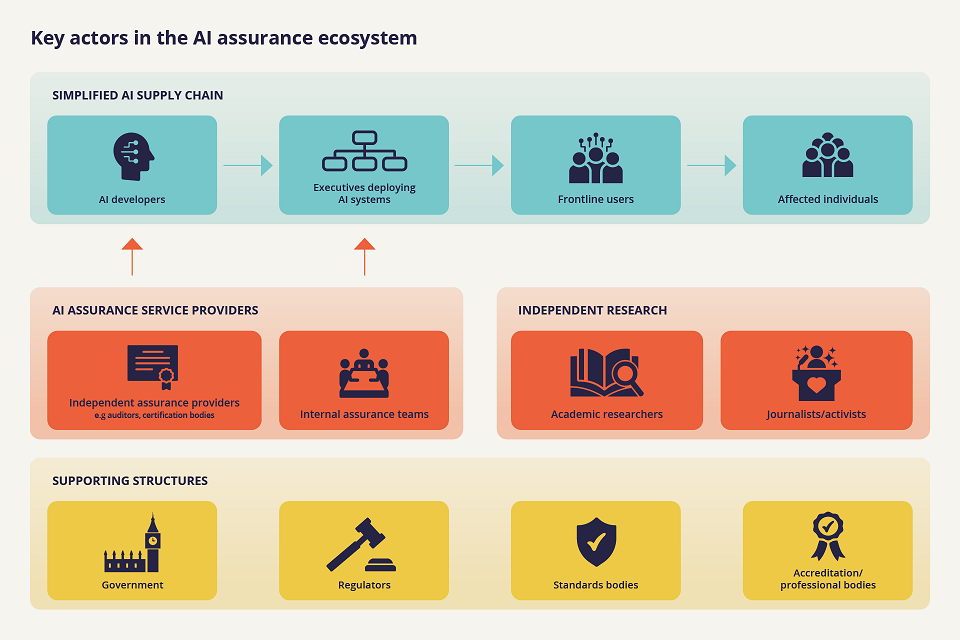

Roles and responsibilities in the AI assurance ecosystem

Effective AI assurance will rely on a variety of actors with different roles and responsibilities for evaluating and communicating the trustworthiness of AI systems. In the diagram below we have categorised four important groups of actors who will need to play a role in the AI assurance ecosystem: the AI supply chain, AI assurance service providers, independent research and oversight, and supporting structures for AI assurance. The efforts of different actors in this space are both interdependent and complementary. Building a mature assurance ecosystem will therefore require an active and coordinated effort.

The actors specified in the diagram are not meant to be exhaustive, but represent the key roles in the emerging AI assurance ecosystem. For example, Business to Business to Consumer (B2B2C) deployment models can greatly increase the complexity of assurance relationships in the real world, where the chain of deployment between AI developers and ‘end consumers’ can go through multiple client layers.

Similarly, it is important to note that while the primary role of the ‘supporting structures’ in developing an AI assurance ecosystem is to set out the requirements for trustworthy AI through regulation, standards or guidance, these actors can also play other roles in the assurance ecosystem. For example, regulators also provide assurance services via advisory, audit and certification functions e.g. the Information Commissioner’s Office’s (ICO) investigation and assurance teams assess the compliance of organisations using AI. Government and other public sector organisations also play the ‘executive’ role when procuring and deploying AI systems.

These actors play a number of interdependent roles within an assurance ecosystem. The table below illustrates each actor’s role in demonstrating the trustworthiness of AI systems and their own requirements for building trust in AI systems.

| Actor | Role in demonstrating trustworthiness | Requirements for building trust |
|---|---|---|
| Simplified AI supply chain | | |
| Developer | Demonstrating their development is responsible and compliant with applicable regulations and in conformity with standards, and communicating their system’s trustworthiness to executives and users. | Need clear standards, regulations and practice guidelines in place so that they can have trust that the technologies they develop will be trustworthy and they will not be liable. |
| Executive | Ensuring the systems they procure comply with standards, communicating this compliance to boards, users and regulators, and managing the Environmental, Social & Governance risks. | Require evidence from developers and vendors that gives them confidence to deploy AI systems. |
| Frontline users | Communicating the system’s compliance to demonstrate trustworthiness to affected individuals. | Need to understand system compliance and its expected results to trust in its use. |
| Affected individuals | N/A | As citizens, consumers or employees, they need to understand enough about the use of AI systems in decisions affecting them, or being used in their presence (e.g. facial recognition technologies), to exercise their rights. |
| Assurance service providers | | |
| Independent assurance provider | Collecting evidence, assessing and evaluating AI systems and their use, and communicating clear evidence and standards which are understood by their assurance customers. | Require clear, agreed standards as well as reliable evidence from developers, vendors or executives about AI systems to have confidence and trust that the assurance they provide is valid and appropriate for a particular use case. |
| Independent research and oversight | | |
| Research bodies | Contributing research on potential risks or developing potential assurance tools. | Need appropriate access to industry systems, documentation and information to be properly informed about the systems for which they are developing assurance tools and techniques. |
| Journalists and activists | Providing publicly available information and scrutiny to keep affected individuals informed. | Require understandable rules and laws around transparency and information disclosure to have confidence that systems are trustworthy and open to scrutiny. |
| Supporting structures | | |
| Governments | Shaping an ecosystem of assurance services and tools to have confidence that AI will be deployed responsibly, in compliance with laws and regulations, and in a way that does not unduly hinder economic growth. | Require confidence that AI systems being developed and deployed in their jurisdiction will be compliant with applicable laws and regulation and will respect citizens’ rights and freedoms, to trust that AI systems will not cause harm and will not damage the government’s reputation. |
| Regulators | Encouraging, testing and confirming that AI systems are compliant with their regulations, incentivising best practice and creating the conditions for the trustworthy development and use of AI. | Require confidence that they have the appropriate regulatory mandate, skills and resources to manage and mitigate AI risks. |
| Standards bodies | Convening actors including industry and academia to develop commonly accepted technical standards, and encouraging adoption of existing standards. | Require confidence that standards developed are technically appropriate and have sufficient consensus to ensure trustworthy AI where those standards are being used. |
| Professional bodies | Enforcing standards of good practice and ethics, which can be important both for developers and assurance service providers. | Need to maintain oversight of the knowledge, skills, conduct and practice for AI assurance. |
| Accreditation bodies | Recognising and accrediting trustworthy independent assurance providers to build trust in auditors, assessors and certifiers throughout the ecosystem. | Need accredited bodies or individuals to provide reliable evidence of good practice. |

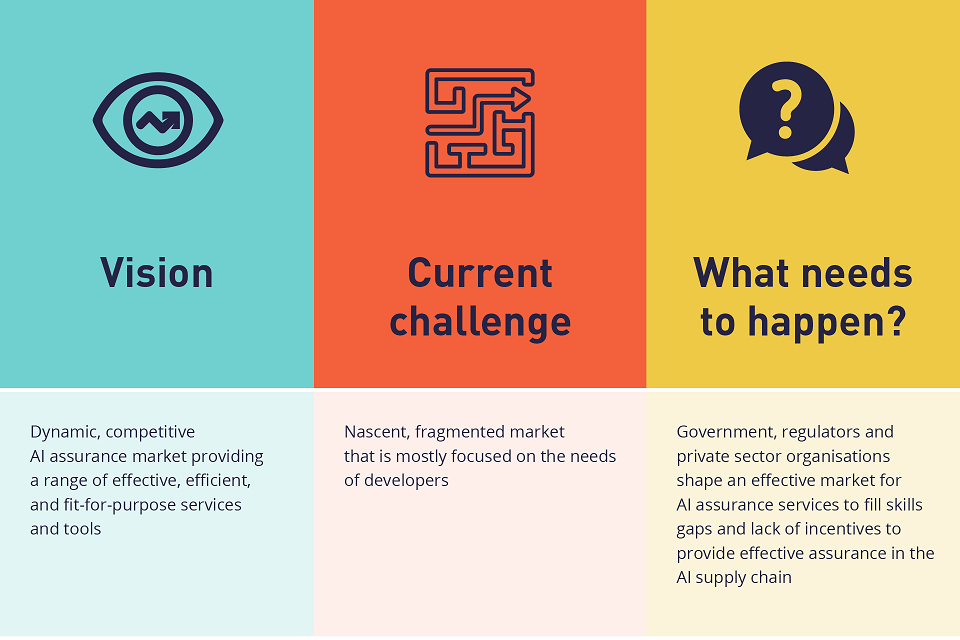

Roadmap to a mature AI assurance ecosystem

This section sets out a vision for a mature AI assurance ecosystem, and the practical steps that can be taken to make this vision a reality. We have based this vision on our assessment of the current state of the AI assurance ecosystem, as well as comparison with more mature ecosystems in other domains.

An effective AI assurance ecosystem matters for the development of AI. Without it, we risk trust without trustworthiness, where risky, unsafe or inappropriately used AI systems are deployed, leading to real-world harm to people, property and society. Alternatively, the prospect of these harms could lead to unjustified mistrust in AI systems, where organisations hesitate to deploy AI systems even where they could deliver significant benefit. Worse still, we risk both of these happening simultaneously.

An effective AI assurance ecosystem will rely on accommodating the perspectives of multiple stakeholders who have different concerns about AI systems and their use, different incentives to respond to those concerns, and different skills, tools and expertise for assurance. This coordination task is particularly challenging for AI, as it is a general-purpose group of technologies that can be applied in many domains. Delivering meaningful assurance requires not only an understanding of the technical details of AI systems, but also subject matter expertise and knowledge of the context in which these systems are used.

The current ecosystem contains the right ingredients for success, but is highly fragmented and needs to mature in a number of ways to become fully effective. Responsibilities for assurance need to be distributed appropriately between actors, the right standards need to be developed, and the right skills need to be built throughout the ecosystem.

The market for AI assurance is already starting to grow, but action is needed to shape this ecosystem into an effective one that can respond to the full spectrum of risks and compliance issues presented by AI systems. To distribute responsibilities effectively and develop the skills and supporting structures needed for assurance, we have identified six key areas for development. These are:

- Generating demand for assurance

- Supporting the market for assurance

- Developing standards

- The role of professionalisation and specialised skills

- The role of regulation

- The role of independent researchers

In the following sections, we outline the current state of the AI assurance ecosystem with regard to these six areas and compare this with our vision for a mature future ecosystem. We highlight the roles of different actors and outline important next steps for building towards a mature AI assurance ecosystem.

Increasing demand for effective AI assurance

Early demand for AI assurance has been driven primarily by the reputational concerns of actors in the AI supply chain, along with proactive efforts by AI developers to build AI responsibly. However, pressure on organisations to take accountability for their use of AI is now coming from a number of directions. Public awareness of issues related to AI assurance (especially bias) is growing in response to high-profile failures. We are also seeing increasing interest from regulators, higher customer expectations, and concerns about where liability for harms will sit. The development community has been proactive in this space, managing risks as part of the responsible AI movement; however, others in the ecosystem need to better recognise their assurance needs.

Increased interest and higher consumer expectations mean that organisations will need to demand more evidence from their suppliers and their internal teams to demonstrate that the systems they use are safe and otherwise trustworthy.

Organisations developing and deploying AI systems already have to respond to existing regulations, including data protection law, equality law and sector-specific regulations. As momentum to regulate AI grows, organisations will need to anticipate future regulation to remain competitive. This will include both UK sector-based regulation and, for organisations exporting products, non-UK developments such as the proposed EU AI regulation and Canada’s Algorithmic Impact Assessment (AIA).

Regulators are starting to demand evidence that AI systems being deployed are safe and otherwise trustworthy, with some regulators starting to set out assurable recommendations and guidelines for the use of AI systems.

In a mature assurance ecosystem, assurance demand is driven by:

- Organisations’ desire to know that their systems or processes are effective and functioning as intended.

- The need for organisations to earn and keep the trust of their customers and staff by demonstrating the trustworthiness of the AI systems they deploy. This will partly need to happen proactively but will also be driven by commercial pressures.

- An awareness of, and a duty to address, real material risks to the organisation and wider society.

- The need to comply with, and demonstrate compliance with, regulations and legal obligations.

- The desire to demonstrate trustworthiness to the wider public and to compete on the basis of public trust.

The importance of these drivers will vary by sector. For example, in safety-critical industries the duty to address material risks and build consumer trust will be a stronger driver of assurance demand than in low-risk industries. In most industries where AI is being adopted, the primary driver for assurance services will be organisations’ need for confidence that their systems will actually work effectively, as intended.

To start to respond to these demands, organisations building or deploying AI systems should be developing a clear understanding of the concrete risks and concerns that arise. Regulators and professional bodies have an important supporting role here in setting out guidance to inform industry about key concerns and drive effective demand for assurance. Once these concerns have been identified, organisations need to think about the sorts of evidence that are needed to understand, manage and mitigate these risks, and to provide assurance to other actors in the ecosystem.

In a mature AI assurance ecosystem, those accountable for the use of AI systems will demand and receive evidence that these systems are fit for purpose. Organisations developing, procuring or using AI systems should be aware of the risks, governance requirements and performance outcomes that they are accountable for, and provide assurance accordingly. Organisations that are aware of their accountabilities for risks will be better placed to demand the right sort of assurance services to address these risks. As well as setting accountabilities, regulation and standards will play an important role in structuring incentives for assurance, i.e. setting assurance requirements and criteria to incentivise effective demand.

The drivers discussed above will inevitably increase the demand for AI assurance, but we still need to take care to ensure that the demand is focused on services that add real value. Many current AI assurance services are focused primarily on aspects of risk and performance that are most salient to an organisation’s reputation. This creates risks of deception and ethics washing, where actors in the supply chain can selectively commission or perform assurance services primarily to benefit their reputation, rather than to address the underlying drivers of trustworthiness.

This risk of ethics washing reflects an incentive problem. The economic incentives of actors within the AI supply chain come into conflict with incentives to provide reliable, trustworthy assurance. Incentive problems in the AI supply chain currently prevent demand for AI assurance from satisfactorily ensuring that AI systems are trustworthy, and misalign demand for AI assurance with broader societal benefit. Avoiding this risk will require ensuring both that assurance services are valuable and attractive for organisations, and that assurance requirements, whether regulatory or non-regulatory, are clearly defined across the spectrum of relevant risks, with organisations held to account on this basis.

Demand is also constrained by challenges with skills and accountability within the AI supply chain. In many organisations there is limited awareness of two things: the types of risks, and the aspects of systems and development processes, that need to be assured for AI systems to be trustworthy; and the appropriate assurance approaches for assessing trustworthiness across these areas. There is also a lack of knowledge and coordination across the supply chain about who is accountable for assurance in different areas. A clearer understanding of accountabilities is required to drive demand for assurance.

A growing market for assurance services

As demand for AI assurance grows, a market for assurance services needs to develop in response to limitations in skills and competing incentives that actors in the AI supply chain, government and regulators are not well placed to overcome.

Organisations in the AI supply chain will increasingly demand evidence that systems are trustworthy and compliant as they become aware of their own accountabilities for developing, procuring and deploying AI systems.

However, actors in the AI supply chain won’t have the expertise required to provide assurance in all of these areas. In some cases building specialist in-house capacity to serve these needs will make sense. For example, in the finance industry, model risk management is a crucial in-house function. In other areas, building specialist in-house capacity will be difficult and will likely not be an efficient way to distribute skills and resources for providing assurance services.

The business interests of actors in the AI supply chain mean that, without independent verification, first- and second-party assurance will in many cases be insufficient to build justified trust. Assurance users will be unable to have confidence that the assurance provided by the first party faithfully reflects the trustworthiness of the AI system.

Therefore, as demand for AI assurance grows, a market for external assurance providers will need to grow to meet this demand. This market for independent assurance services should include a dynamic mix of small and large providers offering a variety of services to suit a variety of needs.

A market of AI-specific assurance services has started to emerge, with a range of companies including established professional services firms, research institutions and specialised start-ups beginning to offer assurance services. There is a more established market of services addressing data protection issues, with a relatively new but growing sector of services addressing the fairness and robustness of AI systems. More novel services are also emerging to enable effective assurance, such as testbeds to promote the responsible development of autonomous vehicles. Similarly, the Maritime Autonomy Surface Testbed enables the testing of autonomous maritime systems for verification and proof of concept.

Not all AI assurance will be new, though. In some use-cases and sectors, existing assurance mechanisms will need to evolve to adapt to AI. For example, routes such as conformity assessment, audit and certification used in safety assurance mechanisms will inevitably need to be updated to consider AI issues. Regulators in safety-critical industries are leading the way here. The Medicines and Healthcare products Regulatory Agency (MHRA) is committed to developing the world’s leading regulatory system for Software as a Medical Device (SaMD), including AI.

The ICO has also begun a number of initiatives to ensure that AI systems are developed and used in a trustworthy manner. It has produced an AI Auditing Framework alongside draft guidance, designed to complement its guidance on Explaining decisions made with AI, produced in collaboration with the Alan Turing Institute. In September 2021, Healthily, the creator of an AI-based smart symptom checker, submitted the first AI explainability statement to the ICO. ForHumanity, a US-led non-profit, has submitted a draft UK GDPR certification scheme for accreditation by the ICO and the UK Accreditation Service (UKAS).

A range of toolkits and techniques for assuring AI is emerging. However, the AI assurance market is currently fragmented and at a nascent stage. We are now in a window of opportunity to shape how this market emerges. This will involve a concerted effort across the ecosystem, in both the public and private sectors, to ensure that AI assurance services can meet the UK’s objectives for ethical innovation.

Enablers for the market of assurance services

Assuring AI systems requires a mix of different skills. Data scientists will be needed to provide formal verification and performance testing; audit professionals will be required to assess organisations’ compliance with regulations; and risk management experts will be required to assess risks and develop mitigation processes.

Given the range of skills, it is perhaps unlikely that demand will be met entirely by multi-skilled individuals; multi-disciplinary teams bringing a diverse range of expertise will be needed. A diverse market of assurance providers needs to be supported to ensure the right specialist skills are available. The UK’s National AI Strategy has begun to set out initiatives to help develop, validate and deploy trustworthy AI, including building AI and data science skills through skills bootcamps. It will be important for the UK to develop both general AI skills and the specialist skills in assurance to do this well.

In addition to independent assurance providers, there needs to be a balance of skills for assurance across different roles in the ecosystem. For example, actors within the AI supply chain will require a baseline level of assurance skills to identify risks and to provide or procure assurance services effectively. This balance of skills should reflect the complexity of different assurance processes, the need for independence, and the role of expert judgement in building justified trust.

Supporting an effective balance of skills for assurance, and more broadly enabling a trustworthy market of independent assurance providers, will rely on the development of two key supporting structures. First, standards (both regulatory and technical) are needed to set shared reference points for assurance engagements, enabling agreement between assurance users and independent providers. Second, professionalisation will be important in developing the skills and best practice for AI assurance across the ecosystem. Professionalisation could involve a range of complementary options, from university or vocational courses to more formal accreditation services.

The next section will outline the role of standards in enabling independent assurance services to succeed as part of a mature assurance ecosystem. After exploring the role of standards, the following section will expand on the possible options for developing an AI assurance profession.

Standards as assurance enablers

Standards are crucial enablers for AI assurance. Across a whole host of industries, the purpose of a standard is to provide a reliable basis for people to share the same expectations about a product, process, system or service.

Without commonly accepted standards to set a shared reference point, a disconnect between the values and opinions of different actors can prevent assurance from building justified trust. For example, an assurance user might disagree with the views of an assurance provider about the appropriate scope of an impact assessment, or how to measure the level of accuracy of a system. As well as enabling independent assurance, commonly understood standards will also support the scalability and viability of self-assessment and assurance more generally across the ecosystem.

There is a range of different types of standards that can be used to support AI assurance, including technical, regulatory and professional standards. The rest of this section focuses specifically on the importance of global technical standards for AI assurance. Global technical standards set out good practice that can be consistently applied to ensure that products, processes and services perform as intended – safely and efficiently. They are generally voluntary and developed through an industry-led process in global standards developing organisations, based on the principles of consensus, openness and transparency, and benefiting from global technical expertise and best practice. As a priority, independent assurance requires the development of commonly understood technical standards which are built on consensus.

Technical standardisation can help to make subject matter more objectively measurable, which can help with the adoption of more scalable assurance methods.

- Foundational standards help build common understanding around definitions and terminology, making them less ambiguous (i.e. more explicit). Foundational standards enable dialogue between assurance users and providers, facilitating greater trust throughout the ecosystem by providing common grounds for assurance users to assess and challenge assurance processes. For example, ISO/IEC DIS 22989 - Information technology - Artificial intelligence - Artificial intelligence concepts and terminology.

- Process standards help to universalise best practice in organisational management, governance and internal control as a way to achieve good outcomes via organisational processes. Process standards institutionalise trust-building practices such as risk and quality management. Some process standards are ‘certifiable’, meaning that organisations can be independently assessed as meeting ‘good practice’ and can receive a certification against that standard. For example, ISO/IEC CD 42001 - Information Technology - Artificial Intelligence - Management System.

- Measurement standards provide common definitions for quantitative measurement of particular aspects of performance. This helps to make the subject matter more explicit and mutually understood, enabling more scalable assurance methods. Even where there are multiple (mutually incompatible) measurement standards, they can still benefit assurance by improving the transparency and accessibility of assurance processes where standards for performance can’t be agreed. For example, ISO/IEC DTS 4213 Assessment of Machine Learning Performance describes best practices for comparing the performance of machine learning models, and specifies classification performance metrics and reporting requirements.

- Performance standards set specific thresholds for acceptability. For AI, performance thresholds are likely to be established only once measurements are agreed, and these are still in discussion.
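To make the distinction between measurement and performance standards concrete, the following is a minimal Python sketch. It is not drawn from ISO/IEC DTS 4213 or any other standard. The `measure` function plays the role of a measurement standard: it fixes how accuracy, precision and recall are computed from a binary classifier’s predictions. The `meets_thresholds` function plays the role of a performance standard: it compares those measurements against minimum values. Both function names, and the threshold values in `LOW_RISK_THRESHOLDS` and `HIGH_RISK_THRESHOLDS`, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClassificationReport:
    accuracy: float
    precision: float
    recall: float


def measure(y_true: list, y_pred: list) -> ClassificationReport:
    """Measurement step (hypothetical): compute fixed binary classification metrics."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return ClassificationReport(
        accuracy=(tp + tn) / len(pairs),
        precision=tp / (tp + fp) if tp + fp else 0.0,
        recall=tp / (tp + fn) if tp + fn else 0.0,
    )


def meets_thresholds(report: ClassificationReport, thresholds: dict) -> dict:
    """Performance step (hypothetical): check each named metric against a minimum value."""
    return {name: getattr(report, name) >= minimum for name, minimum in thresholds.items()}


# Hypothetical, context-specific thresholds: same metrics, different acceptable levels.
LOW_RISK_THRESHOLDS = {"accuracy": 0.70}
HIGH_RISK_THRESHOLDS = {"accuracy": 0.95, "recall": 0.90}

report = measure([1, 1, 1, 0, 0, 0, 1, 0], [1, 0, 1, 0, 1, 0, 1, 0])
print(report)  # ClassificationReport(accuracy=0.75, precision=0.75, recall=0.75)
print(meets_thresholds(report, LOW_RISK_THRESHOLDS))   # {'accuracy': True}
print(meets_thresholds(report, HIGH_RISK_THRESHOLDS))  # {'accuracy': False, 'recall': False}
```

The same measurement passes one set of thresholds and fails the other, which is why agreeing how to measure can come before agreeing what level of performance is acceptable.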

Standards at all of these levels can help to deliver mutually understood and scalable AI assurance.

Where independent assurance is valuable, agreed standards are needed to enable assurance users to trust the evidence and conclusions presented by assurance service providers. Standards enable clear communication of a system’s trustworthiness, i.e. “this standard has been met/hasn’t been met”. Existing AI ‘auditing’ efforts are carried out without commonly accepted standards: they are closer to ‘advisory’ services, which are useful but rely heavily on the judgement of the ‘auditor’.

To ensure that AI systems are safe and otherwise trustworthy, many AI systems and organisations will need to be assured against a combination of standards of different types.

Developing AI technical standards that support assurance

Standards can address the disconnect between different actors and help assurance users to understand what assurance services are actually offering. But we need to draw on different perspectives and sources of expertise for these standards to be useful and effective.

Drawing on diverse expertise is particularly important for AI standards setting, due to the sociotechnical nature of AI systems. AI systems are not purely technical instruments; they embed values and normative judgements in their outputs. For this reason, AI standardisation cannot be a purely technical benchmarking exercise but also needs to consider the social and ethical dimensions of AI.

The IEEE Standard Model Process for Addressing Ethical Concerns during System Design (IEEE 7000-2021) sets out to address this problem. The standard provides a systematic, transparent and traceable approach for integrating human and social values into the design of AI systems. Where traditional evaluations of technology focus on physical risk, this standard broadens the focus to consider value harms, making it important for the design and development of AI.

We need independently agreed standards for tools such as audit, conformity assessment and certification to work effectively. We’re starting to see efforts to build these technical standards in international standards development organisations, which is an encouraging start. For example, significant work on AI standards is being done by the Joint Technical Committee of the ISO (International Organisation for Standardisation) and IEC (International Electrotechnical Commission), a consensus-based voluntary international standards group.

Notable efforts are also being made by the UK’s National Quality Infrastructure (NQI), which has produced a Joint Action Plan intended to enable the NQI’s activities across standards, measurement and accreditation for emerging technologies, including AI. The NQI consists of the UK Accreditation Service (UKAS), the British Standards Institution (BSI) and the National Physical Laboratory (NPL), alongside the Department for Business, Energy and Industrial Strategy (BEIS).

Focusing technical standards development efforts to support AI assurance

Current efforts in AI standards development can be split into four types: performance, process and measurement (metrology) standards, and foundational standards.

Ideally, an explicit performance standard allows assurance techniques to assess the conformity of an AI system with that standard. Although it is desirable to develop performance standards where possible, it is unlikely that universally agreed standards will be attainable across all dimensions of performance. This is due to differences of opinion that are hard to resolve, and to variation in the levels of performance and risk that are acceptable across different contexts and use-cases.

Due to the difficulty associated with developing agreed performance standards, common measurement standards and process standards should be initial areas of focus for standardisation efforts.

There is an opportunity for British organisations to play a leading role in international standards development and to collaborate with international standards organisations to export best practice and shape global standards. For example, the ISO/IEC 27001 information security standard and the ISO 9001 quality management standard were developed from British standards, and the UK has a similar opportunity to shape the areas of standardisation that will best support good governance of AI systems. Taking on this opportunity, the National AI Strategy outlines the government’s plans to work with stakeholders to pilot an AI Standards Hub to expand the UK’s international engagement and thought leadership on AI standards.

Developing process standards

As a first step towards standardising AI assurance, standards bodies should prioritise a management system standard for the development and use of AI. This focus would mirror early sustainability certification standards, which focused on processes and procedures, such as farming practices, which are easier to observe than the direct or indirect impacts of farming on the environment.

In the AI context, current examples of process standards include the ISO/IEC 42001 AI management system standard, currently in development, and the Explaining decisions made with AI guidance jointly published by the ICO and the Alan Turing Institute.

Developing measurement standards

Another area where standardisation is both feasible and useful to AI assurance is in the development of measurement or metrology standards providing commonly understood ways of measuring risks or performance, even if thresholds for what is acceptable remain more context-specific.

The measurement of bias is an important example here. There is currently a wealth of academic literature describing different approaches to defining and measuring bias in AI systems, often under the title of AI fairness. An increasing range of open source and proprietary tools exist to help machine learning practitioners apply these techniques. There has been hugely positive progress in this area in the last few years.

However, the range of approaches is also a challenge: it makes it difficult for practitioners, organisations and assurance providers to understand what exactly is being measured. This in turn makes it difficult to build common understanding and skill sets for interpreting the results, and for using those quantitative measures to support inherently subjective judgements about what is fair in a given context.

It would be useful to see some consolidation around a smaller number of well-defined technical approaches to measuring bias, defined in standards. This would provide a useful basis for the wider ecosystem to develop common approaches to interpreting the results, for example helping regulators or industry bodies to set out guidance on what is acceptable in a given context, or individual organisations to specify acceptable limits for their own supply chain. It would also help the ecosystem move towards a clearer understanding of how UK law, especially the Equality Act 2010, should be interpreted in this space.
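The difficulty of knowing what exactly is being measured can be illustrated with two definitions that are common in the fairness literature: demographic parity (the gap in positive-prediction rates between groups) and equal opportunity (the gap in true positive rates between groups). The minimal Python sketch below uses invented toy data for two hypothetical groups and shows the same predictions scoring as perfectly balanced under one measure and unequal under the other. The function names are illustrative only and are not taken from any particular fairness toolkit or standard.

```python
def selection_rate(y_pred: list) -> float:
    """Share of cases that receive the positive prediction."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true: list, y_pred: list) -> float:
    """Share of genuinely positive cases that the model predicts as positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not preds_for_positives:
        raise ValueError("group has no positive cases")
    return sum(preds_for_positives) / len(preds_for_positives)


def demographic_parity_difference(groups: dict) -> float:
    """Largest gap in selection rates between any two groups."""
    rates = [selection_rate(y_pred) for _, y_pred in groups.values()]
    return max(rates) - min(rates)


def equal_opportunity_difference(groups: dict) -> float:
    """Largest gap in true positive rates between any two groups."""
    rates = [true_positive_rate(y_true, y_pred) for y_true, y_pred in groups.values()]
    return max(rates) - min(rates)


# Invented toy data: group name -> (ground-truth labels, model predictions).
GROUPS = {
    "group_a": ([1, 1, 0, 0], [1, 0, 1, 0]),
    "group_b": ([1, 0, 0, 0], [1, 1, 0, 0]),
}

print(demographic_parity_difference(GROUPS))  # 0.0: both groups selected at rate 0.5
print(equal_opportunity_difference(GROUPS))   # 0.5: true positive rates are 0.5 vs 1.0
```

Neither number is "the" bias of the system; each answers a different question. Agreed definitions in standards, with guidance on when each is appropriate, would let assurance users know which question a reported figure answers.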

Standards bodies should aim to convene stakeholders to build consensus around terminology and useful ways of measuring bias. The resulting family of measurement standards should be published alongside guidance as to where different types of measurement standards might be appropriate.

Combining different types of technical standards

To ensure that AI systems are safe and otherwise trustworthy, many AI systems and organisations will need to be assured against a combination of standards of different types.

Testing systems against performance standards alone is insufficient to ensure trustworthy use. Performance testing can only show whether the performance of an AI system is consistent with specified requirements (goals), not whether those requirements are flawed or unsuitable for the use case. In fact, most accidents involving software do not stem from coding or testing errors; rather, they can be traced back to flawed or unsafe system requirements. Therefore, while important, performance testing alone cannot guarantee safety and trustworthiness.

Assessments of the trustworthiness of an AI system need to take into account the broader context and aims of deployment to mitigate risk appropriately. For example, the level of accuracy deemed sufficient for an AI system in a medical setting will be far higher than in many other contexts. Risk and impact assessments will need to complement testing to ensure that the performance standards chosen reflect the appropriate requirements and potential harms in a specific deployment context.

In this way, trustworthiness depends on the goals and operation of the whole system. A further aspect of this system-level trustworthiness is the need to assess not only how specific components function, but also how components interact and how the system functions as a whole.

Process standards, such as quality and risk management standards, ensure that not only the components of the system but also the interactions between them (both technical and non-technical) are adequately managed and monitored. Complex systems, such as AI systems, tend to drift toward unsafe conditions unless they are constantly monitored and maintained. Even where the individual components of a system are safe and otherwise trustworthy, unsafe interactions between them can lead to failures that cause harm.

Maintaining trustworthy AI systems across the system lifecycle and avoiding unsafe operating conditions will require the use of both process and performance standards, setting out ongoing requirements for testing and monitoring.
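Ongoing monitoring of the kind a process standard might require can be sketched as a rolling check of a deployed model’s accuracy against a minimum level. The Python below is a minimal illustration under stated assumptions: ground-truth labels eventually become available for live predictions, accuracy is the metric of interest, and the class name `RollingAccuracyMonitor`, the window size and the 0.75 threshold are all hypothetical. A real monitoring requirement would likely also cover other metrics, changes in input data and escalation procedures.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Hypothetical monitor: tracks accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window: int, min_accuracy: float) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.min_accuracy = min_accuracy
        # A deque with maxlen discards the oldest outcome once the window is full.
        self._outcomes: deque = deque(maxlen=window)

    def record(self, y_true: int, y_pred: int) -> None:
        """Store whether a single prediction matched its eventual ground-truth label."""
        self._outcomes.append(y_true == y_pred)

    def accuracy(self) -> Optional[float]:
        """Return rolling accuracy, or None until the window has filled."""
        if len(self._outcomes) < self._outcomes.maxlen:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def needs_review(self) -> bool:
        """Flag the system for human review when rolling accuracy drops below the minimum."""
        acc = self.accuracy()
        return acc is not None and acc < self.min_accuracy


# Usage with an invented stream of (ground truth, prediction) pairs.
monitor = RollingAccuracyMonitor(window=4, min_accuracy=0.75)
for y_true, y_pred in [(1, 1), (0, 0), (1, 1), (0, 0)]:
    monitor.record(y_true, y_pred)
print(monitor.accuracy(), monitor.needs_review())  # 1.0 False
for y_true, y_pred in [(1, 0), (0, 1)]:  # two consecutive errors
    monitor.record(y_true, y_pred)
print(monitor.accuracy(), monitor.needs_review())  # 0.5 True
```

A sliding window is used here so that older, possibly unrepresentative results stop masking recent degradation; the window length is a trade-off between sensitivity and noise.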

An AI assurance profession

As the market for independent assurance services develops, assurance users will increasingly demand that assurance service providers are appropriately qualified and accountable for the services they provide. They will demand evidence that providers are trustworthy and that they have sufficient expertise to justify the cost of the service.

There are a number of components needed for developing an AI assurance profession. These range from short courses, master’s degrees and vocational programmes that provide qualifications for developers or assurance practitioners, to formal accreditation by chartered bodies demonstrating clear professional standards.

Accreditation services are the next rung up on the assurance ladder, above services such as audit and certification. The two work together: certification, for example, acts as a third-party endorsement of a system, process or person, while accreditation is a third-party endorsement of the certification body’s competence to provide that endorsement. In other words, we need accreditation bodies to ‘check the checkers’.

Components of professionalisation

To drive best-practice and increase the trustworthiness of the assurance ecosystem, professional bodies and other accreditation bodies should work alongside assurance providers, developers and independent researchers to build an AI assurance profession and think about what should characterise this profession.

Existing organisations already have some of the components for professionalisation (e.g. expertise, membership, incentives) that can be built on in this space, for example UKAS, the UK’s national accreditation body; BCS, The Chartered Institute for IT; and the Institute of Chartered Accountants in England and Wales (ICAEW). However, because AI assurance is a multi-disciplinary practice, no existing model of professionalisation wholly translates to this context.

It is currently too early to tell for certain which models will work best but as a next step we think professional and accreditation bodies should be testing how their accreditation and chartering models could be translated effectively into the AI context. Existing professional and accreditation bodies such as UKAS and BCS may need to partner together, or with others to ensure that they can provide the full range of expertise for accreditation in AI assurance.

Accreditation can operate at different levels: individuals can be chartered by a professional body, specific services or schemes can be accredited, and organisations, such as certification bodies, can be accredited. These types of accreditation could all be used together in an AI assurance ecosystem.

Because AI systems will be deployed across sectors of the economy, AI assurance services will have to be equally broad to ensure that AI systems are trustworthy across these contexts. Because of this breadth, it is not yet clear which professional or other accreditation bodies are best placed to represent AI assurance and think about options for professionalisation.

As a first step towards thinking about options for an AI assurance profession, professional bodies and other accreditation bodies will need to determine who has authority over AI assurance services in different domains, and whether that authority lies with existing bodies or requires new, specialised ones.

Individual accreditation

Individuals or organisations can be accredited to recognise a particular status, qualification or expertise. To support a trustworthy AI assurance ecosystem, accreditation bodies will be needed to manage and communicate the quality of assurance services and to ensure that those services are reliable and trustworthy.

Chartered status is a form of accreditation that applies to individual professionals. Professional bodies that oversee chartered status have a duty to act in the public interest rather than in the interest of their members. Unlike a licence to practise a profession, chartered status is not a precondition for practising; however, it does designate a status based on qualifications, integrity and good practice.

In a mature AI assurance ecosystem, individual practitioners should be able to be accredited to establish standards of expertise and integrity, to build justified trust in assurance services.

In a rapidly developing and very broad field, it seems unlikely that a single professional standard will emerge, or that professional accreditation will be universal or mandatory. This is probably desirable: it strikes a balance between encouraging quality and avoiding a barrier to entry for new, innovative assurance providers. However, over time we would expect a growing number of consumers of assurance services to demand such certifications, and individual regulators to expect them within individual regulated use cases.

Accrediting assurance services and providers

As well as individual professional accreditation, there will also be a need for accreditation of assurance services.

There are a number of ways of structuring this. Specific services could be accredited, for example a third-party certification scheme which can be awarded to businesses to demonstrate their compliance with AI quality management standards. This form of accreditation would rely on the development of quality management standards for AI and agreed benchmarks for certification against this standard. Without first developing these standards it will not be possible to assess the quality or trustworthiness of different certification schemes.

Assurance service providers could also be accredited to recognise their expertise and performance more broadly. Throughout the professional services sector, organisations such as legal, accounting and other financial services firms are accredited to indicate the highest standards of technical expertise and client service. For example, the Lexcel legal quality practice mark is awarded by The Law Society, the professional association representing and governing solicitors. Similarly, in product safety, UKAS accreditation provides an authoritative statement on the technical competence of the UK’s conformity assessment bodies.

Accreditation of an assurance service provider can work alongside the accreditation of individuals. For example, in financial accounting, an executive could procure the services of an auditing firm accredited by the Institute of Chartered Accountants in England and Wales (ICAEW). The auditors themselves could also then be chartered accountants with ACA accreditation, awarded by the ICAEW.

Narrower accreditation of specific services such as certification schemes will be a practical first step towards building trust in assurance services. Accreditation of specific services requires standardisation in the specified area, whereas accrediting assurance providers more broadly requires standardisation and agreement on best practice across a number of different areas of assurance practice.

To support the development of professionalisation for AI assurance, the CDEI will convene forums and explore pilots with existing accreditation bodies (UKAS) and professional bodies (BCS, ICAEW) to assess the most promising routes to professionalising AI assurance. Because AI is a multipurpose technology with impacts across the economy, developing routes to professionalisation will require coordination between a number of different actors.

Role of regulation in AI assurance

An effective assurance ecosystem is key to effective regulation in many areas. It enables regulators to focus their own limited resources on high-risk, contentious or novel areas, while leaving an inherently more scalable assurance ecosystem to ensure broader good practice. Regulators therefore have a lot to gain from the development of a mature AI assurance ecosystem, but they also have a key role in bringing it about.

Regulators play a direct role as assurance service providers, as they can audit and inspect AI as part of their enforcement activity, such as the ICO’s assurance function in data protection. Beyond this direct role, regulators can support assurance by turning the concerns they have about AI systems into regulatory requirements and making them assurable. Regulators and professional bodies have a key role in setting expectations for what is a suitable level and type of assurance in individual sectors and use cases, and this unique role is a crucial ‘supporting structure’ that enables an assurance ecosystem to develop.

Many regulators have started setting out recommendations, and some, such as the ICO, MHRA and FCA, have produced assurable guidelines for AI in their sectors. In the future we would like to see all regulators setting out these sorts of recommendations and guidelines as AI emerges within their regulated scope or sector.

Example regulatory initiatives for AI assurance

The ICO is currently developing an AI Auditing Framework (AIAF), which has three distinct outputs. The first is a set of tools and procedures for its assurance and investigation teams to use when assessing the compliance of organisations using AI. The second is detailed guidance on AI and data protection for organisations. The third is an AI and data protection toolkit designed to provide further practical support to organisations auditing the compliance of their own AI systems.

The ICO has also published guidance with The Alan Turing Institute, Explaining Decisions Made With AI, which gives practical advice to help organisations explain the processes, services and decisions delivered or assisted by AI to the individuals affected by them.

The Medicines and Healthcare products Regulatory Agency (MHRA) has developed an extensive work programme to update regulations applying to software and artificial intelligence as a medical device. The reforms offer guidance for assurance processes and on how to interpret regulatory requirements to demonstrate conformity.

Regulators are well placed to assess different risks and the characteristics of assurance subject matter within their sector. For example, a medical device with an AI component will require far stricter assurance than a chatbot used to make content recommendations in a media app.

Currently there is ‘cross-sector’ legislation and regulators in areas like data protection (the Information Commissioner’s Office), competition (Competition and Markets Authority), human rights and equality (Equality and Human Rights Commission), as well as ‘sector-specific’ legislation and regulators such as the Financial Conduct Authority and Medicines and Healthcare products Regulatory Agency.

Regulators can shape accountabilities and incentives within their sectors so that these risks are appropriately managed between internal and external (independent) assurance providers. Regulators could also help to determine the appropriate balance between formal requirements and the professional judgement of developers and assurance professionals, reflecting the types of risk and the characteristics of different subject matter.

To effectively identify risks and shape accountabilities for AI, regulators will need a mix of skills and capabilities. Some regulators have started to build this capacity, but it will be needed more widely as AI systems are deployed across an increasing number of regulated sectors. For example, model risk is an established area in financial services, whereas other regulators that are already finding AI issues emerging within their remit have limited capacity and expertise in this area.

Additionally, as the use of AI systems becomes more widespread across sectors, clear regulatory scope will need to be established between regulators with similar and overlapping mandates. Regulators will also need to decide in which contexts assurance should be sought directly by regulators (regulatory inspection), when it should be delegated to assurance providers, and where assumed conformity is appropriate.

Enabling independent research

As demand for assurance grows, independent researchers should play an increasingly important role in the assurance ecosystem, supporting assurance providers and actors in the AI supply chain.

Academic researchers are already actively contributing to the development of assurance techniques and measurements, and to the identification of risks relevant to AI. For example, Joy Buolamwini’s research at MIT was critical to uncovering racial and gender bias in AI services from companies such as Microsoft, IBM and Amazon. This research has since been industrialised through NIST’s Face Recognition Vendor Test (FRVT) programme. Similarly, researchers are supporting regulators in considering AI developments by highlighting untrustworthy development practices and gaps in regulatory regimes.

The cybersecurity ecosystem offers an example of how researchers could build a more established role in the AI assurance ecosystem. In cybersecurity, independent security researchers play a key role in identifying security issues, and clear norms have developed to guide how responsible companies interact with them. Companies provide routes for the responsible disclosure of security flaws and even offer bug bounties (financial incentives) for identifying and disclosing vulnerabilities.

As in the cybersecurity industry, routes should be developed for independent researchers to contribute to the development of assurance techniques and the disclosure of risks. Independent researchers could help to develop new assurance tools, identify novel risks and provide independent oversight of assurance services. To build this role into the AI assurance ecosystem, independent researchers will need to increase their focus on issues relevant to industry needs, if they are not already doing so. At the same time, industry stakeholders need to give researchers the space to contribute meaningfully to the development of tools and the identification of risks. Government can also play a convening role here, ensuring that the best independent research is linked to AI assurance providers.

As well as academic researchers, journalists and activists will have an important role to play in providing scrutiny and transparency throughout the ecosystem. There is a strong ecosystem of non-profit organisations in this space, such as AlgorithmWatch, which is committed to analysing automated decision-making (ADM) systems and their impact on society. These independent organisations will play an ongoing role in keeping the public informed about the development and deployment of AI systems that could affect them or their communities. Going forward, it will be important to highlight both good and bad practices, to enable better public awareness, increased consumer choice and justified trust.

A mature ecosystem requires ongoing effort

We have deliberately used the word ecosystem to describe the network of roles needed for assurance. These are interdependent roles that co-evolve and rely on a balance between the competing interests of different actors.

Ongoing tensions

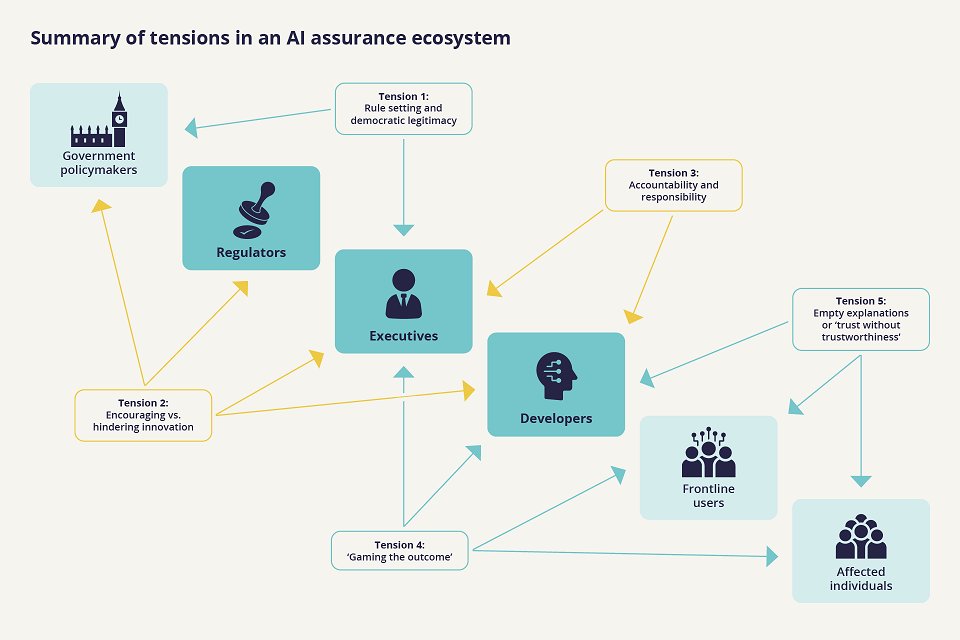

Some fundamental tensions in assurance ecosystems can be managed but do not go away, even in mature ecosystems. These include gaming, conflicts of interest, the power dynamics behind standards-making, balancing innovation against risk minimisation, and responsibility for good AI decisions.

Tension 1 lies between regulators, who may want or need specific rules or requirements that they can enforce, and government, which may be intentionally hands-off when it comes to setting those standards or the policy objectives that drive them. Governments may be hesitant to set specific rules for two understandable reasons. First, if they set them prematurely, they might set the bar too low or be guided by popular sentiment rather than careful consideration. Second, there may be aspects of regulation that government feels are beyond the scope of the state. For example, certain aspects of codifying fairness might need to be left to individual judgement.

Tension 2 arises from differing trade-offs between minimising risk and encouraging innovation. Governments, regulators, developers and executives each face a different balance of the risks and benefits of these technologies, and often have incentives that are in tension. Managing this requires a balanced approach that considers risks and benefits across the ecosystem. The ideal arrangement is one where assurance gives greater confidence to both developers and purchasers of AI systems and enables wider, safer adoption.