Commentary on curriculum research - phase 3

Amanda Spielman provides a commentary on phase 3 of Ofsted's research into the school curriculum.

In January, I will consult on our new education inspection framework (EIF). As I have already announced, the heart of our proposals will be to refocus inspections on the quality of education, including curriculum intent, implementation and impact.

To ensure that inspection of the quality of education is valid and reliable, I commissioned a major, 2-year research study into the curriculum. I would like to thank the school leaders and teachers who have contributed to this work. We visited 40 schools in phase 1, 23 schools in phase 2 and now 64 schools in phase 3. When you add the focus groups, reviews of inspection reports and other methods, it’s clear that this is a significant study and we can be confident in its conclusions.

Read Amanda Spielman’s commentaries on phase 1 and phase 2 of Ofsted’s curriculum research.

To recap, in phase 1 of the research, we attempted to understand more about the current state of curricular thinking in schools. We found that many schools were teaching to the test and teaching a narrowed curriculum in pursuit of league table outcomes, rather than thinking about the careful sequencing of a broad range of knowledge and skills. This was disappointing but unsurprising. We have accepted that inspection itself is in part to blame. It has played too great a role in intensifying the focus on performance data rather than complementing it.

Having found that some schools lacked strong curricular thinking, phase 2 sought to look at the opposite – those schools that had invested in curriculum design and aimed to raise standards through the curriculum. Although we went to schools that had very different approaches to the curriculum, we found some common factors that appear related to curriculum quality:

- the importance of subjects as individual disciplines

- using the curriculum to address disadvantage and provide equality of opportunity

- regular curriculum review

- using the curriculum as the progression model

- intelligent use of assessment to inform curriculum design

- retrieval of core knowledge baked into the curriculum

- distributed curriculum leadership

In phase 3, which is the subject of this commentary, we wanted to find out how we might inspect aspects of curriculum quality, including whether the factors above can apply across a much broader range of schools.

Read the phase 3 report ‘Curriculum research: assessing intent, implementation and impact’.

We also wanted to move beyond just looking at curriculum intent to looking at how schools implemented that thinking and what outcomes it led to. There has been some debate since we published my commentary on phase 2 about whether this would lead to an Ofsted-approved curriculum model. However, to reiterate, there will be no ‘Ofsted curriculum’. We will recognise a range of different approaches.

Phase 3 of our curriculum research shows that inspectors, school leaders and teachers from across a broad range of schools can indeed have professional, in-depth conversations about curriculum intent and implementation. Crucially, the evidence also shows that inspectors were able to make valid assessments of the quality of curriculum that a school is providing. Both parties could see the distinction between intent and implementation, and inspectors could see differences in curriculum quality between schools and also between subject departments within schools.

Importantly, what we also found was that schools can produce equally strong curricula regardless of the level of deprivation in their communities, which suggests that our new approach could be fairer to schools in disadvantaged areas. This is distinctly encouraging as we move towards the new inspection framework. You can read the full findings of this research study. I have summarised the research design and main findings below.

Curriculum study – phase 3

In phase 3, we wanted to design a model of curriculum assessment that could be used across all schools and test it to see whether it produced valid and reliable results. Based on the phase 2 findings, discussions with expert HMI and our review of the academic literature, we came up with several hypotheses (detailed in the full report) and 25 indicators of curriculum quality to test (detailed at the end of this commentary). These indicators will not be directly translated into the new inspection framework. First, they were only tested in schools, not early years provision or further education and skills providers. Second, 25 indicators is too many for inspectors to use on an inspection, especially given the short timescales of modern inspection practice. What we were aiming to do was first to prove the concept (i.e. that it is possible to make valid and reliable assessments of quality) and second, to find out which types of indicators did that most clearly.

The 25 indicators were underpinned by a structured and systematic set of instructions for inspectors about how to use them for the research. Using conversations with senior leaders and subject leaders and collecting first-hand evidence of implementation, inspectors were able to make focused assessments of schools against each of the indicators. Inspectors used a 5-point scale, where 5 was the highest, to help distance inspectors’ thinking from the usual Ofsted grades. The full descriptors are at the end of this commentary, but by way of illustration:

- a score of 5 means ‘this aspect of curriculum underpins/is central to the school’s work/embedded practice/may include examples of exceptional curriculum’

- a score of 1 means ‘this aspect is absent in curriculum design’

Within each school, inspectors looked at 4 different subjects: 1 core and 3 foundation. This allowed us to look at the level of consistency within each school, but also to find out more broadly which subjects, if any, had more advanced curricular thinking behind them. Inspectors also gave each school an overall banding, again from 5 to 1.

This gave us 71 data points for each school, based on all the evidence gathered. While this approach would not be suitable for an inspection, what it allowed us to do was to carry out statistical analyses to look at the validity of our research model and to refine and narrow the indicators to those that more clearly explained curriculum quality.

We visited 33 primary schools, 29 secondaries and 2 special schools. The sample was balanced in order to test the validity of our curriculum research model across a range of differing school contexts. The main selection criteria were: previous inspection judgements (outstanding, good and requires improvement (RI) only), geographical location (Ofsted regions) and school type (local authority (LA) maintained/academies), although we over-sampled for secondary schools and schools that were judged outstanding or RI at their last routine inspection. We ensured a wide spread in terms of performance data. Importantly, we also took care to select a range of institutions across an area-based index of deprivation. This meant that we had roughly equal numbers of schools in more and less deprived areas.

Primary/secondary

Figure 1: Curriculum overall banding by school phase

| School phase | Band 1 | Band 2 | Band 3 | Band 4 | Band 5 | Total |

|---|---|---|---|---|---|---|

| Primary | 3 | 12 | 10 | 6 | 2 | 33 |

| Secondary | - | 3 | 10 | 17 | 1 | 31 |

| Total | 3 | 15 | 20 | 23 | 3 | 64 |

The 2 special schools are included in the secondary school data.

Figure 1 shows a clear difference in the distribution of the overall bandings between primary and secondary schools. Only 8 out of 33 (around a quarter) primary schools scored highly, i.e. a 4 or a 5 overall, whereas 16 out of 29 secondaries (over half) did.

Only 18 out of 64 schools scored poorly, i.e. a 1 or a 2, which is more encouraging than our phase 1 research might have suggested.

When we dig down into the subject-level data, we can begin to see why this might be.

Figure 2: Indicator 6a by subject departments assessed during the 33 primary school visits

| Subject area | Band 1 | Band 2 | Band 3 | Band 4 | Band 5 | Total |

|---|---|---|---|---|---|---|

| Core | | | | | | |

| English | - | 1 | 6 | 9 | 1 | 17 |

| Maths | 1 | 1 | 8 | 6 | 1 | 17 |

| Science | 2 | 4 | 6 | 1 | 1 | 14 |

| Foundation | | | | | | |

| Humanities | 7 | 7 | 11 | 5 | - | 30 |

| Arts | 4 | 9 | 6 | 2 | 1 | 22 |

| PE | 1 | - | 2 | 6 | 1 | 10 |

| Technology | 6 | 4 | 4 | 2 | 2 | 18 |

| Modern foreign languages | - | - | 2 | 1 | 3 | 6 |

| Total | 21 | 26 | 45 | 32 | 10 | 134 |

Indicator 6a: The curriculum has sufficient depth and coverage of knowledge in subjects.

Includes 2 subject reviews conducted in the primary phase of an all-through school.

Technology includes computer studies.

Figure 2 shows that when we look at subject depth and coverage in primary curricula, there are few low scores for the core subjects – just 9 scores of 1 or 2 out of 48 assessments. English and mathematics scores are particularly good. This would appear to be a result of 2 factors.

First, especially in key stage 1, literacy and numeracy are extremely important. Children cannot access other subjects if they do not have those basic reading, writing and calculation skills. This was recognised, albeit imperfectly, in the national strategies, which have given rise to the current modus operandi of many primary schools: English and mathematics in the morning and everything else in the afternoon.

Second, though, it is a truism that what gets measured gets done. English and mathematics are what are measured in primary schools. It is hardly surprising, then, that they get the most lesson time and most curricular attention from leaders. It is clearly possible to do this badly, as we found in phase 1 where some schools were practising SATs as early as Christmas in Year 6 and focusing on reading comprehension papers rather than actually encouraging children to read. However, our results here appear to suggest that many more primary schools are doing it well.

Unfortunately, the same cannot be said for the foundation subjects. It is disappointing to see so few higher scores in technology subjects, humanities and arts.

In phase 2 of our research, we saw that almost all of the primaries used topics or themes as their way of teaching the foundation subjects. However, the ones that were most invested in curriculum design had a clear focus on the subject knowledge to be learned in each subject and designed their topics around that. What appears to happen more often, though, is a selection of topics being taught that do not particularly link together or allow good coverage of and progression through the subjects. Figure 2 shows, for example, that 7 schools had a complete absence of curriculum design in humanities.
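The contrast between core and foundation subjects in Figure 2 can be checked by adding up the rows of the table. The sketch below is illustrative only and is not part of Ofsted's analysis: the counts are copied from Figure 2, and the names `FIGURE_2`, `GROUPS` and `band_share` are hypothetical.

```python
# Band counts for indicator 6a in primary schools, copied from Figure 2.
# Each list holds the number of assessments in bands 1 to 5, in that order.
FIGURE_2 = {
    "English": [0, 1, 6, 9, 1],
    "Maths": [1, 1, 8, 6, 1],
    "Science": [2, 4, 6, 1, 1],
    "Humanities": [7, 7, 11, 5, 0],
    "Arts": [4, 9, 6, 2, 1],
    "PE": [1, 0, 2, 6, 1],
    "Technology": [6, 4, 4, 2, 2],
    "Modern foreign languages": [0, 0, 2, 1, 3],
}

# Hypothetical grouping that mirrors the 'Core' and 'Foundation' rows of the table.
GROUPS = {
    "Core": ["English", "Maths", "Science"],
    "Foundation": ["Humanities", "Arts", "PE", "Technology",
                   "Modern foreign languages"],
}


def band_share(subjects, bands):
    """Return (count, total): assessments in the given bands, and all assessments."""
    count = sum(FIGURE_2[s][b - 1] for s in subjects for b in bands)
    total = sum(sum(FIGURE_2[s]) for s in subjects)
    return count, total


for group, subjects in GROUPS.items():
    low, total = band_share(subjects, bands=(1, 2))
    high, _ = band_share(subjects, bands=(4, 5))
    print(f"{group}: {low}/{total} scored 1 or 2, {high}/{total} scored 4 or 5")
```

Run on these counts, the foundation subjects show a much larger share of scores of 1 or 2 than the core subjects do.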

The picture appeared much stronger in secondary schools than in the primary schools we saw. There was considerably less difference between how well foundation subjects were being implemented compared with the core subjects.

Figure 3: Indicator 6a by subject departments assessed during the 29 secondary school visits

| Subject area | Band 1 | Band 2 | Band 3 | Band 4 | Band 5 | Total |

|---|---|---|---|---|---|---|

| Core | | | | | | |

| English | 1 | - | 3 | 6 | 6 | 16 |

| Maths | - | - | 3 | 6 | 5 | 14 |

| Science | - | - | 2 | 4 | 2 | 8 |

| Foundation | | | | | | |

| Humanities | 4 | 2 | 6 | 6 | 8 | 26 |

| Arts | - | 1 | 2 | 4 | 6 | 13 |

| PE | - | 1 | 1 | 1 | 3 | 6 |

| Technology | - | 2 | 2 | 2 | 2 | 8 |

| Modern foreign languages | 1 | - | 3 | 4 | - | 8 |

| Other | 2 | - | 5 | 4 | 4 | 15 |

| Total | 8 | 6 | 27 | 37 | 36 | 114 |

Indicator 6a: The curriculum has sufficient depth and coverage of knowledge in subjects.

One of the secondary schools visited was an all-through school. Only 2 subject reviews were carried out in the secondary phase for this visit.

Technology includes computer studies.

Arts subjects (art, music and drama) appeared particularly strong, with 10 out of 13 arts departments scoring a 4 or a 5. However, some subjects were still being implemented weakly compared with English and mathematics. In modern foreign languages, many of the features of successful curriculum design and implementation were absent or limited due to the lack of subject specialists. History was also less well organised and implemented in a number of schools, often to the detriment of a clear progression model through the curriculum. A lack of subject expertise, especially in leadership roles, contributed to these weaknesses.

The research visits to the 2 special schools in the sample showed that the curriculum indicators worked equally well in this context. We are unable to give more detail about the curriculum quality in these schools as it would be possible to identify which schools we are referring to. This would violate our research protocol and our agreement with the schools.

Ofsted grade, disadvantage and progress

Figure 4: Curriculum overall banding by the overall effectiveness judgement of the schools visited at their last routine inspection

| Overall effectiveness | Band 1 | Band 2 | Band 3 | Band 4 | Band 5 | Total |

|---|---|---|---|---|---|---|

| Outstanding | - | 2 | 7 | 7 | 3 | 19 |

| Good | 2 | 7 | 9 | 12 | - | 30 |

| Requires improvement | 1 | 6 | 4 | 4 | - | 15 |

| Total | 3 | 15 | 20 | 23 | 3 | 64 |

Overall effectiveness judgements are based on data at time of sampling.

Figure 4 shows that there is a positive correlation between the banding schools received on their curriculum and their current Ofsted grade, albeit a weak one. This was driven in part by the relative curriculum weakness of the primary schools in the sample. The 3 schools that achieved the highest curriculum score all do have a current outstanding grade. However, we also assessed 9 outstanding schools as band 2 or 3 and 9 good schools as band 1 or 2.
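The link between grade and banding in Figure 4 can be illustrated with a rank-based measure of association. The sketch below is not the analysis Ofsted ran, which this commentary does not specify. It assumes Goodman and Kruskal's gamma as one possible measure and uses the band counts from Figure 4. The names `FIGURE_4` and `goodman_kruskal_gamma` are hypothetical.

```python
# Band counts (bands 1 to 5) from Figure 4, with the inspection grades
# ordered from lowest to highest so that both variables are ordinal.
FIGURE_4 = [
    [1, 6, 4, 4, 0],   # Requires improvement
    [2, 7, 9, 12, 0],  # Good
    [0, 2, 7, 7, 3],   # Outstanding
]


def goodman_kruskal_gamma(table):
    """Return gamma = (C - D) / (C + D) for an ordered contingency table.

    C counts pairs of schools ranked the same way on both variables.
    D counts pairs ranked in opposite ways. Tied pairs are ignored.
    """
    concordant = 0
    discordant = 0
    n_rows, n_cols = len(table), len(table[0])
    for i in range(n_rows):
        for j in range(n_cols):
            for k in range(i + 1, n_rows):  # only rows with a higher grade
                for m in range(n_cols):
                    pairs = table[i][j] * table[k][m]
                    if m > j:
                        concordant += pairs
                    elif m < j:
                        discordant += pairs
    return (concordant - discordant) / (concordant + discordant)


gamma = goodman_kruskal_gamma(FIGURE_4)
# 0 would mean no association and 1 a perfect positive association.
print(f"gamma = {gamma:.2f}")
```

On these counts the statistic is positive but well short of 1, in line with the description of a positive but weak relationship. Gamma ignores tied pairs, so it tends to read higher than other rank correlations on the same table.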

It is worth remembering that some of the outstanding schools have not been inspected for over a decade, which means that the validity of that ‘outstanding’ grade is not certain.

A quarter of the RI schools visited were assessed as band 4, a high score. We know that under the current system it is harder to get a good or outstanding grade if your test scores are low, even if this is primarily a result of a challenging or deprived intake. This research suggests that some RI schools may in fact have strong curricula and should be rewarded for that.

Figure 5: Curriculum overall banding by the income deprivation affecting children index quintile of each school

| IDACI | Band 1 | Band 2 | Band 3 | Band 4 | Band 5 | Total |

|---|---|---|---|---|---|---|

| Quintile 1 (least deprived) | 1 | 4 | 4 | 4 | - | 13 |

| Quintile 2 | - | 4 | 5 | 3 | 2 | 14 |

| Quintile 3 | - | - | 5 | 5 | 1 | 11 |

| Quintile 4 | 1 | 4 | 3 | 5 | - | 13 |

| Quintile 5 (most deprived) | 1 | 3 | 3 | 6 | - | 13 |

| Total | 3 | 15 | 20 | 23 | 3 | 64 |

Deprivation is based on the income deprivation affecting children index. The deprivation of a provider is based on the mean of the deprivation indices associated with the home postcodes of the pupils attending the school rather than the location of the school itself. The schools are divided into 5 equal groups (quintiles), from ‘most deprived’ (quintile 5) to ‘least deprived’ (quintile 1).

Figure 5 shows that there is no clear link between the deprivation levels of a school’s community and a school’s curriculum quality. In fact, there are more schools in the top 3 bands that are situated in the most deprived communities (69%) than there are in the least deprived (62%), although the numbers of schools in each quintile are small. This is encouraging as we move towards the new inspection framework. It suggests that a move away from using performance data as such a large part of the basis for judgement and towards using overall quality of education will allow us to reward schools in challenging circumstances that are raising standards through strong curricula, much more equitably.
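The 69% and 62% figures follow directly from the counts in Figure 5. As a minimal sketch, with the counts copied from the table above and the names `FIGURE_5` and `share_in_bands` being hypothetical:

```python
# Band counts (bands 1 to 5) per IDACI quintile, copied from Figure 5.
FIGURE_5 = {
    "Quintile 1 (least deprived)": [1, 4, 4, 4, 0],
    "Quintile 2": [0, 4, 5, 3, 2],
    "Quintile 3": [0, 0, 5, 5, 1],
    "Quintile 4": [1, 4, 3, 5, 0],
    "Quintile 5 (most deprived)": [1, 3, 3, 6, 0],
}


def share_in_bands(counts, lowest_band):
    """Return the percentage of schools at or above `lowest_band` (1 to 5)."""
    return 100 * sum(counts[lowest_band - 1:]) / sum(counts)


for quintile, counts in FIGURE_5.items():
    share = share_in_bands(counts, lowest_band=3)
    print(f"{quintile}: {share:.0f}% in bands 3 to 5")
```

For quintile 5 this gives 9 of 13 schools (69%) and for quintile 1 it gives 8 of 13 (62%), matching the figures quoted. With only 11 to 14 schools in each quintile, a single school moves a percentage by roughly 7 to 9 points, which is why the comparison needs to be read with caution.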

Figure 6: Curriculum overall banding by key stage 2 and key stage 4 progress measure bandings

| Progress | Band 1 or 2 | Band 3 | Band 4 or 5 | Total |

|---|---|---|---|---|

| Above average | 3 | 4 | 8 | 15 |

| Average | 5 | 10 | 12 | 27 |

| Below average | 3 | 3 | 2 | 8 |

| No data | 7 | 3 | 2 | 12 |

| Total | 18 | 20 | 24 | 62 |

Key stage 4 Progress 8 data (2018) and key stage 2 mathematics data (2017) have been merged for statistical disclosure control purposes.

The proportion of schools in each banding differs between the various progress measures, and the key stage 4 progress measure encompasses more subjects than the single-subject measures used at key stage 2.

Data for the 2 special schools is not included.

Schools with no data are newly opened schools or infant schools.

The progress bandings shown are based on the 5 progress measure bandings calculated by the Department for Education. ‘Above average’ combines ‘above average’ and ‘well above average’; ‘Below average’ combines ‘below average’ and ‘well below average’.

Figure 6 shows the link between published progress data and the overall curriculum banding given to each school. The vast majority of those scoring a band 4 or 5 have above average or average progress scores (20 out of 24). But there are clearly some schools in our sample where despite having strong progress scores, we found their curriculum to be lacking. It would be wrong to speculate on the reasons for this, but it is clearly something that inspections under the new framework would look to explore.

Intent and implementation

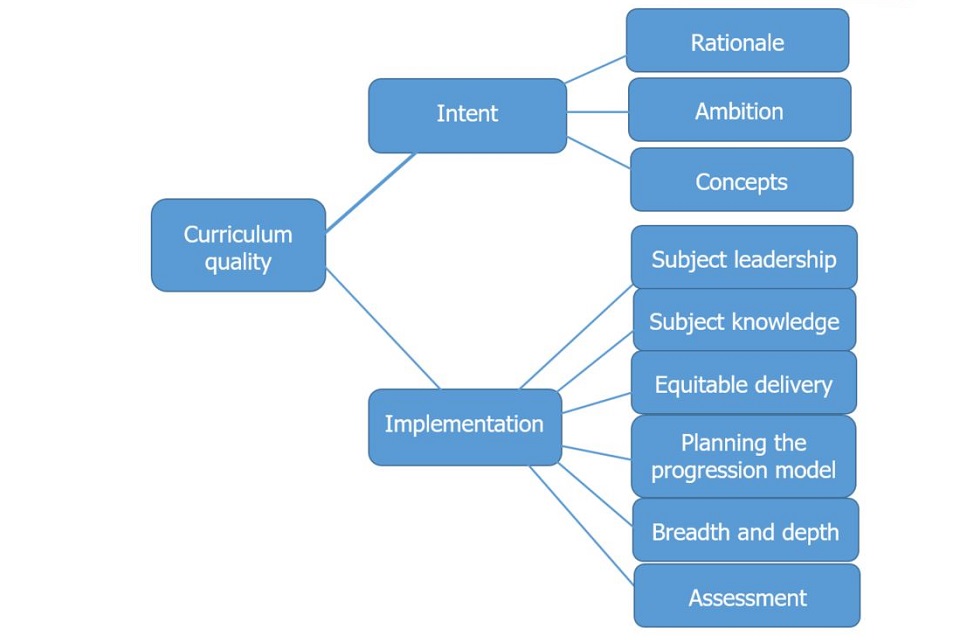

We carried out further statistical analyses of our research model. Although we had 25 indicators in the model, our analysis of how the indicator scores related to each other and to the overall banding showed that they really boiled down to 2 main factors: intent and implementation.

Figure 7 visualises this statistical model of curriculum quality and the relationship between intent and implementation. There is more detail on how we calculated this in the main report.

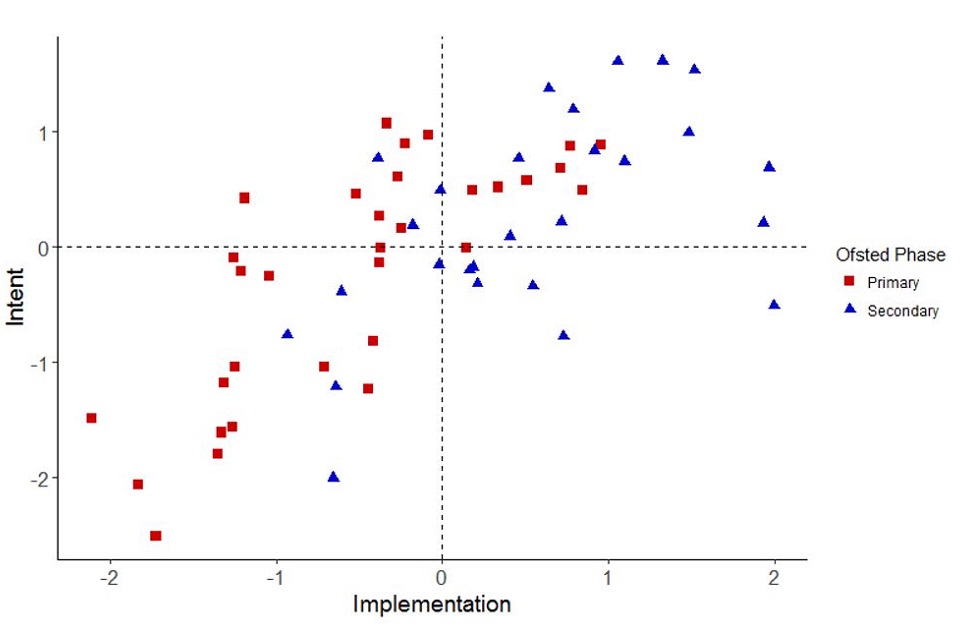

Figure 8: Scores for the intent and implementation indicators at the individual school-level, grouped by phase

Figure 8 expands on the model and shows that the scores for intent and implementation factors for most schools were well linked. However, there are some schools in the top-left and bottom-right sections in which inspectors were able to see a difference in quality between the intent and the implementation. This reinforces the conclusion above that intent and implementation can indeed be distinct. It should also dispel the suggestion by some commentators that our inspectors will be won over by schools that ‘talk a good game’ but do not put their intent into practice.

Most of the schools that scored well for intent but not so well for implementation (top left) were primaries. It is not hard to see primaries, particularly small ones, being less able to put their plans into action. It is difficult in many areas to recruit the right teachers. In small primaries, it is asking a lot of teachers to think about and teach the curriculum right across the range of subjects and even across year groups. Inspectors will of course consider these challenges when making their judgements.

In contrast, those schools that scored much better for implementation than for intent were all secondaries (bottom right). Again, it is not hard to imagine why that might be. Weak central leadership and lack of whole-school curriculum vision were more easily made up for in some of the secondary schools, particularly large ones, by strong heads of departments and strong teaching. This could also be a consequence of GCSE exam syllabuses playing the role of curricular thinking in the absence of a school’s own vision. This is another nuance that inspectors will deal with under the new framework.

The variation between intent and implementation scores suggests that our research model is valid. It appears to be assessing the right things, in the right way, to produce an accurate and useful assessment of curriculum intent and implementation.

Despite the fact that relatively few schools scored highly across the board on these measures, particularly at primary, it is worth reiterating our commitment to keeping the overall proportions of schools achieving each grade roughly the same between the old framework and the new framework. We are not ‘raising the bar’. That means explicitly that we will not be ‘downgrading’ vast numbers of primary or secondary schools. Instead, we recognise that curriculum thinking has been deprioritised in the system for too long, including by Ofsted. We do not expect to see this change overnight. The new framework represents a process of evolution rather than revolution. To set the benchmark too high would serve neither the sector nor pupils well. Instead, we will better recognise those schools in challenging circumstances that focus on delivering a rich and ambitious curriculum. At the same time, when we see schools excessively narrowing the curriculum and gaming performance data, we will reflect that in their judgements.

Looking towards the EIF 2019

Through the autumn term, we have been piloting inspections under the proposed new framework. These pilots have drawn heavily from our curriculum research, including the indicators listed below. When we consult on our proposals in January, we will have behind us:

- the pilots

- our curriculum research

- research on lesson observation and work scrutiny

- an interrogation of the academic literature on educational effectiveness

The proposals will be detailed and firmly grounded in evidence.

It will be a full consultation and we genuinely welcome proposals for refinement. As the curriculum research has shown, there are still some challenges for us, particularly how we calibrate our judgement profile. Our aim over the spring term will be to listen to as many of you as possible, to address your concerns and hopefully hear your positivity about this new direction too. This research has given us a lot of confidence that our plans to look beyond data and assess the broader quality of education are achievable and necessary.

Figure 9: List of curriculum indicators in the research model

| No. | Indicator |

|---|---|

| 1a | There is a clear and coherent rationale for the curriculum design |

| 1b | Rationale and aims of the curriculum design are shared across the school and fully understood by all |

| 1c | Curriculum leaders show understanding of important concepts related to curriculum design, such as knowledge progression and sequencing of concepts |

| 1d | Curriculum coverage allows all pupils to access the content and make progress through the curriculum |

| 2a | The curriculum is at least as ambitious as the standards set by the National Curriculum / external qualifications |

| 2b | Curriculum principles include the requirements of centrally prescribed aims |

| 2c | Reading is prioritised to allow pupils to access the full curriculum offer |

| 2d | Mathematical fluency and confidence in numeracy are regarded as preconditions of success across the national curriculum |

| 3a | Subject leaders at all levels have clear roles and responsibilities to carry out their role in curriculum design and delivery |

| 3b | Subject leaders have the knowledge, expertise and practical skill to design and implement a curriculum |

| 3c | Leaders at all levels, including governors, regularly review and quality assure the subject to ensure it is implemented sufficiently well |

| 4a | Leaders ensure ongoing professional development/training is available for staff to ensure curriculum requirements can be met |

| 4b | Leaders enable curriculum expertise to develop across the school |

| 5a | Curriculum resources selected, including textbooks, serve the school’s curricular intentions and the course of study and enable effective curriculum implementation |

| 5b | The way the curriculum is planned meets pupils’ learning needs |

| 5c | Curriculum delivery is equitable for all groups and appropriate |

| 5d | Leaders ensure interventions are appropriately delivered to enhance pupils’ capacity to access the full curriculum |

| 6a | The curriculum has sufficient depth and coverage of knowledge in the subjects |

| 6b | There is a model of curriculum progression for every subject |

| 6c | Curriculum mapping ensures sufficient coverage across the subject over time |

| 7a | Assessment is designed thoughtfully to shape future learning. Assessment is not excessive or onerous |

| 7b | Assessments are reliable. Teachers ensure systems to check reliability of assessments in subjects are fully understood by staff |

| 7c | There is no mismatch between the planned and the delivered curriculum |

| 8 | The curriculum is successfully implemented to ensure pupils’ progression in knowledge - pupils successfully ‘learn the curriculum’ |

| 9 | The curriculum provides parity for all groups of pupils |

Indicators 1 to 2 are indicators framed around curriculum intent; 3 to 7 are implementation indicators and 8 to 9 relate to impact.

Figure 10: Categories applied in the rubric for scoring the curriculum indicators

| 5 | 4 | 3 | 2 | 1 |

|---|---|---|---|---|

| This aspect of curriculum underpins/is central to the school’s work/embedded practice/may include examples of exceptional curriculum | This aspect of curriculum is embedded with minor points for development (leaders are taking action to remedy minor shortfalls) | Coverage is sufficient but there are some weaknesses overall in a number of examples (identified by leaders but not yet remedying) | Major weaknesses evident in terms of either leadership, coverage or progression (leaders have not identified or started to remedy weaknesses) | This aspect is absent in curriculum design |

List of schools visited

| School name | Local authority | Type | Phase |

|---|---|---|---|

| Arnold Woodthorpe Infant School | Nottinghamshire | Community School | Primary |

| Babington Academy | Leicester | Academy Converter | Secondary |

| Birches Green Infant School | Birmingham | Community School | Primary |

| Broadwater Primary School | Wandsworth | Community School | Primary |

| Carville Primary School | North Tyneside | Foundation School | Primary |

| Castle Manor Academy | Suffolk | Academy Converter | Secondary |

| Chapelford Village Primary School | Warrington | Academy Converter | Primary |

| Chetwynde School | Cumbria | Free School | Secondary |

| Chingford CofE Primary School | Waltham Forest | Voluntary Controlled School | Primary |

| Churchmead Church of England (VA) School | Windsor and Maidenhead | Voluntary Aided School | Secondary |

| City Academy Birmingham | Birmingham | Free School | Secondary |

| Corsham Primary School | Wiltshire | Academy Converter | Primary |

| Cosgrove Village Primary School | Northamptonshire | Community School | Primary |

| Cowley International College | St Helens | Community School | Secondary |

| Crossley Hall Primary School | Bradford | Community School | Primary |

| Ditton Park Academy | Slough | Free School | Secondary |

| Earlsdon Primary School | Coventry | Community School | Primary |

| Eden Girls’ School Coventry | Coventry | Free School | Secondary |

| Elmridge Primary School | Trafford | Academy Converter | Primary |

| Figheldean St Michael’s Church of England Primary School | Wiltshire | Academy Converter | Primary |

| Filey Church of England Nursery and Infants Academy | North Yorkshire | Academy Converter | Primary |

| Fir Vale School | Sheffield | Academy Converter | Secondary |

| Fowey River Academy | Cornwall | Academy Sponsor Led | Secondary |

| Harris Girls Academy Bromley** | Bromley | Academy Converter | Secondary |

| Holway Park Community Primary School | Somerset | Community School | Primary |

| Horndean Technology College | Hampshire | Community School | Secondary |

| Ixworth Free School | Suffolk | Free School | Secondary |

| JFS | Brent | Voluntary Aided School | Secondary |

| Ken Stimpson Community School | Peterborough | Community School | Secondary |

| Kettering Park Infant School | Northamptonshire | Academy Converter | Primary |

| King Edward VI Grammar School, Chelmsford | Essex | Academy Converter | Secondary |

| Lakenham Primary School | Norfolk | Foundation School | Primary |

| Lanchester Community Free School | Hertfordshire | Free School | Primary |

| Lansdowne School | Lambeth | Community Special School | Special |

| Linton Heights Junior School | Cambridgeshire | Academy Converter | Primary |

| Marfleet Primary School | Kingston upon Hull | Academy Converter | Primary |

| New Haw Community Junior School | Surrey | Academy Converter | Primary |

| Ormiston Park Academy | Thurrock | Academy Sponsor Led | Secondary |

| Our Lady and St Patrick’s, Catholic Primary | Cumbria | Voluntary Aided School | Primary |

| Parkside Primary School | East Riding of Yorkshire | Community School | Primary |

| Parley First School | Dorset | Community School | Primary |

| Pennington CofE School | Cumbria | Voluntary Controlled School | Primary |

| Penryn College | Cornwall | Academy Converter | Secondary |

| Princetown Community Primary School | Devon | Foundation School | Primary |

| Ravensbourne School | Havering | Academy Special Converter | Special |

| Ringway Primary School | Northumberland | Community School | Primary |

| Round Diamond Primary School | Hertfordshire | Community School | Primary |

| Sir Robert Pattinson Academy | Lincolnshire | Academy Converter | Secondary |

| Sir Thomas Boughey Academy | Staffordshire | Academy Converter | Secondary |

| Soar Valley College | Leicester | Community School | Secondary |

| Sompting Village Primary School | West Sussex | Community School | Primary |

| St Augustine’s Catholic College | Wiltshire | Academy Converter | Secondary |

| St John the Divine Church of England Primary School | Lambeth | Voluntary Aided School | Primary |

| St Katherine’s Church of England Primary School | Essex | Foundation School | Primary |

| St Mary Redcliffe and Temple School | Bristol | Voluntary Aided School | Secondary |

| The Barlow RC High School and Specialist Science College | Manchester | Voluntary Aided School | Secondary |

| The Bishop of Hereford’s Bluecoat School | Herefordshire | Voluntary Aided School | Secondary |

| The Cotswold School | Gloucestershire | Academy Converter | Secondary |

| The Marston Thorold’s Charity Church of England School | Lincolnshire | Voluntary Aided School | Primary |

| The North Halifax Grammar School | Calderdale | Academy Converter | Secondary |

| Trinity Catholic High School | Redbridge | Voluntary Aided School | Secondary |

| Upminster Infant School | Havering | Academy Converter | Primary |

| Westwood College | Staffordshire | Academy Converter | Secondary |

| Winklebury Junior School | Hampshire | Community School | Primary |

**The list of schools visited was amended on 19 December as Harris Academy Beckenham was included in error.

Updates to this page

Published 11 December 2018. Last updated 19 December 2018.

- Amended the list of schools visited as Harris Academy Beckenham was included in error instead of Harris Girls Academy Bromley.

- First published.